Sugu Sougoumarane discusses how to face the challenges of horizontally scaling MySQL databases through the Vitess distribution engine and Planetscale, a service built on top of Vitess. This episode explores ideas about topology management, sharding, Paxos, connection pooling, and how Vitess handles large transactions while abstracting complexity from the application layer.

Show Notes

- SE Radio #510: Deepthi Sigireddi on How Vitess Scales MySQL

- Michael Stonebraker

- www.planetscale.com

- Vitess

- www.cncf.io/projects/

- Twitter: @ssougou

- Planetscale blog

- Consensus Algorithms at Scale

Transcript

Transcript brought to you by IEEE Software magazine.

This transcript was automatically generated. To suggest improvements in the text, please contact [email protected] and include the episode number and URL.

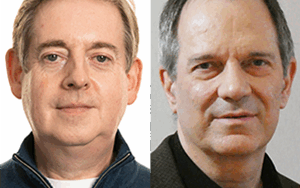

Roshan Diwakar 00:00:17 Welcome to Software Engineering Radio. This is your host, Roshan. Today I’m interviewing Sugu Sougoumarane, co-founder and CTO at PlanetScale. Before founding PlanetScale, Sugu worked at YouTube as a software engineer where he co-created Vitess, a database clustering system for horizontal scaling of MySQL. Sugu, welcome to the show. Is there anything you’d like to add to your background?

Sugu Sougoumarane 00:00:45 The only thing I would add is I’ve been working on databases for much longer, since the 90s where I was at Informix.

Roshan Diwakar 00:00:52 That’s great. So you have been working and building data systems for quite a while. I’d love to discuss how data systems have evolved over the past 30 years and what are some of the challenges we face in the modern era of large data systems. Before we go there, let’s talk about the origination of Vitess. What was the architecture at YouTube when it was starting to experience hyper-growth, both in terms of users and views, and what challenges were you facing?

Sugu Sougoumarane 00:01:25 This was the year 2006, late 2006 — about three years after Google bought YouTube — and we had actually already sharded the database at that point; I believe we had about four shards, but the number of outages we were experiencing was growing. It was somewhat exponential. We had reached a point where there were many outages every day, and our backs were against the wall. Any iterative improvements we were making were not catching up to those outages. At that time. Solomon, my co-creator of Vitess and me, we kind of took a step back together saying that we need something else that allows us to leap ahead of all these problems. So he actually went to a coffee shop, spent few days there, and created an enormous spreadsheet of every problem that we ever faced. What were the current solutions? What would be the best solution?

Roshan Diwakar 00:02:23 That’s an interesting way to organize your challenges so that you can see patterns or get a holistic view of the potential system you’re about to design. So for listeners who are curious about a method for system design, this may work.

Sugu Sougoumarane 00:02:39 And once you looked at that spreadsheet, the answer was almost obvious what needed to be done. The answer was, unless there is a layer between the application and the database, we are not going to be able to address all these problems, and all the problems that we are predicting will come in the future. And then we started small. So we started what is our biggest pain point? Our biggest pain point at that time were the number of connections that were going into MySQL. And the biggest problem we had was when a primary database went down — at that time we had, I think, about 5,000 connections going into the primary database. We would perform a failover and instantly, because YouTube is getting pounded by traffic; these web servers will instantly try to make 5,000 connections to the new primary and would bring it down. So that is when we said we need a layer in the middle that pools connections. So that was the first version of Vitess that we created, and the rest is history. After that, the beauty is once you figure out that there is a middle layer that can getting requests and sending them to the database, people then start looking for features in it. Can you do this? Can you filter that out? Can you black list queries? You know, so those kinds of things. That is how Vitess was started, and that is how it evolved.

Roshan Diwakar 00:04:00 Interesting. Of course, this is a distributed database system, and it is incredibly complex to build such systems. So what were some of the architectural decisions that you had to make at that early stage of designing? Did you anticipate all the traffic, all the number of databases that the system had to handle? And of course, there are CAP theorems and other complexities that you have to consider. So how much of the decision-making was done during the initial stage, or did you have to sort of go back and revisit any of the architectural decisions that you made?

Sugu Sougoumarane 00:04:38 The most difficult decision that we had to make was whether to write a parser. At that time, it was a very difficult decision. In other words, the thing is, do we use this just purely for connection pooling, or do we intend to do more with this tool? If we intend to do more to this tool, we need to understand the queries that are coming in. In order to do that, we are going to write a parser. So we said we want to eventually be able to understand the queries that are coming in and mediate them to the database, no? Decide what to do with it, what to do about the query. So, the big decision that we made was to say, we are going to write a parer. I used my old knowledge of YAC at that time. I had used the YAC (?) in ’89, I think, when we were trying to write a C++ compiler.

Sugu Sougoumarane 00:05:28 So all that knowledge came back to me. So we decided to write a parser, and that is a decision that has definitely paid off. That one decision allowed Vitess to become what it became today — essentially a full-fledged distributed database system. That is one decision. The CAP theorem is a very interesting thing. I think we should go over it later, but it is a very, very important factor in many of the decisions that we made later down the line with Vitess, especially related to ensuring durability and availability. Those played a very important part.

Roshan Diwakar 00:06:03 Got you. Yeah, let’s definitely go deeper later. Vitess has a number of features that enhances MySQL in the areas of performance, safety, monitoring, topology management, and sharding, right? And let’s walk through each of them. On Vitess’ website, it gives a number of features, especially in the performance, right? How does Vitess handle connection pooling and managed transactions?

Sugu Sougoumarane 00:06:25 So connection pooling is one of the first features that we did, and it is still one of the best performance parameters for MySQL. MySQL actually has improved. When we launched Vitess, we were on a very old version of MySQL 5.1, which is like supposed to be a dinosaur or nobody want to use it. So MySQL has improved, but still not enough. You still benefit from connection pooling, but more importantly, connection pooling solves problems beyond just MySQL performance. The one thing that it does is it handles spikes extremely well. So if you have 5,000 connections, 10,000 connections, actually at a later date, I think it would’ve been a lot more, I don’t even know — it would’ve been in the tens of thousands of connections, right? Going into MySQLs, unless Vitess was not there in the middle. When there are that many connections, a small spike would create a domino effect where everything starts to back up and nothing ever completes, right?

Sugu Sougoumarane 00:07:29 And because of the fact that it had connection pool, what it would do is it would keep the database performing at its most optimal performance because we don’t make more connections to MySQL than what it can handle at max load, which means that if there is a huge overload, all other queries are waiting and MySQL does not get pushed over its limit. There is a problem where once MySQL gets pushed over its limit, it takes a while to fall back to normal performance parameters. So it prevents that huge problem where an outage causes an outage.

Roshan Diwakar 00:08:07 Gotcha. So scaling up and scaling down was always an issue.

Sugu Sougoumarane 00:08:10 Yeah. Like, if there is a sudden huge spike, the database remains protected and we do the best we can to satisfy as many queries as possible.

Roshan Diwakar 00:08:18 Got you. You mentioned earlier that writing the query parser was the most important decision that you ever made. It should obviously help you in query optimization. And you also mentioned something about query deduping. Would you expand on how query optimization is done?

Sugu Sougoumarane 00:08:35 Yeah. Query deduping was a super cool feature. There is a story — this was inspired by, this story that happened at YouTube — was when engineers write software, they make assumptions about the data. And one of the assumptions that we had made is how many videos can a person upload, 500 you know? 500 is like, you have to upload moire than a video every day for one year to be able to upload 500 videos. So when we queried the table for a user’s videos, we didn’t put a limit class, right? So we say select all videos that this user has and then displayed . And it was fine until there was a person that had 250,000 videos.

Roshan Diwakar 00:09:21 Wow. Okay.

Sugu Sougoumarane 00:09:22 That’s nothing today, by the way.

Roshan Diwakar 00:09:26 It must be some automated bot or something.

Sugu Sougoumarane 00:09:29 Yeah, probably. It was still fine until someone listed their profile on YouTube’s homepage. So what happens is every page hit, every homepage hit ended up pulling these 250,000 videos. What does query deduping does, is if multiple queries come, identical queries come, and one of them is already gone and is executing, all the other queries just wait for that query to finish, and the result is shared across all of them. So, in this particular case, the 250,000 video case, yes, that query would hit the database, but only once, and it would hit the database again only after it has completed.

Roshan Diwakar 00:10:16 Gotcha. So that means you also had to implement some sort of a caching mechanism in this query layer.

Sugu Sougoumarane 00:10:22 This was implemented without caching because all that happened was the other queries just waited for this query to return the result, and they just shared the result. Believe it or not, we don’t know how many such dedupings it caused, but that issue never came back, ever . We never faced that issue ever again at YouTube once that feature was implemented.

Roshan Diwakar 00:10:42 Gotcha. What other performance benefits that you were able to gain out of the query parser? Like any other query optimization techniques that you used?

Sugu Sougoumarane 00:10:50 The other one that was super important was to, basically — the other defense mechanism that we did against these 250,000 video limit is to say that any query that you send that has no limit clause, we will add a limit clause to it. But in order to be relationally correct, right? Like if we rewrite your query, we cannot just say, you asked for a hundred thousand rows, I give you only 10,000. What we did was we limited it to 10,001. So if the number of results reached 10,001, then we just returned an error saying that your query is too big. So in other words, you are not allowed to query more than 10,000 rows if you are serving the website. If you are coming for doing some analytics and stuff, you are allowed to pull as much as you want, but if you are serving the website, you are not allowed to fetch more than X number of rows.

Roshan Diwakar 00:11:43 Gotcha.

Sugu Sougoumarane 00:11:43 Yeah. So that is another improvement we made from the fact that we were able, so the advantage of parsing means that that limit can be added no matter the complexity of the query. It could be a join, it could be anything, but we understood the query to be able to add the limit correctly.

Roshan Diwakar 00:12:02 Gotcha. Another important concept of distributed databases are sharding. What are vertical and horizontal sharding?

Sugu Sougoumarane 00:12:10 So vertical and horizontal sharding is essentially a growth process that almost every company has to go through. It is almost unavoidable. And the first type of sharding the company usually does is vertical sharding. The definition of a vertical sharding is; if there are multiple tables that are … you start with one database — actually, YouTube was just one database; the first sharding we did was to separate out tables that are not related with each other into separate databases. So, the first sharding that we did at YouTube was to pull out user-related information into its own database. Users and videos were actually moved into a separate database, and there were other secondary data like fraud detection — we had hundreds of tables there; there were so many tables which were in the other database. So that was, for example, that’s what I would define as vertical sharding. Then you are allowed to scale, scale more, but the limitation of vertical sharding is that it is basically a way to buy additional runway. It is not an indefinite scaling solution because eventually that’s what happened is our user database grew beyond what.

Roshan Diwakar 00:13:23 Yeah, exactly. And also like at the most you can think of four or five vertical economies to sharding.

Sugu Sougoumarane 00:13:30 Exactly. So once we hit that limit, we realized that now we have to go horizontal. Horizontal sharding means that one group of users are on one shard, another group of users are on another shard. That becomes more difficult in the case of a relational database, mainly because relational database is inherently complex because it defines relationships between multiple entities. The simplest way I would put it is the biggest challenge that every company goes through, that we also had to go through, was to convert that relational database into a hierarchical database.

Roshan Diwakar 00:14:03 Would you explain what that means?

Sugu Sougoumarane 00:14:04 In other words, the relational term is getting rid of the many-to-many relationships so that you only have one-to-one or one-to-many relationships. It’s not that many-to-many relationships don’t exist, but we weaken those relationships.

Roshan Diwakar 00:14:21 Weaken, as in, in terms of like taking out the constraints?

Sugu Sougoumarane 00:14:24 Exactly. There’s no strong constraints and those relationships are maintained asynchronously by the application, for example. So we have to rewrite the application to not rely on these many-to-many relationships. And once that is done, then there is the mechanism of sharding, which is mostly a database-only operation.

Roshan Diwakar 00:14:45 Gotcha.

Sugu Sougoumarane 00:14:46 The first part is actually rewriting the application to not rely on the many-to-many relationships.

Roshan Diwakar 00:14:51 Gotcha. But even with sharding, like now, the application layer has to have some sort of knowledge about where different sets of data.

Sugu Sougoumarane 00:15:01 Exactly. So that was actually the evolution of Vitess I would describe it, is that when we did sharding at YouTube, the application had all knowledge of the sharding topology is what we called it. And where Vitess is today, is that knowledge is now taken back from the application and lives inside with Vitess, and the application is unaware of that knowledge, does not need to know that information. So that was long evolution that took Vitess from where we were then to where we are today.

Roshan Diwakar 00:15:32 Okay. So that means the application don’t have to worry about the internal distribution of data, it still gets the view of a single database. And all on reduced complexity because of that.

Sugu Sougoumarane 00:15:44 Yeah. So in, in this particular case, if there are many-to-many relationships, those queries will work today. They did not work then because there was, the Vitess parser and analyzer were not sophisticated, but now we have added those sophistications. They will still not work optimally because the fact that you have to go to different shards to fetch different types of data. Right? And you have to have a working cache to be able to join the data together, has all those overheads exist, but it at least will work for you.

Roshan Diwakar 00:16:15 How does indexing work in a single database system? I know when I create an index, queries that use those indexes perform better because MySQL internally uses those indexes. But if data is distributed across multiple databases, does Vitess abstract away creating and using the underlying indexes? If you create an index on say, the logical view of the data?

Sugu Sougoumarane 00:16:43 That’s a very exciting question because there’s a beautiful story behind this . I don’t know if you know about Michael Stonebraker? Yeah. So when I was at Informix, there was this company called Illustra that Michael Stonebraker was the founder of. So his theory at that time was that the index of a database need not be an internal implementation of the database. So, he founded Illustra and talked about these pluggable indexes. I think the, their best product at that time was they built their own pluggable indexes. The spatial index, for example, was a plugable index of Illustra. I think it is now a first-class citizen of Postgres, the pluggable index capability. But that was his invention at that time. Informix acquired Illustra, so I actually moved myself into Illustra just because I was so excited about it. Of course, that kind of went nowhere later for various other reasons. But that idea and excitement has always remained with me. And when we implemented Vitess, we built plugable indexes from the ground up inside Vitess as first-class citizen type of a thing. So Vitess allows you to define your sharding scheme and your secondary indexes as code.

Roshan Diwakar 00:18:04 Would you go a little deeper into explaining both of those?

Sugu Sougoumarane 00:18:08 Yes. So one of the big arguments that people are, they don’t have it as much now, but these used to be huge arguments where we would say, should you shard by range or should you shard by hash? Right? If you’re shard by range, you have this problem, you have hot row problem, you know, those arguments were endless. That’s when it became obvious that there is no single silver bullet in terms of sharding.

Roshan Diwakar 00:18:32 So it depends on the application use case?

Sugu Sougoumarane 00:18:34 Exactly. The application use case. But not only that, the application use case may dictate one type of sharding today and it may change tomorrow. So we needed answers to all these scenarios. So that’s when we came up with sharding function. So the sharding scheme in Vitess is actually what we call as an Vindex, or a V index, is basically a function that takes a value as input and produces an output. And that output is range-based sharding. So if you wanted hash sharding, you just wrote a hash function. The beauty of this is that there was one Vitess adopter that was sharded by vendors. You know, vertical like, they were a multi-tenant system and they were sharding by vendors. And they had a JSON file and when they adopted Vitess, all they did was wrote code that read this JSON file and gave you the output.

Sugu Sougoumarane 00:19:26 Everything else just worked like magic. So that’s the first part of sharding. But that same concept, what we did was we extended it for cross-shard indexes. So in the case of a cross-shard index, you take an input, but it’s not just a function. Instead you go and query a lookup table saying that, where can I find this row? And then it becomes a cross-shard index. Like, for example, it’ll be easier to understand with an example, let me see, think of an example. Let us say I buy from you. So in other words, order, let’s call it the, I place an order with you. Right? The order row has two keys. It has me and it has you. When I make a decision, I have to decide whether the order row lives with me or with you.

Roshan Diwakar 00:20:16 Okay?

Sugu Sougoumarane 00:20:17 So if the order row lives with you, let’s say you are the merchant and I query, show me all the orders that Sugu has bought. I would have to go to all shards because I might have bought from everybody. So this lookup Vindex is what we call it. What it would do is when it inserts the row in your table, it will also insert an entry into the lookup table saying that Sugu has ordered from you. So when you then query the database saying that, show me all the orders Sugu has done, the lookup table will query these three shards, and you’ll find all the orders that Sugu has bought.

Roshan Diwakar 00:20:53 Gotcha. One of the — not disadvantages — like, the challenges with sharding is it may become very one-sided, right? Like, as in like the way data is created, unless you have some randomized keys involved, a single database may get additional rows and some databases may not have new keys at all. So you have to constantly sort of fight this, rebalancing the databases — even, in fact, if you add additional databases, you have to continuously re-shard. How does Vitess take care of re-sharding?

Sugu Sougoumarane 00:21:28 The simple answer is that’s what basically what we did at YouTube is as database grows organic growth, right? So if databases grow organically, all you do is keep re-sharding, which means that keep splitting your shards into two. Like we started at four shards, right? When I left YouTube, we had like 256 shards.

Roshan Diwakar 00:21:48 Gotcha.

Sugu Sougoumarane 00:21:49 And the beauty of this splitting is because every time you’re splitting into two, eventually you can outgrow any growth because it’s purely exponential. Like, initially we were sharding almost every three months, six months, et cetera, right? Once we had hit 256, our thought was we may never re-shard ever again.

Roshan Diwakar 00:22:11 . Oh wow. Ok. ,

Sugu Sougoumarane 00:22:13 It’s like the, you know, the chessboard, the story that you hear the. . .

Roshan Diwakar 00:22:16 Yeah, yeah,

Sugu Sougoumarane 00:22:17 Exactly. At some point of time you basically exceed any possible …

Roshan Diwakar 00:22:21 Astronomical numbers. Yeah, exactly.

Sugu Sougoumarane 00:22:23 Yeah. So that is one scenario. The other scenario, which happens a lot more with the multi-tenant situations: a single tenant grows immensely huge. This goes back to the scenario where I said you cannot predict how your application is going to evolve, right? So, this is a case where the application or the data evolved in a way where just like even putting that one tenant in their own database may not be sufficient. Imagine I’m a small store and suddenly I get Walmart as my customer. They’re like hundred times everything else I have, right? So if you get something of that size at that time, what you have to do is you have to change your sharding scheme. So, this is where the Vindex comes into play, you know? You can now reindex your table and say, now I want to use a different sharding scheme that distributes the data better for me.

Roshan Diwakar 00:23:16 Gotcha. And does this happen live in the sense like, or do we have to bring databases down and…?

Sugu Sougoumarane 00:23:21 It is all live. So the cool technology in Vitess, which is one of the best things we ever built in Vitess, is what we call as the materialization. We call it V replication, but it’s essentially a materialization feature where you can say: please materialize this table in a different form somewhere else.

Roshan Diwakar 00:23:40 Gotcha.

Sugu Sougoumarane 00:23:41 In a sharded setup. So if you don’t like your current Vindex, you can say, rematerialize this table using a different Vindex and then cut over the traffic to the other table when the materialization is complete.

Roshan Diwakar 00:23:52 And all these things can be performed like either through GUI or as a command line?

Sugu Sougoumarane 00:23:57 Command line, yeah. Basically these are what I would call basic primitives within Vitess. And you compose them — actually V application has met an innumerable number of use cases. It has helped with data migration, it has helped with schema deployment, it has helped with resharding, it has helped with re-Vindexing. It’s one of the best technologies we ever built. It is super exciting. It is also used for our boost feature in PlanetScale, where if a query is underperforming, you say, please build a cache for this query so that it performs better.

Roshan Diwakar 00:24:34 And we sort of briefly touched upon topology management. On the website, there’s something called reparenting. Is it related to consensus algorithms, or are they different? Is it the right time to bring CAP theorems and stuff like that?

Sugu Sougoumarane 00:24:48 Yeah, yeah. So what I say may feel like I’m overreaching, but I’m pretty confident of what I’m going to say. Vitess actually uses what I believe is a more generalized form of consensus, which actually allows you to come up with more practically useful topologies. Like, the way I would put it is, today the Cloud is very complicated. The Cloud architectures are very complex. There are zones, there are regions, you know, there are failure boundaries, and people have different tolerances depending on their business and stuff. So there is no one-size-fits-all when it comes to giving durability guarantees. So similar to the Vindex approach, what we have built in Vitess is basically a pluggable durability policy. Which means that I can come and say I want my durability to be — like, the English words for this would be, I want cross-cell durability, right? I want cross-zone durability, or I want cross-region durability.

Sugu Sougoumarane 00:25:50 Or it may be more specific. I have only two regions, and I want cross-region durability. But then if one region goes down, I want to be able to operate in a diminished fashion. So all these flexibilities are provided by Vitess, and they give you the same guarantees that a consensus algorithm like Raft or Paxos would give you. And I actually tried to describe the theory crafting behind this in a Word document — in a Google doc — and somebody said, oh, this is too big, you cannot post it as a blog. So okay, I’ll split it up. So it is now eventually ended up as an eight-part blog series that explains the consensus behind the generalized theory behind consensus algorithms that Vitess uses to guarantee the durability constraints that users want.

Roshan Diwakar 00:26:44 Would you be able to go through that blog post like in a short period — like in five minutes? Would you be able to do that?

Sugu Sougoumarane 00:26:50 Let me try. So the basic thing is that it’s basically a top-down approach. Like, the problem with Raft or Paxos is that they were solutions that were built and adopted by people that had specific problems. But if you flip it around saying what is your problem that you’re trying to solve? And then let’s build a solution that solves it. The way I would define the problem is: what we want is distributed durability, which means that if a node fails — basically if I have acknowledge that I have accepted your transaction and that node fails — that data is somewhere else that I can pick up and continue from. So that is guarantee number one. Guarantee number two is availability, right? If a few nodes in the system fail, my system should not say I’m going to stop. It should continue to make progress as long as it can continue to provide the durability guarantees that you have requested.

Sugu Sougoumarane 00:27:45 So, if you have two zones and I want cross-zone durability and there are five nodes in the other zone, if three nodes go down, it is fine because I can still save my data in one of the available nodes and continue processing. And the third one is automation, which means that if a node fails, you shouldn’t have to wake up someone in the night. The system has to be able to heal itself without data rot. So I think those are the three things that you look for in a consensus system. And if you built a system with those objectives, you may not end up with something like Paxos. And actually, I would say there are essentially two types of consensus system: there is the lock-free consensus system, which is Paxos and the systems that what I call lock-based, which actually Raft is actually more of a lock-based consensus system.

Sugu Sougoumarane 00:28:39 And the fundamental difference between them is the time component. If you look at Paxos, there is no time in the description of the algorithm. It could take a millisecond, or it would take 20 years, it doesn’t matter. In a lock-based system, because lock-based systems tend to prefer the first attempter to win. Like if I say I am going to perform a failover, then I have preference to win that operation, which means that anybody else that comes after me has to wait for me to finish. So you need to put a timeframe because if I attempt and then fail, right? After some time somebody else should pick up the fact that I fail. Right? So that’s the reason why a time component is needed. So lock-based systems add time components and then perform their operations. Whereas a system like Paxos does not have a time component. And most practical systems actually need this time component. The reason is because real life is based on the fact that I cannot wait two years, you know, for you to perform. So after a while you have to put an end to it and move forward. So most consensus systems actually use time component, but it’s slightly deeper topic. Let me know if I’ve gone too far deep.

Roshan Diwakar 00:29:53 This is good because like our listeners definitely they encounter the challenges of consensus systems again and they have to pick between Paxos versus Raft, and like you kind of explained why we need practicality and like most systems kind of pick Raft because of that practicality.

Sugu Sougoumarane 00:30:10 Yeah, totally. And the other reason why Raft is better is because it also includes failure detection, which Paxos doesn’t, and you cannot build a consensus system without failure detection. So that is the thing. The one improvement that we made over Raft is there is actually a paper called Flex Paxos, which actually makes a modification in how you select your quorums, which gives you a huge flexibility in terms of your deployments. Like, for example, at YouTube we had like 75 replicas for each primary — and imagine running consensus algorithms with 75 nodes, right? But we did, and how did we do it is because of this modified quorum definition, which basically the way we run it at YouTube is if one other node other than the current primary has my data that is sufficient for my durability. And in the 10 years that we operated at, you know, millions of QPS, we have never been in a situation where both the node that accepted the write and the node that saved the data fail at the same time.

Roshan Diwakar 00:31:18 And you mentioned all these configurations are customizable, as in you can turn on knobs for the right reliability and safety trade-off that you can make.

Sugu Sougoumarane 00:31:31 Yeah, exactly. And that was actually the reason why I ended up doing this study right? which ended up in this eight series blog post, which is: why is it that we are not using Paxos? Why are we able to give you the same guarantees that a consensus system gives you, not just YouTube, right? Slack runs at millions of QPS, JD apparently runs like 30 million QPS on their biggest sale day. They all use Vitess, and they’re a huge test user and none of them have come and told me ever that they lost data because you know. So that’s why I said like what is the difference between all these algorithms? And that’s how I ended up with this.

Roshan Diwakar 00:32:12 I’ll put a link to your series of blog post series. It’s an interesting read for the listeners.

Sugu Sougoumarane 00:32:16 Yeah, it’s a little deep. Unfortunately, it’s the nature of the beast. It is a very, it’s a very difficult topic.

Roshan Diwakar 00:32:22 . Yeah, the distributed systems are hard.

Sugu Sougoumarane 00:32:25 Yeah.

Roshan Diwakar 00:32:26 And you also have on Vitess site has something called reparenting. Is it something finding the new master or is it a . . .

Sugu Sougoumarane 00:32:35 Yes, that is part of this consensus. So, initially we had this as core within Vitess. Now we have built something called VTOrc, which stands for VT Orchestrator, which performs all these operations. So reparenting, detecting what has failed, and — reparenting is a process of choosing a new primary. So this is all part of that consensus protocol that we now actually officially qualified into this tool.

Roshan Diwakar 00:33:02 And finally, like we can also talk about safety because you have written your own query parser, I think you can do query rewriting, blacklisting, and curricular. Would you want to explain those benefits?

Sugu Sougoumarane 00:33:14 Before going into that, the biggest fear, the biggest anxiety I always had — at least in the early days — was what if I mess up a query rewrite, right? I get a query and I rewrite it wrong. And the interesting thing was at some point of time I even got paralyzed about making changes, right? How do you, like I’m afraid to break the system that is now, you know, serving all of YouTube. You want a feature? I don’t know. I’m not too sure. So over time we started getting scared of making changes to the system, and at that time our tech lead was talking to our tech lead ally, he’s still at the Google by the way. And he said something extremely wise to me, which is we got to trust our tests. So if you don’t feel confident about your changes, write more tests.

Roshan Diwakar 00:34:12 Oh, that’s a very important wisdom for all software engineers.

Sugu Sougoumarane 00:34:15 Yes. So I took that to heart, went down and just blasted the thing with all kind of tests, like any possible failure that I could think of, you know. I wrote it and also made sure that the tests had enough code coverage. So I followed that discipline, like that has never left me from that day on. And, that is a curse that every Vitess engineer carries today.

Roshan Diwakar 00:34:44 So at least now we can be sure of the quality and reliability of Vitess because of that culture.

Sugu Sougoumarane 00:34:51 Yeah. Yeah. So it does, like I wrote five lines of code. You can’t push it; where are the tests, where are the tests? We need to write tests that demonstrate the feature you are doing. Those are separate tests. And you need to show that all failure modes are covered. You need to also show that all meaningful core parts have been covered. So it’s a pretty tall claim, but the thing is, companies rely on the accuracy of the system so there’s no choice. Right?

Roshan Diwakar 00:35:20 Gotcha. And like in terms of like how does one configure blacklisting, and how do you decide when to click query or how to sign? Is it something that the user needs to configure or there is some automation within that?

Sugu Sougoumarane 00:35:35 There is some defaults about — query killing, for example, has defaults and you can tune it up or down. And there are people, most people — believe it or not, most OLTP systems want the same defaults. There are only a few corner cases here and there that want to play. Like for example, Etsy said we don’t want this limit. They completely extended to like not two hours what was like a query killing that was like 30 seconds, right? Etsy said we want to make it two hours. But barring those corner cases, most people are very happy with just the defaults that Vitess provides, which is within 30 seconds. If a query takes longer than 30 seconds, it’s probably not worth it for OLTP.

Roshan Diwakar 00:36:19 Exactly, yeah. So, we talked about all the OLTP use cases. Are there companies or firms that are using Vitess or PlanetScale for even analytics use cases? Because you get the benefit of distributed databases and probably some — what’s the right word? some big data processing capabilities.

Sugu Sougoumarane 00:36:41 Right, right. So we have traditionally always encouraged people to export the data out to an analytics system. But there is actually a few trending changes that I’m noticing that may bring back some analytics into Vitess. The first one is the materialization feature of Vitess, people are starting to play with that. And what that allows them to do is like keep cheap aggregate tables materialized that they can query instantly, and it’s very cheap to do it within Vitess because it’s just one system, right? Iteration and then it’s ready for you.

Roshan Diwakar 00:37:21 It gives you the benefits of a like single store and you don’t have to do all the ETLs.

Sugu Sougoumarane 00:37:26 Exactly. So that is trend number one. Trend number two is more, I read a blog post — I need to find the person — that are saying that this big data people are starting to get dissolution because it is becoming more clutteris what I’m hearing. Most people that have big data, have it big mainly because they don’t know what to get rid of.

Roshan Diwakar 00:37:47 Yeah, exactly. Yeah. In the sense like the complexity increases and it’s always an operational challenge.

Sugu Sougoumarane 00:37:52 Correct, correct. Correct. So I don’t know where that is going though. I mean, that is Snowflake and that seems to be doing well, but as long as there are companies like Snowflake that are giving meaningful value to customers, there is no reason for Vitess to try to get into that area is what I think.

Roshan Diwakar 00:38:09 Gotcha. And in terms of like exporting data, obviously because Vitess has additional metadata and additional intelligence, when you export data, do you export from MySQL or do you export from Vitess? Like, does Vitess has additional integration tools to export data?

Sugu Sougoumarane 00:38:28 Vitess has — the reason why it cannot be MySQL is because it’s a sharded system, right? And when you want to export data from a sharded system, you want unified view of that sharded system. And Vitess gives you a GRPC API, essentially. And it also gives you something very elegant, which is that when you want to do ETL, if you look at these ETL tools, they do some very awkward data dump and then catch up and then resync. And you always worry is it correct? Like, did it miss any rows? Right? You always wonder. So what Vitess is — we call it V Stream — gives you an accurate materialization of the, but what it does is it gives you an API saying that an API where it says you need to insert this, you need to update this, you need to delete this. So that’s kind of what the API gives you and you subscribe saying that I want to materialize this table. It doesn’t differentiate between data that already exists from rows that are changing in the table. You will get events, and if you apply those events when the thing catches up, the entire table will be materialized on your target without you knowing. There is no separate copy phase, there’s no dump phase; this one API fulfills the entire need.

Roshan Diwakar 00:39:48 Okay, that’s great.

Sugu Sougoumarane 00:39:49 And that is the reason why we are able to build so many tools around it because of the simplicity of this API.

Roshan Diwakar 00:39:55 Gotcha. And Vitess is of course is an open-source software and it has earned, it has graduated to the maturity level on CNCF, and PlanetScale is a managed service built on top of Vitess. What additional features does PlanetScale give to users and enterprises?

Sugu Sougoumarane 00:40:14 Yeah, the way I would put it is if Vitess is the engine, PlanetScale is the car. Like people that have to use Vitess, Vitess has a large number of levers. Essentially, you need to employ your team to be able to run Vitess. Whereas with PlanetScale it is readily available, but PlanetScale gives you certain value ads that Vitess does not have. And in my opinion, they are really, really huge. They’re really awesome. The biggest one for example is the schema. The schema flow is so simple that I as a developer, if I want to use a database, I don’t need to go wrestle with, you know, a DBA or — sorry about that. Like, it’s basically become self-service for you. So that is one of the biggest things that PlanetScale add. And the other one is for example, the Boost is a new thing which is built on Vitess’ V stream based on the PhD thesis, which basically allows you to materialize partially materialized queries in memory as a cache and make them more efficient.

Roshan Diwakar 00:41:18 Gotcha.

Sugu Sougoumarane 00:41:19 So there are basically value adds that basically PlanetScale really packages Vitesss in an extremely user-friendly and powerful manner.

Roshan Diwakar 00:41:29 Gotcha. And you talked something about schema changes. Would you go a little deeper?

Sugu Sougoumarane 00:41:34 Yeah, yeah. So in a traditional system, you want to do a schema change, what do you do? You write the schema, right? And then you give it to the DBA and then they review it and they want to say you have to make changes. But after all this happens when the schema deploys, things may still break. It is like the nightmare of everybody, you know, the engineer’s nightmare, the DBA’s nightmare, to keep the database from not failing. And the other problem with the schema changes, it doesn’t really flow well with my development cycle, right? Like I check out code, make changes, and I make corresponding schema changes. So that is a completely different workflow from my checkout flow.

Roshan Diwakar 00:42:14 Exactly, like schema changes has been one of the biggest headaches for software development.

Sugu Sougoumarane 00:42:18 For everyone, right? So now what we have done is we have integrated schema change as part of your developer flow. So when you check out code, we check out a database for you to work with, and when you add schemas, you’re adding schemas to your local database, and just like you merge your code back into your main branch, you also have a workflow to merge the schema back into your main database.

Roshan Diwakar 00:42:45 And does it also provide forward- and backward-compatibility?

Sugu Sougoumarane 00:42:48 That is actually the greatest thing that Vitess can do that I don’t know anybody else can do this because of this materialization feature. You deploy the schema, and things have broken and now you want to undo it. Vitess can undo your schema change with no data loss — to the extent possible, right? There are some things that are theoretically not possible, but what is theoretically possible it’ll do, like for example, you’ve added a column and let’s say your website is broken, you’ve added a column and you’ve inserted, you know, 10 rows and now you want to revert. What about those 10 rows, right?

Roshan Diwakar 00:43:24 Yeah, exactly.

Sugu Sougoumarane 00:43:24 So, what Vitess will do is it’ll reverse replicate those rows into your source table, without the column, but you can at least revert back to your old source table and continue, and then fix forward later as needed. And the technology there is deep because we need to have an understanding — this is where the decision of building the parser comes back, right? Because we can understand queries, we can understand schemas, we can understand the diffs between the source and target table and make all this work.

Roshan Diwakar 00:43:56 Exactly. Yeah. Are there other features that are available on PlanetScale?

Sugu Sougoumarane 00:44:00 Yeah, the other big one is, which is again based on materialization, is a one-click migration, right? Like, let’s say if you are on a different database — like you know, RDS or something — and you want to migrate to PlanetScale. What we can do is you click a button and this V replication will materialize all your tables into the PlanetScale database, and then you can switch your traffic. And while you are switching traffic, we will actually replay these events into your source.

Roshan Diwakar 00:44:28 Gotcha. Okay.

Sugu Sougoumarane 00:44:29 So, if something goes wrong, you can go back and continue. So this makes migration — typically these types of migration take, you know, six months, one year, some companies actually just walk away, they give up because migration, data migration is one of the hardest things. So in our case, in my opinion, we have trivialized that thing with just a click of a button.

Roshan Diwakar 00:44:47 Gotcha. And like, Vitess of course works on MySQL family of databases. Are there any plans for it to sort of work on other relational databases?

Sugu Sougoumarane 00:45:00 Not today. Every once in a while, it is so tempting. Every three or four days, you know, the thought comes up. But it is very important to stay focused and make the current solution work. In my opinion, this is a high-level opinion; not many people would agree with me. An application should not over-exploit a database. An application should use a database for its bread-and-butter features, right? Don’t go into elaborate foreign key because those are eventually going to hurt you if you have to scale. Stick to simple queries, stick to point queries, stick to index scans, right? Those are the bread and butter of a database, don’t do stored procedures. And you know, there’s so many crazy things you can do with a database, but the more you do that, the less flexible you become in your app.

Roshan Diwakar 00:45:52 Yeah. Migration’s going to be a challenge.

Sugu Sougoumarane 00:45:54 Migrations are going to be a challenge. So, if you did something like that, then it doesn’t really matter if you’re using Postgres or MySQL, right? Because you’re just using the database for its — for such things, I would say all these databases do that job well.

Roshan Diwakar 00:46:09 Okay, gotcha. And in terms of like, what are the plan future features for PlanetScale? Any important changes that are coming?

Sugu Sougoumarane 00:46:19 I can’t talk about . We are building lots of cool features and we’ll announce them as they come. But Vitess features, that’s an open-source project. I can definitely talk about those things. And we are making some pretty core changes in the test. Especially the one big change that we are making is make Vitess more approachable. Previously, it was full of manholes, you could go and tweak anything you like and make anything you want. But now that we have seen all these use cases, we know that some of these use cases are very peripheral, and so we are making some opinionated changes and shutting those down so that we can move forward even faster.

Roshan Diwakar 00:46:57 Gotcha. And is there anything that we missed talking about, either of Vitess or PlanetScale, that you want to talk?

Sugu Sougoumarane 00:47:05 The only thing is I haven’t had enough time to talk about how excited I am about PlanetScale, the amount of interest that we are getting. I have not seen excitement like this about a database in the last 15 years. I can tell you. The last time I have seen this much excitement was probably when I was at Informix. Like that time, databases, databases, databases. And I see that now today, which is so exciting.

Roshan Diwakar 00:47:34 And if listeners want to contribute to Vitess or know more about PlanetScale or about you, how can they reach out to you?

Sugu Sougoumarane 00:47:42 I’m reachable on Twitter and the Vitess Slack is one of the best communities we have ever built. Very positive, very welcoming, very accommodative Slack channel. You can go there and ask anything. And for PlanetScale, PlanetScale.com, there is plenty of ways to contact PlanetScale people. And thank you for these really wonderful questions. I completely enjoyed talking to you.

[End of Audio]