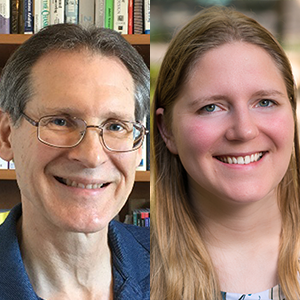

Karl Wiegers, Principal Consultant with Process Impact and author of 14 books, and Candase Hokanson, Business Architect and PMI-Agile Certified Practitioner at ArgonDigital, speak with SE Radio host Gavin Henry about software requirements essentials. They explore five different parts of requirements engineering and how you can apply them to any ongoing project. Wiegers and Hokanson describe why requirements constantly change, how you can test that you’re meeting them, and why the tools you have at hand are suitable to start straight away. They discuss the need for requirements in every software project and provide recommendations on how to gather, analyze, validate, and manage those requirements. Candase and Karl offer in-depth perspectives on a range of topics, including how to elicit requirements, speak with users, get to the source of the business or user goal, and create requirement sets, models, prototypes, and baselines. Finally, they look at specifications you can use, and how to validate, test, and verify them.

Show Notes

- SE Radio 114 – Christof Ebert on Requirements Engineering

- SE Radio 518 – Karl Wiegers on Software Engineering Lessons

- Impact of Budget and Schedule Pressure on Software Development Cycle Time and Effort (from IEEE Transactions on Software Engineering)

- LinkedIn – @karlwiegers

- LinkedIn – @candase-hokanson

- www.karlwiegers.com

- www.processimpact.com

- ArgonDigital

- www.softwarereqs.com

- Software Requirements Essentials practice summary

Transcript

Transcript brought to you by IEEE Software magazine and IEEE Computer Society. This transcript was automatically generated. To suggest improvements in the text, please contact [email protected] and include the episode number.

Gavin Henry 00:00:18 Welcome to Software Engineering Radio. I’m your host Gavin Henry. And today my guests are Karl Wiegers and Candase Hokanson. Karl Wiegers is Principal Consultant with Process Impact, a software consulting and training company in Portland, Oregon. He has a PhD in organic chemistry. Karl is also the author of 14 books on software development and other topics. His most recent book is Software Requirements Essentials: Core Practices for Successful Business Analysis. And Candase is a co-author. Candase Hokanson is a Business Architect and PMI-Agile Certified Practitioner at ArgonDigital, a software development professional services and training company based in Austin, Texas, with over 10 years of experience in product ownership and business analysis. The latest book is Software Requirements Essentials: Core Practices for Successful Business Analysis. Karl and Candase, welcome to Software Engineering Radio. Today we’re going to talk about software requirements essentials and, as luck would have it, you’ve both written a book on that topic. So I’d like to start with a brief review of what requirements and business analysis mean and spend up to 10 minutes on five different parts of requirements engineering. Actually, is requirements engineering the correct term?

Karl Wiegers 00:01:36 Well, I think it’s a slightly optimistic term, but it’s the term that I use. The term that’s used more often in recent years for this domain is business analysis, which encompasses things beyond just requirements. But requirements engineering is where my heart’s at.

Gavin Henry 00:01:53 Excellent. So what do we mean when we use the word requirements? Who would like to take that question?

Karl Wiegers 00:01:59 Well, I’ll start with that, Gavin. And I think this is a great place to start today because people interpret the word requirements in various ways. So I always have to begin my training classes with some definitions. So at least for the time we’re together during the class, we’re all talking about the same kinds of things. So I think of requirements as encompassing two major perspectives. First, there’s a description of stakeholder needs and constraints, and second, there’s a description of the capabilities and characteristics of some solution that we’re trying to build that we expect will satisfy those needs. But people sometimes talk about requirements as if they’re all one big monolithic kind of thing. I think it’s better to put some adjectives in front of the word requirements to distinguish these various sorts of requirements-related information. We talk about business requirements, user requirements, which are sometimes generalized to stakeholder requirements, but I don’t like that term very much because as far as I can tell, all requirements come from some stakeholder. There are solution requirements, which are typically subdivided into functional and non-functional requirements. Non-functional requirements include things like quality attributes and constraints. We have data requirements. You might talk about features, use cases. There are all sorts of things that people talk about in this domain. So I think it’s valuable to differentiate those with some adjectives. And these various kinds of information come from multiple sources at different stages in the lifecycle of your project, and they’re represented in a variety of ways.

Gavin Henry 00:03:31 Thanks. We’ll try to break them down in the next sections. I think this one is for Candase. What is business analysis, Candase?

Candase Hokanson 00:03:40 Oh, that’s a big question because, as Karl mentioned, business analysis is a rather large umbrella, but in my opinion, at the highest level, business analysis is really everything that it takes to understand a project’s or product’s or even a company’s business problems and business objectives, and from there define a solution that would solve that problem or achieve those objectives. From there, business analysis decomposes that solution into the set of requirements knowledge, like Karl mentioned, that can be built, tested, and deployed to meet those business objectives and solve those business problems. So obviously that includes a lot of activities and artifacts, and the software requirements is one piece, but a very critical piece of that.

Gavin Henry 00:04:24 Thanks. And your book discusses 20 requirements practices, so we’re not going to be able to cover them all here, but I’ve picked a good chunk of them and broken them up into five sections where we can hopefully spend about 10, 15 minutes on each. So the first one I have here is requirements elicitation. I said that correctly?

Karl Wiegers 00:04:45 Yes.

Gavin Henry 00:04:46 Who would like to explain what that means without looking up?

Karl Wiegers 00:04:50 Well, that’s another good place for a definition. The first thing we have to do regarding requirements is to get some, and people often talk about gathering requirements, but that’s a little restrictive. The term requirements elicitation is broader and more accurate. I mean, of course there’s an aspect of gathering or collecting requirements out of people’s brains and documents and existing products and all other sources, but elicitation goes beyond that because there’s also a lot of discovery and invention that takes place during requirements elicitation. So you can’t just ask people what their requirements are and expect to get a useful or very complete answer. The business analyst is really a guide, or the requirements engineer, if we’re being optimistic, they’re a guide that leads this requirements exploration. And people also need to understand that elicitation, like the rest of the stuff that we’re talking about in this general broad category of requirements development, that’s an incremental and iterative process. You can’t simply ask people what they want in some workshop, write it down, and then come back with a good solution sometime later. It would be great if it were that simple, but it’s not. So we need this business analyst to guide the process and try to ask the right sorts of questions, explore the right kinds of information to get the knowledge that we need to build a good solution.

Gavin Henry 00:06:12 And this business analyst is a person that you just mentioned?

Karl Wiegers 00:06:16 Well, yeah, although I keep getting asked questions about, well, is AI going to take our jobs and how is AI going to affect business analysis? And that’s actually something that I haven’t paid much attention to. There are other people doing that, but for now, yeah, it’s people.

Gavin Henry 00:06:32 And has this evolved as software methodology has transitioned from Waterfall to Agile, for example? Because you mentioned it was an iterative, constant elicitation.

Karl Wiegers 00:06:46 Well, it is, even if you’re doing, I mean, I get really tired of hearing people talk about Waterfall versus Agile as if those are the only two possibilities. And of course they’re not. Those are two extremes of the spectrum. And one thing you’ll learn as you get older is that either terminal position of a spectrum is almost always silly, and being somewhere in the middle is almost always more reasonable. But certainly, we don’t want to let people think that you can just do this once at the beginning of a project, go away and come back later with the right answer. But nor can we just have a couple of conversations as we go along and then come back for a couple more conversations and expect to get a broad picture of what we’re building and see all the pieces fit together. So yeah, it’s an ongoing process, and partly it’s ongoing because you get some questions answered, you go away, and you think about it and you realize there are more questions or I didn’t fully understand the answer. So I think of requirements development as progressive refinement of details. You get a certain amount of information initially and then you have to elaborate that at the right time, in the right level of detail, with the right participants. And so I think the concepts apply, whether you’re talking about a Waterfall or Agile approach or anything in between. It’s just a matter of how much you do it and who’s involved and what you do with the information you get.

Gavin Henry 00:08:08 And the gathering the requirements is, as I understand it from both sides, so the user has a problem or the business has a problem, you’ll try and document that or wherever it is, but then more requirements will pop up from your side. So it’s driven from both sides, isn’t it? The elicitation.

Karl Wiegers 00:08:28 Yeah. Candase has got a lot of experience with those kinds of Agile projects. Do you want to talk about that Candase?

Candase Hokanson 00:08:34 Yeah, it’s just, as Karl mentioned, it’s kind of how you do it and when you do it. You’re doing the elicitation maybe in smaller chunks as you’re preparing for an iteration versus maybe a whole release. But you’re still having to do the same fundamental activities of getting that information out of people who may have an idea of what they want, but we still have to figure out if that’s really what they need, if that’s going to solve their problem. And then getting that into a format that can actually be consumed by the developers. So you still have that iterative and ongoing conversation with your users and with the developers, just, at least in most Agile projects I’ve worked on, in kind of smaller chunks.

Gavin Henry 00:09:12 That leads us on to my next question perfectly, which is, do users always know what solution they need? And by the sounds of it, no.

Candase Hokanson 00:09:19 Yeah. Anytime I see a word like always or never, the answer is almost certainly, well, not always; there’s going to be an edge case. But in this case, I would almost go so far as to say a lot of the time users don’t really know the solution that they need. They may have an idea or a vision of what they think will meet their needs, but then it takes a strong and skilled business analyst to work with their users and understand the full problem space to say, yep, that’s really going to be a correct solution to meet your problem. Or yeah, that looks shiny or sounds good, but it’s not really going to do what you think it will do. And so, as Karl talked a little bit about eliciting and getting information out of people, one of the ways we like to do this is by asking about usage. Like, what are the things you need to do today to get your job done? And then we can help translate that into what it might look like in the future.

Gavin Henry 00:10:11 Yeah, because I’ve always wondered, could companies bring a product onto the market for the first time? Take for example, Apple or someone, it’s brand new, it’s being invented. Where are the requirements coming from there? It must be some problem space they’re trying to address or, if it’s brand new in the market, is it just better than what was there before or it’s a strange one.

Candase Hokanson 00:10:33 . Yeah. And consumer products definitely are more likely trying to exploit opportunities and they see a niche somewhere, maybe where some maybe even unspoken or like, we didn’t know we needed iPhones or smartphones until they existed.

Gavin Henry 00:10:47 That was exactly my thought, partner.

Candase Hokanson 00:10:50 And now we can’t live without them. And so in that case it was probably a, in case of Apple, just being smart and saying, Hey, people have cell phones today, we can make them better. There’s an opportunity, we could be the first to market. And because they were, I mean, and it really took off.

Karl Wiegers 00:11:05 Candase touched on a really important point there that I want to emphasize, Gavin. She mentioned usage, and that’s one of my hot button issues when it comes to requirements: you can take two approaches to exploring requirements. One is to focus on the product and ask people, what features do you want? The other is to focus on usage and ask people what they need to do with it, as Candase brought up. And I think that is an absolutely essential point, and I think that would be applicable not just to business situations where we can talk about how people do their job now and how that might be different. You can also do that with new product development, where we think not in terms of the features that somebody might say, oh that’s cool, or you put it in because someone figured out how to do it and perhaps someone will find it useful. Sure, some of that’s fine, but let’s think about what people want to do with a device. And as Candase mentioned, they may not even know they want to do that, but let’s try to imagine how that might work. Think about what people would do if they had this product available. And I believe that in almost every case, a focus on usage is more likely to yield accurate and valuable understanding of requirements than a focus on products and their features.

Gavin Henry 00:12:19 Yeah, that was one of my next questions: do users always know what they want to do with it versus what the solution is they need? So thanks for that. What information do we need first? I think there’s a nice chapter in your book called Laying the Foundation, which I’ve kind of skipped over, but obviously we need to do that. Can you take us through that?

Karl Wiegers 00:12:39 Yeah, I can comment on that. We do have six sections of practices. These 20 practices are grouped into six categories. Five of them address the major subdivisions of requirements engineering, that is elicitation, analysis, specification, validation, and management, which I think we’ll get to today. But we also have this chapter called Laying the Foundation, because we describe five practices that I think are fundamental to putting into place the basics upfront about what we need to do to understand how to proceed with the rest of the project. So the first practice really addresses this question of let’s make sure we all understand the problem before we converge on any particular solution. Next, let’s define our business objectives so the participants all understand why we’re even undertaking this project. It’s important also to define the solution’s boundaries, which helps the team make scoping decisions. What’s in, what’s out? You can’t do everything. So let’s draw those lines.

Karl Wiegers 00:13:37 So all of that will then help you identify and characterize the various stakeholders who need to contribute to the requirements process. And little safety tip, there are almost certainly a lot more stakeholders than you think there are initially. So that’s worth thinking about. And finally, from those stakeholders, we can then identify the empowered decision makers who are going to have to handle the many types of requirements-related decisions they’re going to encounter. And let’s figure out who those people are and how they’re going to make decisions, preferably before they encounter their first major decision.

Gavin Henry 00:14:14 Yeah, I think we touched upon some of this when we last spoke. So just for the listeners that haven’t heard that show, it was Episode 518, when we spoke on more general software engineering lessons based on your 50 years of experience at that time. Excellent. So we’re going to dig into the five terms you mentioned. Requirements elicitation is what we’ve almost finished. Then analysis, requirements specification, validation, and management. Before we move on to the next section, I’d like to pick an example that we can pull apart moving forward. Would either of you be able to give me an example project we could talk about for requirements analysis, our next section?

Candase Hokanson 00:15:00 Sure. So this is a project I worked on several years ago. We were completely rebuilding one of our clients’ credit adjudication platforms. So how they get information from customers, go to credit bureaus, determine if someone is creditworthy, and then give them a decision. So we had set the scope of doing kind of a major re-platform of their credit adjudication process to make it more flexible going forward. And to do that we had to make major database schema changes. And so we can talk about this through the various areas today as well. But in terms of elicitation and kind of understanding the problems, one big hurdle we ran into close to launch was that we had to make these large database schema changes to support our project. And probably a couple of weeks before our go-live date, our main stakeholder came and said, no, we couldn’t change the database schema.

Candase Hokanson 00:15:52 If we had just taken her at face value, this would’ve completely derailed our project and sent us back to square one in terms of defining what to do. So instead of just saying, yep, let’s start over again, I worked with her, did some elicitation to understand why she was so resistant to changing the database schema. Well, as it turned out, she had several kinds of SQL queries that she had written based on the database that were driving all of her regulatory reporting. So once we knew this, instead of going back to square one, we actually offered to rewrite all of the SQL queries so that her reporting would work with the new database structure and we could launch on time. And thus we saved that project, at least at that point, and we were able to move forward. So we can continue to talk about that one as we go.
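As an illustration of the kind of rewrite Candase describes, the sketch below shows one way to confirm that a reporting query rewritten for a new schema returns the same rows as the original. It is a minimal example under stated assumptions, not the project's actual approach: the table names, columns, and sample data are all hypothetical, and an in-memory SQLite database stands in for the real platform.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    -- Hypothetical legacy table the original report was written against.
    CREATE TABLE applications (id INTEGER PRIMARY KEY, applicant TEXT, decision TEXT);
    INSERT INTO applications VALUES
        (1, 'A', 'approved'), (2, 'B', 'declined'), (3, 'C', 'approved');

    -- Hypothetical new schema: decisions moved into their own table.
    CREATE TABLE applicants (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE decisions (applicant_id INTEGER REFERENCES applicants(id), outcome TEXT);
    INSERT INTO applicants SELECT id, applicant FROM applications;
    INSERT INTO decisions SELECT id, decision FROM applications;
""")

# The report as originally written against the legacy table.
OLD_REPORT = """
    SELECT decision, COUNT(*)
    FROM applications
    GROUP BY decision
    ORDER BY decision
"""

# The same report rewritten against the new structure.
NEW_REPORT = """
    SELECT d.outcome, COUNT(*)
    FROM applicants AS a
    JOIN decisions AS d ON d.applicant_id = a.id
    GROUP BY d.outcome
    ORDER BY d.outcome
"""

old_rows = conn.execute(OLD_REPORT).fetchall()
new_rows = conn.execute(NEW_REPORT).fetchall()

# The rewrite is only acceptable if the report output is unchanged.
assert old_rows == new_rows
```

Comparing the two result sets on the same data turns the stakeholder's constraint (her reporting must keep working) into a check that can be run before launch.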

Gavin Henry 00:16:38 Excellent. And I guess it does come down to how flexible they are on that, yes or no, doesn’t it? If she said, no, I’m not going any further into detail, then there’s not a lot you can do, is there?

Karl Wiegers 00:16:53 There’s a lot of good stuff in what Candase just said, but there are two points I wanted to reinforce. One is the nature of constraints. We talk a lot about requirements, but we also get constraints from our various stakeholders that we have to understand and document and communicate to all the other participants, because those limit some of the choices that we can make, either in terms of what requirements we can do or what the designers and developers can do. And in this case, saying no, you can’t touch the database, that’s a pretty major constraint. In this case it could have been a showstopper constraint, but we need to know about those things. And the second point I want to make is that Candase mentioned that she asked why, and that’s a wonderful question to ask, especially if you hear something that kind of surprises you or sounds like it’s maybe going to limit what we need to do. Let’s understand why. Because people like to say, well, the customer’s always right, so we have to do what they want, and that’s just silly. We all know the customer’s not always right, but the customer always has a point. So we have to understand and respect that point and then figure out what to do moving forward.

Gavin Henry 00:18:02 And you mentioned blockers or showstoppers there. With constraints, the two usual ones are time and money, aren’t they? With regards to business?

Karl Wiegers 00:18:12 Yeah, those are project-level constraints, and then there can be requirements-level constraints. There are lots of kinds of constraints. We should probably put some adjectives in front of those too.

Gavin Henry 00:18:21 Okay. So I’m going to move on to requirements analysis. Who would like to explain what that is?

Karl Wiegers 00:18:28 Well, I can take a shot at that. In a way, I used to think requirements analysis just sounds like something that somehow happens if you stare at the requirements long enough. But in reality, there are some specific things we can do around requirements to fully understand the requirements that we have. That’s what analysis is all about. So requirements analysis involves first ensuring that the needs of all the stakeholders are understood and documented appropriately. And the outcome of a successful analysis gives us confidence that we can build a satisfactory solution to meet those needs. We can define it, we can agree upon it, we can build it, and we can test it. So there are a lot of things to look for when you’re analyzing requirements. For example, we need to understand each requirement’s origin and rationale.

Karl Wiegers 00:19:19 That gets back to why again, so why is this in there? And there can be a lot of reasons and some of them are good. Some maybe mean, oh I guess we don’t need to do that. A big part of analysis involves decomposing requirements into appropriate levels of detail and then deriving requirements from other information such as business rules or policies. Those aren’t requirements, but they can certainly turn into a lot of requirements. I mean, just think about security. You can have a very concise security requirement, but that turns into a whole lot of functionality. We need to make sure that any exceptions that could take place are identified, they’re handled. We need to define acceptance criteria and so forth. So there’s a lot to think about besides just writing down each requirement as clearly as you can. Analysis goes down to another level.

Gavin Henry 00:20:07 And you talk about, once the analysis is ongoing or complete, the term requirement sets. Could you take us through that?

Karl Wiegers 00:20:17 Yeah, there’s a list of requirements, and a business analyst can find many potential problems by looking at individual requirements in isolation. I’ve kind of done that as a consultant over the years. I’ve reviewed a whole lot of requirements documents for clients and I can find a lot of problems just by looking at a specific requirement. You can find ambiguities and things like that that suggest maybe there’s a need for more work on it. But requirements don’t exist in isolation. They exist in the context of lots of other requirements. So that’s what I mean by a set of requirements. And when you’re analyzing sets of related requirements, you have to look for some different things. You need to look for omissions. Is there a requirement missing from this set of related things, or is there a piece of information missing from certain requirements?

Karl Wiegers 00:21:05 Look for conflicts and inconsistencies. You can have a parent requirement that conflicts with its children, or you can have one requirement that says do A and another one that says do B, but you can’t logically do both. Look for duplications; that’s a whole conversation in itself. There are dependencies one requirement might have on another. And please keep in mind, when we’re talking about this general term requirement here, I don’t really care what kind of information you’re using on your project. You might be using user stories as your atom of requirements, but that user story could have dependencies on others as well. So if you don’t like the word requirement for your project, then globally substitute whatever term is your local terminology. Priorities are relative. You can look at one requirement and say, well, that’s high priority or X priority, but that’s a relative statement. Compared to what? Compared to the others in the set, and so forth. Missing requirements, incidentally, are perhaps the hardest kind of errors to find because they’re not there, they’re invisible. So they’re kind of hard to see. So these are some of the things you look for if you’re trying to analyze a set of related requirements.
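Some of the set-level checks Karl lists can be partly automated once requirements are stored as structured data. The sketch below is a minimal illustration, not a method from the book or the episode: the `Requirement` fields, the `analyze_set` helper, and the sample requirements are all hypothetical. It flags dependencies on requirements that are not in the set (a hint at an omission), requirements with identical normalized text (possible duplicates), and requirements ranked above something they depend on (a priority inconsistency).

```python
from dataclasses import dataclass, field


@dataclass
class Requirement:
    req_id: str
    text: str
    priority: int  # 1 = highest; only meaningful relative to the rest of the set
    depends_on: list[str] = field(default_factory=list)


def analyze_set(reqs: list[Requirement]) -> dict[str, list[tuple[str, str]]]:
    """Return simple findings for a set of related requirements."""
    by_id = {r.req_id: r for r in reqs}

    # Dependencies on something that is not in the set: a possible omission.
    dangling = [
        (r.req_id, dep) for r in reqs for dep in r.depends_on if dep not in by_id
    ]

    # Identical text after normalizing case and whitespace: a possible duplicate.
    seen: dict[str, str] = {}
    duplicates = []
    for r in reqs:
        key = " ".join(r.text.lower().split())
        if key in seen:
            duplicates.append((seen[key], r.req_id))
        else:
            seen[key] = r.req_id

    # A requirement ranked above something it depends on: a priority inconsistency.
    inversions = [
        (r.req_id, dep)
        for r in reqs
        for dep in r.depends_on
        if dep in by_id and by_id[dep].priority > r.priority
    ]
    return {"dangling": dangling, "duplicates": duplicates, "inversions": inversions}


reqs = [
    Requirement("R1", "Export report as PDF", 1, ["R2"]),
    Requirement("R2", "Generate monthly report", 2),
    Requirement("R3", "export report  as pdf", 3, ["R9"]),
]
findings = analyze_set(reqs)
```

Checks like these only catch mechanical problems. Logical conflicts and genuinely missing requirements still need a person who understands the domain to review the set.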

Gavin Henry 00:22:18 And Candase, when you spoke about the database schema and the user or the business needing it for compliance reasons, there’d be quite a few parts and pieces of data that would need to be kept together. So that would sort of feed back into this, wouldn’t it, once you said we’ll rewrite these queries but we need to keep X, Y, and Z together?

Candase Hokanson 00:22:40 Absolutely, yeah. In terms of the requirements set, we had the features that described the changes to the database schema. And so we relied heavily on that when we were analyzing the SQL queries to rewrite them.

Gavin Henry 00:22:53 And what are requirement models?

Candase Hokanson 00:22:56 Oh, one of my favorite things. So requirement models are really just any way to visually represent requirements information. A lot of people, when we talk about requirements, use natural language text, but a lot of times that’s not the best way to represent information. So these could be tables, pictures, wireframes, or even models we’re familiar with from, say, a UML-type modeling language, like process flows or data flow diagrams. But they can be really helpful in finding requirements and identifying those gaps in your requirements that Karl mentioned in terms of things you were looking for in that requirement set. I’ve also found it’s a really great tool for elicitation as well. It’s an interesting psychological aspect of humanity that we’re much quicker to correct something when we’re shown something than to be able to make something out of nothing. So if we have those requirements models that show a view of the world, then our stakeholders can tell us if they’re correct or not.

Gavin Henry 00:23:54 And how would you create those models? Just sketch pad and paper or you know?

Candase Hokanson 00:23:58 It really depends on what information you are trying to show visually. So I’m a big fan, we use Lucidchart here at ArgonDigital for our more formal models. For wireframes, certainly just a sketch pad. Back in the days when we got to be in person, I loved just moving sticky notes on a whiteboard.

Gavin Henry 00:24:16 Yeah, surprisingly effective isn’t it?

Candase Hokanson 00:24:18 Yep. Because you can move them around if it’s wrong, it’s not set in stone, and then you can take a picture of it and kind of formalize it more later. And so it’s just really powerful to get that information out of people as well as to do that analysis. And one of the things I found out, I guess a couple of years ago, is that not everybody thinks the same way. I mean, it sounds obvious, but until you actually think about it, you grow up thinking everybody thinks like you. And this was true for myself as well. I realized a couple of years ago that I have aphantasia, which is the inability to see pictures in my head. I just kind of assumed nobody could see pictures in their head, but I was wrong. And so for me, learning that kind of helped turn on the light bulb of why I love visual models so much, because I can see it in person, so to speak, right on the screen or on paper or on the whiteboard, versus trying to visualize it in my head.

Gavin Henry 00:25:12 Interesting. Thank you.

Karl Wiegers 00:25:13 I’m a big fan of modeling as well. I took a course in structured analysis and design many years ago, 1986 I think. And that just totally transformed how I thought about software development. Tremendously powerful tools. There are lots of kinds of pictures we can draw, standard diagrams from these various modeling languages like UML or structured analysis or IDEF0, and there are a lot of them. And one thing I always recommend to people is please do not invent your own modeling notation, because these are communication tools. It’s a language, and we all have to be able to speak and read the same language if we’re going to communicate effectively. If you draw some kind of picture and nobody knows what an arrow with two double-headed arrows means, then that’s going to be a problem. So there are lots of standard kinds of models, and we should learn about them. This should be a standard practice for every business analyst, and I suggest that they use the standard models in almost every case, because I think it will be quite rare that they want to depict some information and nobody has yet figured out a picture to draw for that.

Gavin Henry 00:26:18 And with regards to this section of requirements analysis, can you give me an example when this step is not needed or is it something that is always needed?

Candase Hokanson 00:26:28 So I believe that no matter what project or product you’re working on, some level of requirements analysis is necessary. Now the level how much analysis you do and whether you’re using specific visual models or whether you’re creating prototypes versus I’ve done this a thousand times so I’m able to do the analysis ad hoc in my head. That could definitely vary. But I think you are still doing some level of analysis for every single requirement that we’re writing. Now some cases where you might use less analysis might be if the product or product team are well established and they have consistent delivery velocity. So maybe then you’re using less time on analysis but you’re still doing some. On the other hand, if you’re doing new or risky or unknown projects or unknown features, you’re going to spend a lot more time on analysis to really understand that problem space.

Gavin Henry 00:27:20 And in those situations they might call for a prototype because it’s brand new.

Candase Hokanson 00:27:26 Absolutely. Especially if you’re building something that doesn’t exist. Prototypes are a great way to get that early and iterative feedback by putting something in front of users that they can either imagine themselves interacting with or in some cases actually interact with. So absolutely, prototypes should at least be considered on all projects.

Gavin Henry 00:27:45 This point as well because that would also give you, more requirements questions to ask the users or the business once you tried to build a thing.

Karl Wiegers 00:27:55 Exactly.

Gavin Henry 00:27:55 For your example Candase, did you need to do a prototype or is it more?

Candase Hokanson 00:28:00 Because this one was more API, it was more backend. Because it was all automated, we didn’t have as many prototypes, but we did have a simple UI where the users would view information and be able to reprocess different adjudications. And so for that case we did have a very simple wireframe prototype that we used in our early analysis.

Gavin Henry 00:28:21 Yeah, because a prototype is something that you can throw away.

Karl Wiegers 00:28:24 Sometimes. Sometimes it grows into the product. You just have to make sure you understand upfront what are we going to do with this prototype? Are we going to use it to gain knowledge and then throw it away and build the real thing? Or are we going to use it as a starting point which will evolve into the product? The strategy that you take with those two scenarios is very different because if it’s the latter where you expect it to become the product, you better build it with production quality code from day one. Instead of getting into a situation where someone says, wow, this looks great, I think you’re done. Can we have this? And the intent was not to do that and then you have a problem.

Gavin Henry 00:29:02 When would that decision be made, whether you, when you start that prototype, whether it’s going to be throwaway?

Karl Wiegers 00:29:08 Well I would do that at that point because I think of a prototype as being an experiment. You’re taking a tentative step into the solution space saying if we understand the requirements correctly, then here’s a way we might approach building it. Or maybe you don’t understand the requirements thoroughly and so you build the prototype to help you explore those further and plug gaps in your knowledge. So view it as an experiment and understand what your objective is. How will you determine if the prototype has served its purpose and that thought process should let you decide, okay, what do we do with it when we’re done?

Gavin Henry 00:29:46 Excellent. So I’m going to move us on to our third section requirement specification. Now the word specification for me, I think of like RFCs, Request for Comments or some big hideous massive thing that tells you how to do exactly everything. Is that what we mean?

Karl Wiegers 00:30:05 Well you could, and in certain situations you need that kind of information because if you’re going to be mailing your requirements off to some offshore development company to build it, they’re going to have fewer opportunities perhaps for day-to-day quick responses to questions and clarifications and elaborations and they need more details. It really boils down, like so many things here, to a question of risk. And I think when people look at these books that talk about requirements, it can be a little overwhelming. 10 years ago I wrote the third edition of my book Software Requirements , which was co-authored with Joy Beatty and that turned out to be a rather large book. It’s about 645 pages long. But that doesn’t mean you have to do all that stuff. What you need to do is say, here’s a bunch of tools and techniques and practices and we will use whichever of those in whatever level of detail and timing is necessary to manage the risk on our project.

Karl Wiegers 00:31:08 And the risk we’re concerned about is either building the wrong solution entirely or having to do a lot of rework to then fix whatever solution you have or whatever partial solution. I hate rework, I hate unplanned rework, doing over something that we’ve already done. So I think people need to keep in mind the question of how far wrong we could go with this if we don’t do analysis or if we don’t write down our requirements in some structured way to communicate with other people. So I think requirement specification is really important, but we should remember it has two meanings. First, it refers to the process of recording what you learn about requirements in some persistent and shareable form. And then the second sense of the term, which is what I think you were thinking of Gavin, is whatever container you store that requirements knowledge in. It could be big, it could be small, different problems require different approaches and it doesn’t have to be a document. It could be a document or a spreadsheet or a database or a commercial requirements management tool or a bunch of sticky notes on the wall, whatever works for your team.

Karl Wiegers 00:32:16 But we’ve got different kinds of information that we need to record. And I think it’s important to do that simply to ensure effective communication both among the various stakeholders and across time. Human memories are imperfect and incomplete. They fade and distort over time and strangely other people cannot yet access them. So I think of a requirement specification in some form serving as a persistent group memory for this critical requirements knowledge.

Gavin Henry 00:32:46 Yes, it’s an agreed way to document and communicate.

Karl Wiegers 00:32:50 Right.

Gavin Henry 00:32:51 Just a note for the listeners, Karl mentioned a book that he did 10 years ago. We also did a show 15 years ago, a very early one, Episode 114 with Christof Ebert on Requirements Engineering. So once you finish this, it’d be good to listen to that one after and see how far we’ve come along. Okay, so I think we’ve covered off my next question, which is how formal is this specification? Which is kind of because of my misunderstanding of the term.

Karl Wiegers 00:33:21 Yeah. That’s worth elaborating on just a second if I could, Gavin. How formal is it? The answer is the same as, unfortunately, with so many questions around software: well, it depends. It should be exactly as comprehensive and formal as it needs to be to manage those risks of having to do a bunch of rework because we got it wrong. It’s easy to go wrong in either direction of formality and comprehensiveness. You can try to pin down every requirement in detail upfront in the classic, although I think rarely practiced in reality, Waterfall approach, or you can rely on telepathy, clairvoyance and memories instead of writing anything down. And both of those approaches are likely doomed to fail. So let’s use some of the things that Candase was getting at, how complex the problem is, how long it goes on and that sort of thing, to try to determine how much effort we should put into recording this versus just saying, okay, I think I know what you mean, I’ll call you when I’m done.

Gavin Henry 00:34:21 So we’ve answered the question, how formal is this? It depends. And also what the specification looks like. This isn’t an RFC format, this is just what works for the project. And also what you touched upon there, Karl, about how detailed the specification needs to be potentially goes back to what you said before with the limits of time and money. You don’t want to spend the whole project trying to document the project. So Candase, with your example project, what was your agreed way to document and communicate under the term specification?

Candase Hokanson 00:34:58 So I actually had worked with this client for a lot of their projects to set up how they would structure their requirements and requirements specification. So we were using a modified SAFe, or scaled Agile, approach. So we organized our requirements into epics and features down to the user stories that the developers would implement in their iterations. And then we would group those together into the releases and we were using a requirements management tool at that time to organize everything. And so that was a really great way because it was a centralized repository, everybody could access it. It had a really robust version history so we could tell if someone tried to change our requirements, which happened from time to time. However, one of the things we did find is that like most requirements tools or even some specifications, they tend to be point in time. So this was the user story when we developed it, but that requirements management tool won’t tell you we built that in sprint one, but that was changed by another story in sprint three or a bug that happened later. So I’ve been a really big fan, actually from my civil and structural engineering days, of something called an as-built. So it’s kind of lightweight documentation that says at the end of the release, what did we actually deploy? What is the product as built and in use versus the myriad of stories that we built to support that release.
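
The as-built idea described above can be illustrated with a minimal sketch. It assumes a hypothetical log in which every delivered story or bug fix records the capability it defines or changes; the names `Change` and `as_built` and the sample data are illustrative assumptions, not anything taken from the project discussed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Change:
    """One delivered story or bug fix (hypothetical record format)."""
    sprint: int
    item_id: str
    capability: str   # the behavior this item defines or changes
    behavior: str     # what the product does after this item ships


def as_built(changes):
    """Fold point-in-time items into the current state of each capability.

    Items from later sprints override earlier ones, so the result describes
    what is deployed rather than the history of how it got there.
    """
    current = {}
    for change in sorted(changes, key=lambda c: c.sprint):
        current[change.capability] = (change.behavior, change.item_id)
    return current


log = [
    Change(1, "STORY-1", "reprocess adjudication", "manual reprocess from the UI"),
    Change(3, "STORY-9", "reprocess adjudication", "reprocess allowed for supervisors only"),
    Change(2, "STORY-4", "view adjudication", "read-only detail page"),
]

for capability, (behavior, source) in sorted(as_built(log).items()):
    print(f"{capability}: {behavior} (last changed by {source})")
```

The point of the fold is that a reader of the as-built summary sees one current statement per capability, while the individual stories remain available as history.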

Gavin Henry 00:36:21 So you used a tool requirement gathering tool just for the listeners, is that commercial thing open-source thing or a Google doc?

Candase Hokanson 00:36:29 There are many, many requirements management tools. In fact, ArgonDigital has done I think two tool studies of I think up to a hundred different requirements management tools that exist in the marketplace.

Gavin Henry 00:36:41 Oh, if you’ve got some links, I’ll put them in the show notes.

Candase Hokanson 00:36:43 Yeah I can dig those up. Some of them are commercially available, some of them are open-source and some of them cost a lot of money. In this case we were using Microsoft’s Azure product, their requirements management tool. But there are many others. Jira, Rally, I can’t even rattle off all of the different tools that exist out there.

Gavin Henry 00:37:02 Thank you. And I guess from what you are saying, Karl, the agreed-upon way to document and communicate the specification isn’t applicable to only large teams or large companies?

Karl Wiegers 00:37:16 No, not at all. I don’t know if you’ve ever had an experience like this, but I worked on a project once, many years ago. There were just three of us on the project. I was leading it and I was doing the requirements, which were fairly informal. We didn’t have anything as structured as what Candase was describing. This was in the, oh geez, mid 1980s, before I really appreciated how important this was. And we would have a weekly meeting and then we’d go off and work on what we agreed to work on for the week and we’d come back and reconvene to continue. And on two occasions we walked away, the three of us, from a meeting with different understandings of what we had decided to do. And so we worked in different directions for that next week and that didn’t really work out very well. So even having rudimentary recording of the knowledge and the decisions that are made, I think is really important. Because people’s memories simply are not as reliable as everyone likes to think they are. Have you ever had a conversation where some decision was made or agreement was reached and people walked away and you found out later that they had different understandings of those decisions or agreements?

Gavin Henry 00:38:29 Yeah, I was just trying to think of whether a tool like GitHub issues would be suitable to do this, but when you do an issue or a, this is what we’re trying to do, it often doesn’t capture the why, which is what I think you’re saying is why did we get to that point? Or how did we, and then if all three of you are of a different memory of why that decision was made

Karl Wiegers 00:38:53 Or even what the decision was

Gavin Henry 00:38:55 Yeah that’s happened a lot. Because it goes back to the initial or the ongoing, sorry, requirements capturing of yeah we’ve got to do this, but why did you think we did it that way and when was that agreed to change it?

Karl Wiegers 00:39:11 Right? So having some records like that I think is very valuable. It doesn’t have to be, again, any more detailed than necessary, but I like this phrase, a persistent group memory, which can then be a resource that everybody can go to. Is it going to be complete and perfect and current all the time? No, probably not. And it takes some effort, but it probably takes less effort to build and maintain that repository of knowledge, decisions, agreements, and details than it does to build something, find out you did it wrong, and then build it again.

Gavin Henry 00:39:45 Well let’s just move on to the next section because I think it fits nicely. So requirements validation is the next section. How do you validate what you’re doing, basically? Is that correct? What does that mean, Candase?

Karl Wiegers 00:40:00 Well, I can maybe talk a little bit about what validation means and then I’d love to hear how Candase’s team did that on their project. Just because you’ve accumulated some set of requirements in any form, whether it’s telepathy or whether it’s detailed in a tool that doesn’t necessarily mean you’ve got the right requirements. You could have a set of beautifully written and modeled requirements that seem crystal clear, complete and unambiguous and yet they could still be wrong, wrong in the sense that they do not completely satisfy the need that we’re trying to do with our solution. So we need to validate requirements to make sure that the specified solution would satisfy the real business needs and hopefully achieve the intended outcomes that the stakeholders are looking for. I think it’s a lot cheaper to do that before you finish building the product and then ask the users if you got it right. So we want to look for techniques that can give us validating information that we’re on the right track all along the way.

Gavin Henry 00:41:03 And can you give me an example of that validation?

Candase Hokanson 00:41:08 Absolutely, yeah. So I mean when we’re validating the requirements, at least my goal is to get as many eyes on the requirements as possible, to have different people with different lenses look at the requirements and compare them to other requirements information, whether that be prototypes or visual models or even a solution architecture, to find issues, to say will this actually meet our business objectives and solve our business problems? And so reviewing requirements can be as simple as just reading them and saying, Hey, do you have the same understanding as I do, to as formal as an inspection with checklists of specific things to look for. But I love getting multiple people to review my requirements. In the case of my project, we were constantly reviewing requirements because we were sprinting in an Agile-ish fashion. So we were reviewing requirements with the developers and testers or, as Karl likes to call them, the victims of our requirements.

Candase Hokanson 00:42:08 So I was weekly reviewing my requirements with them. I was also reviewing with our solution architects to ensure that what we were defining would actually meet our intended goal because we did understand what the business problems were upfront, which is a really foundational piece to understand because if you don’t understand what problem you’re solving, how do you know if your requirements will meet that problem or solve that problem? And then of course with my business stakeholder as well, reviewing both the high-level requirements to make sure that the problem was solved and then also some of the lower-level requirements of the details of how we would get to that final state. So that was one way we validated them. In this case, I didn’t yet know about testing the requirements. That was one of my eye openers as I was co-authoring with Karl, how we can use our multiple views of requirements information like visual models and text-based requirements to test the requirements before you’ve written any code at all. So we didn’t do that on this project, but it’s a valuable tool that I now use on my current projects. And it’s really about having two different thought processes, requirements and testing, and in two different brains if it can be. And comparing them to find issues in both, issues in requirements and issues in tests.

Gavin Henry 00:43:27 Can you test that your requirements are met with software?

Karl Wiegers 00:43:32 Oh yeah, absolutely. And people I think are surprised to think about that, but that was another real eye-opener for me. As we go through our careers you have a handful of small experiences, and you realize there’s a really powerful message in there. And the very first time that I did that, that message came through. I had written some requirements for a project, and it was just a couple dozen requirements. It was a simple thing. But then I said, well I wonder how I could tell if these requirements had been correctly implemented. And so I wrote down a bunch of tests to go along with those. So as Candase said, really there are two different thought processes. One is describing go build this and the other is describing how do we tell if we built the right thing correctly, which is really both validation and verification.

Karl Wiegers 00:44:17 So then I took this set of requirements and a set of tests, and I mapped them against each other, and I made sure that every test could be executed by firing off a certain set of requirements. And I made sure that every requirement was covered by one or more tests. And every single time I did that in my career, I found errors. I found maybe a place where there was some test that couldn’t be executed. Well, what does that mean? It means either I’m missing or have some incorrect requirements that would let that test happen or it means the test is invalid, and I don’t know which is which. But the fact is that we created multiple views, multiple representations of that knowledge, a set of written requirements and a set of written tests. And even better, you could have a prototype, you could have some models like Candase talked about.

Karl Wiegers 00:45:08 In other words, multiple representations of the knowledge then give you the opportunity to compare them this way. You can take a diagram and some tests and trace through it with a highlighter pen, and you can find errors that way when you hit a test and your picture doesn’t let you do it. Okay, something’s wrong. And here’s an important point. If you only create one representation of requirements, and it doesn’t matter what that is, whether it’s just stories or just acceptance criteria or just pictures, if you create only one representation, you must believe it. It’s all you have. But if you create more than one, particularly if different people create them, then you can compare them. You can find these disconnects and errors and incorrect or different assumptions people made. So I think that’s an enormously powerful way to improve the quality of requirements early on. And if you’re doing that in conjunction with the people who have the needs, that helps you validate that those requirements you end up with will meet their needs.
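
The cross-check between requirements and tests described above can be sketched in a few lines. This is only an illustration under stated assumptions: requirement IDs such as `REQ-1`, the test names, and the `cross_check` helper are hypothetical, and a real team might hold the same mapping in a spreadsheet or a requirements management tool.

```python
def cross_check(requirement_ids, tests):
    """Compare two views of the same knowledge.

    `tests` maps a test name to the set of requirement IDs it exercises.
    Returns a pair: (requirements no test covers,
                     tests that cite unknown requirements or none at all).
    """
    known = set(requirement_ids)
    covered = set()
    suspect_tests = {}
    for name, exercised in tests.items():
        unknown = set(exercised) - known
        if unknown or not exercised:
            suspect_tests[name] = sorted(unknown)
        covered |= set(exercised) & known
    untested = sorted(known - covered)
    return untested, suspect_tests


requirements = ["REQ-1", "REQ-2", "REQ-3"]
tests = {
    "test_view_adjudication": {"REQ-1"},
    "test_reprocess": {"REQ-2", "REQ-7"},   # REQ-7 is not in the requirement set
    "test_export": set(),                   # no requirement enables this test
}

untested, suspect = cross_check(requirements, tests)
print("Requirements with no test:", untested)
print("Tests that cannot be traced:", suspect)
```

A finding in either list does not say which view is wrong. As noted in the conversation, a test that cannot be traced may signal a missing requirement or an invalid test, and someone has to go back to the source to decide.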

Gavin Henry 00:46:07 And is there something that this validation, you mentioned validation and verification, are they the same thing?

Karl Wiegers 00:46:17 Well they aren’t really the same thing, although the terms are frequently confused. A team can make sure that their code passes its unit, integration, and system tests. They can confirm that the code correctly implements the design, they can confirm that the design addresses all of the requirements. Those are all software verification activities, but they still don’t tell you if the requirements are the right solution requirements or not. That’s what validation’s about. So the colloquial way people say that is that verification checks to see that you did something right, validation checks to see that you did the right thing. When you get to the very top level of requirements, then basically they’re the same thing because you’re validating against what someone told you or what you found in various documents. Whatever the source of the requirements is, that’s how you validate, you have to go back to the source. And that’s essentially verifying that you’ve done that translation correctly as well. But basically, validation and verification are complementary. They’re not the same.

Gavin Henry 00:47:20 So if you’ve got your requirement that could be feature X, you’ve done that feature, you’ve written the most applicable tests for it, unit tests, property-based tests, integration tests, what have you, does that not mean, because your tests are passing and the feature’s been implemented, that you validated the requirements, sorry, you’ve verified and that now it’s valid? Or is this a step completely separate from the features you developed? Have I asked that correctly?

Candase Hokanson 00:47:55 Ideally you’d be validating that they’re the right features to build before you build them. So the way I think of it is that we should be validating before we build and then we verify after we build to say, did we build it correctly? So some of the colloquialisms that we like to use are: did we build the right thing, or did we build the thing correctly? And so building the right thing is validation, building it correctly is verification. And I actually sometimes, when I teach my training classes, go back to a story again from my civil structural engineering days. When a bridge fails, it’s all over the news and one of the first questions is, well was it built to the specification? So verification. Or was it designed wrong by the engineer? And that designing wrong is more of that validation, like, did we confirm that it would withstand the loads that we needed it to withstand, versus did I lay the rebar on that bridge at eight-inch intervals as per the specification or use the right thickness of bolts or whatever.

Gavin Henry 00:48:57 Okay, I think I understand that right? I was trying to pull apart what Karl was saying where we’ve got this software based suite that validates and verifies our requirements. And is that the same as the test we already write for software or is this something new?

Karl Wiegers 00:49:18 I’m not sure what you mean. Software based suite of tests

Gavin Henry 00:49:22 Like your test suite where you’ve implemented a feature or you’re testing a small unit of code. If you scoop them all up because they’ve been driven by a requirement to create this thing, does that not mean the requirement is then satisfied or…

Karl Wiegers 00:49:35 No, not well it means the requirement is satisfied but it doesn’t tell you if it was the right requirement. It doesn’t mean the customer’s satisfied or the business need is satisfied.

Gavin Henry 00:49:44 So it’s that gap there that

Karl Wiegers 00:49:45 That’s the gap, right. Yeah, that’s exactly the difference between validation and verification. And I think Candase explained it nicely, but yes you can run all those tests. You can say, well wait a minute, I satisfied the specification so I don’t know what you’re complaining about. It’s like, well, because you’re missing a whole chunk of stuff we need or you misinterpreted what I said or things have changed. That’s the difference between validation and verification.

Gavin Henry 00:50:10 Thank you. So I’m going to take us on to the last part of our show, which is requirements management. So who would like to explain this?

Karl Wiegers 00:50:19 I’ll start. Candase has got a lot of experience so she can fill in some details, but so far we’ve been talking primarily about requirements development activities, these four domains of elicitation, analysis, specification and validation. And most of our practices are in those categories and the large volume of any book on requirements or business analysis covers those kinds of activities. But requirements management is the sub-domain of requirements engineering that deals with how the requirements are used on the project and how the project responds to evolving needs. There are several valuable requirements management practices. They include things like version control of requirements as they change over time because we want to keep track of those changes. Sometimes you have to revert to an earlier version and it’s good to know why a requirement was changed. We want to track requirement status for each one of them.

Karl Wiegers 00:51:17 Again, a requirement being whatever granularity you’re at, user stories are fine, but we want to track status as they move from being proposed through whatever steps in their lifecycle exist until ultimately it’s either delivered and verified in the product or perhaps deleted from the baseline or deferred to a future release. Requirements traceability or tracing, that’s another important practice. And if we had written a book about the 21 most important business analysis practices, I think traceability would’ve been the one we’d added, but we left that out. I think most teams probably don’t do much tracing other than perhaps from requirements to tests. And again, that’s a risk-based thing. I talked to a guy once in the very first course I taught on requirements, and I’ve taught about 250 of them now. But in the very first one I taught, around 1995, there was a guy in the class who had worked on the Boeing 777 airplane project and he said their requirement specification for the software was a stack of paper six feet thick and they had a complete requirements traceability matrix. And for an airplane that’s a pretty good idea, but most people aren’t going to do something that rigorous for a phone app or something. But the two requirements management practices that we talk about in the book and that do apply to every project are establishing and managing requirements baselines, and then of course managing changes to requirements effectively. So those are, I think, core parts of requirements management that apply to everyone.
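
Status tracking of this kind can be pictured as a small state machine. The sketch below is an assumption-laden illustration: the conversation names only proposed, delivered and verified, deleted, and deferred, so the intermediate `APPROVED` and `IMPLEMENTED` steps, the allowed transitions, and the `Requirement` class are hypothetical choices a team would adapt to its own lifecycle.

```python
from enum import Enum


class Status(Enum):
    PROPOSED = "proposed"
    APPROVED = "approved"        # assumed intermediate step
    IMPLEMENTED = "implemented"  # assumed intermediate step
    VERIFIED = "verified"        # delivered and verified in the product
    DEFERRED = "deferred"        # pushed to a future release
    DELETED = "deleted"          # removed from the baseline


# Assumed lifecycle: which moves are allowed from each status.
ALLOWED = {
    Status.PROPOSED: {Status.APPROVED, Status.DEFERRED, Status.DELETED},
    Status.APPROVED: {Status.IMPLEMENTED, Status.DEFERRED, Status.DELETED},
    Status.IMPLEMENTED: {Status.VERIFIED, Status.DEFERRED, Status.DELETED},
    Status.DEFERRED: {Status.PROPOSED, Status.DELETED},
    Status.VERIFIED: set(),
    Status.DELETED: set(),
}


class Requirement:
    def __init__(self, req_id):
        self.req_id = req_id
        self.status = Status.PROPOSED
        # Keep the reason for every move so the "why" is not lost.
        self.history = [(Status.PROPOSED, "created")]

    def move_to(self, new_status, reason):
        if new_status not in ALLOWED[self.status]:
            raise ValueError(
                f"{self.req_id}: {self.status.value} -> {new_status.value} not allowed"
            )
        self.status = new_status
        self.history.append((new_status, reason))


req = Requirement("REQ-12")
req.move_to(Status.APPROVED, "agreed in release planning")
req.move_to(Status.DEFERRED, "moved to a future release to make room for a change")
print(req.req_id, req.status.value)
for status, reason in req.history:
    print(f"  {status.value}: {reason}")
```

Recording a reason with every transition is a deliberate choice here, since the discussion stresses that it is good to know why a requirement changed, not only that it did.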

Gavin Henry 00:52:51 And what’s requirements baseline?

Candase Hokanson 00:52:54 Sure, I can take that one. So the requirements baseline is really any set of scope that has been agreed by the stakeholders, development, and testing to be developed, tested, and deployed as a group. And once they have that alignment, this kicks off the change control process, the managing of changes after it has been aligned. There’s a couple of different types of baselines that we talk about. So they can be time bound, where you start with a time box, like an increment, a set of increments, or a release, and when you’re going to deploy. So you fill up that time box up to your development and testing capacity and that becomes your baseline. Alternatively, we also have scope bound baselines. So we don’t know the deployment date yet because we haven’t necessarily sized everything, but we take a logical group of functionality and we agree that it can be developed, tested, and deployed together. Regardless of which type of baseline you’re using, it’s really used to align all the parties that we can start and move forward with development with minimal risk of major changes. I mean, change always happens, but we don’t want to get halfway through and find out we’ve built completely the wrong thing. So getting that baseline says, yep, we acknowledge there will be changes, but we are confident enough that we can build this and that the changes will be relatively minor. And that does of course officially start the change control process for that set of scope.

Karl Wiegers 00:54:21 So really a baseline is simply an agreement what we’re going to build in a particular chunk of time or chunk of work and that’s a pretty good idea to have explicit, even if it has some opportunities for changing.

Gavin Henry 00:54:33 Is there an example you have from your project Candase, where you said you agreed to get X, Y, Z done but the user or customer came back and said, nah, we need this done and you got to deal with it?

Candase Hokanson 00:54:47 So we definitely had changes on our project. We had a multi-tiered approach to our baselines since we were doing a modified scaled Agile approach. In scaled Agile, they have program increments. So all the teams that are working on similar products form an Agile release train and they agree to a release schedule, which is typically four to six iterations; that’s a program increment. And during that program increment, they’ll have a planning session where they establish the baseline for that program increment release. And so we would establish baselines at that level, which was again typically four to six iterations, so roughly a quarter, and then as well at the iteration level. So every sprint or iteration, during sprint planning, we would also have the baseline of this is what is in scope for this sprint versus the other sprints. And having that multi-tiered baseline meant that I, as the product owner, could move things between iterations as long as I kept them in the same release baseline.

Candase Hokanson 00:55:45 Now of course you mentioned if the stakeholder comes back and says, no, we need something else, well that’s part of that change control process. So we would be able to take those in, assess the change, and depending on our capacity, could say, yes, we can either do that or we can, but what are the trade-offs? What do we take out of the release or the iteration so that we can bring in the new story that you’re asking for? And that process doesn’t have to be super onerous. It can be fairly lightweight, involving emails and discussions. But every team should know how they request changes and how those changes get incorporated into their products so that they’re all aligned effectively. One of the things Karl likes to talk about is that requirements is a communications problem or communications project, so to speak. It’s not really a technical issue. It’s really can we get everybody aligned on what we should be building and track that over time?

Gavin Henry 00:56:44 How do you manage these changes that they asked for? Just in the agreed requirement specification that we’ve discussed about, or is there a different way?

Candase Hokanson 00:56:52 So we had a very lightweight change control process that we had agreed upon, where, as changes were identified, they would get sent usually via email, sometimes through a communications IM tool like Slack. And then we would put those in our requirements management tool. So any requirement, whether it was part of the initial specification or part of the change control process, would get entered into our requirements management tool so that we had one place to track everything. From there, we would assess the impact of that change. So how big it was, we would size it with the team and the development and testing team. And from there we’d talk about the urgency. So is it something that needed to be in the current release or could it wait for a future release? If it was for the future release, it just got put in the backlog.

Candase Hokanson 00:57:40 If it needed to be part of that current release, then we would talk about trade-offs. Do we need to remove something from this release to make room for this? If the trade-offs were acceptable, then we would move the impacted scope out, bring the new change in, and it would move through the development cycle in the requirements management tool. So this was pretty lightweight. We didn’t have a whole lot of approvals, but we did have a couple of checkpoints to say we have to do all of our requirements development work with changes just like normal requirements. So we have to elicit, analyze, specify and validate them. So we did all of that to ensure that we had the right requirements documented as part of the change. And then once we knew the impacts, then we had another checkpoint to say, is this higher priority than what we’re working on now, so that we can make the appropriate trade-offs and keep the release moving. So in that way we were able to take in most changes and just have that open communication.
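
The lightweight change control steps just described (size the change, ask how urgent it is, then look for trade-offs against the current baseline) can be sketched as a small decision function. Everything named here is a hypothetical illustration: the `Item` record, the numeric priority scheme, the capacity figure, and the `triage_change` helper are assumptions, and the function only proposes an outcome that people would still need to agree to.

```python
from dataclasses import dataclass


@dataclass
class Item:
    name: str
    size: int       # the team's size estimate, for example story points
    priority: int   # lower number means higher priority


def triage_change(change, urgent, baseline, capacity):
    """Return (decision, items_to_move_out) for a sized change request.

    - Not urgent: goes to the backlog for a future release.
    - Urgent and it fits: added to the current baseline.
    - Urgent and it does not fit: propose moving out the lowest-priority
      baselined items that rank below the change, if that frees enough room.
    """
    if not urgent:
        return "backlog", []
    free = capacity - sum(item.size for item in baseline)
    if change.size <= free:
        return "accept", []
    removed = []
    # Consider the least important baselined items first.
    for item in sorted(baseline, key=lambda i: i.priority, reverse=True):
        if item.priority <= change.priority:
            break  # everything left is at least as important as the change
        removed.append(item)
        free += item.size
        if change.size <= free:
            return "accept with trade-off", removed
    return "defer", []


baseline = [Item("A", 5, 1), Item("B", 3, 2), Item("C", 5, 4)]
change = Item("X", 4, 3)

decision, moved_out = triage_change(change, urgent=True, baseline=baseline, capacity=13)
print(decision, [item.name for item in moved_out])
```

The function leaves the baseline untouched on purpose. In the process described, the trade-off is a conversation with stakeholders, so the sketch stops at a proposal rather than applying the swap itself.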

Gavin Henry 00:58:39 So there’s a few toggles, switches, you can tweak depending on what the user’s after and see if it messes up your baseline. Excellent. So I guess it sounds like the requirements tools are separate from your project tools, that this isn’t the same as traditional project management at all, is it?

Karl Wiegers 00:59:00 Well, I always kind of wonder what people are thinking about when they talk about traditional anything. It somehow sounds pejorative. Like anything traditional is old-fashioned and therefore useless or bad. Sometimes people will think, oh, we’re Agile around here. We don’t do traditional project management or traditional fill in the blank. But it’s much more important to consider whether a certain practice is likely to increase the project’s chance of success, regardless of whether that practice is brand new or whether it’s been used for decades. So for example, all projects define requirements baselines, as Candase described it, whether they call them that or not, it’s just an agreement about what we’re going to implement in a certain period of time or a certain release. People do that, although they may not think in terms of a baseline, and then if you say, well here you ought to have baselines, they think, well that’s old fashioned we don’t do baselines. Yeah, you’re just not calling them that. You may be calling it an iteration plan or something.

Gavin Henry 01:00:02 What I had in my head was traditional being a Gantt chart that you move stuff around on.

Karl Wiegers 01:00:07 And you still do that. I mean, people are still doing tasks on any project. They’re still doing them in a certain sequence. They’re estimating how long those tasks are going to take. They’re looking at dependencies between tasks that have to be done in a certain order. This has to be finished before I can finish that. A Gantt chart’s a convenient way to show that. And you may find people who say, well, that’s traditional, that’s old, we don’t do that. But you’re still doing the same kind of work even if you’ve got this new mystical terminology that we’re using. So if a Gantt chart’s a useful way to show how these pieces fit together to make sure that we have the right outcome when we’re done, why not?

Gavin Henry 01:00:50 Thanks. I’m going to start winding up the show now. It’s gone far too quick. So apologies for that. There’s one question I’m going to sneak in, though; it’s actually one of my emergency questions in case we finished too early. But can you provide some tips for somebody that’s got an ongoing project that loves what we’ve spoken about? It totally fits their brain. They want to start things. Where could they start?

Karl Wiegers 01:01:15 We can probably both comment on that. I have some suggestions. I would say first, if things aren’t going as well as you like and you think that, oh, maybe we should do some of this stuff, let’s do some root cause analysis to understand why. I’m a big fan of root cause analysis, so we understand a problem before we start applying a solution. Maybe you say, oh, well, we should do use cases. Okay, well, why are you not already getting the results that you want? If use cases are the answer, what’s the question? It might be a perfect solution; it might not solve your problem. Retrospectives are a good way to start that activity. If you’re going through an iterative project, a retrospective is a typical part of many Agile projects, and those should be taken very seriously, using the knowledge from that, the insights, to understand what didn’t go as well as we’d like, what went great, and let’s translate that into better action on the next iteration.

Karl Wiegers 01:02:14 So if you can trace any of the problems or issues you encountered back to requirements-related factors, then you can consider which of the 20 practices we talk about, or the dozens and dozens of practices in other books on requirements engineering and business analysis, might help. Pick out which of those you think might help you solve that problem. But you can actually start doing this stuff incrementally. If you don’t have a complete set of models, fine, draw a model for the next piece of the work you have to do, just to both get comfortable with the technique and to begin enhancing your skills.

Gavin Henry 01:02:46 Yeah, you could create a baseline at any point, couldn’t you, and then work from there.

Candase Hokanson 01:02:50 And that was exactly what I wanted to add on that: assess where you are now. You may be doing a lot of the pieces of the practices that we talk about, but you may be calling them something else. So understand where you are. And then, as Karl mentioned, you don’t have to do everything at once. And sometimes it can seem overwhelming to have 20 practices. That’s a lot of things to do, but you don’t have to bite off everything at once. You can pick one practice or one model just to incrementally improve over time.

Gavin Henry 01:03:18 Thanks. So I think it’s a good time now to ask my usual question at the end of the show. I’ll start with you first, Karl. If there’s one thing you’d like a software engineer to remember from this show, what would you like it to be?

Karl Wiegers 01:03:32 Well, my bottom-line point is that all project and product teams have to deal with requirements for the work they do, whatever they call them. And I think if anyone from a software team were to look at the 20 practices that Candase and I recommend in this book, they would realize that virtually all of them apply to their work. So I suggest that listeners and readers select those practices, starting with maybe just a couple. As Candase suggested, you don’t have to do them all right away. Select the practices that you think would be most valuable for increasing the chance of success, for reducing risk, or even better, reducing current points of pain. And then think about how best to adapt the practices to suit the nature and culture of your project team and organization. So I think of the practices that I write about in the requirements books that I’ve done as a toolkit, and you can assemble a package of tools and techniques that are going to be appropriate for your project, your situation, your problems, and adapt them. Don’t necessarily figure they’re going to plug in exactly without a little thought in considering your culture and the nature of the organization. But I think if you go through the list, you’ll find that they pretty much all apply.

Gavin Henry 01:04:49 And Candase?

Candase Hokanson 01:04:51 Well, I think Karl stole mine, but I have another one up my sleeve. It goes back to some of the themes of the book that we talked about early on, which is, we don’t do software requirements all at once. It is that iterative and circular process that we’re going to go through multiple times throughout a product or project’s lifecycle. So understanding that you’re not going to do the analysis once and you’re done, and also that change happens. So those two together mean that we should always be constantly learning more about the product or project that we’re working on and be willing to embrace that change and move forward with that project.

Gavin Henry 01:05:26 Thank you. Was there anything we missed that you’d like to mention?

Karl Wiegers 01:05:30 Well, we could both talk about this stuff for hours and hours, but we only have one hour here, so I think we’ve done a pretty thorough job.

Gavin Henry 01:05:38 Thank you. So people can follow you both on LinkedIn. I’ll put the links in the show notes. Is there any other way you’d like them to get in touch if they need to?

Karl Wiegers 01:05:46 Well, through LinkedIn or through our websites. I think you’re going to show some of our websites there as well. The website for the book is probably the most valuable place to get started. It’s just softwarereqs.com, which is a good entry point into all of this. For example, we have a video series called the One Minute Analyst, which has very, very concise highlights of each of these 20 practices. So that’s a good starting point as well. But we’re pretty easy to find online.

Gavin Henry 01:06:18 So Karl and Candase, thank you for coming on the show. It’s been a real pleasure. This is Gavin Henry for Software Engineering Radio. Thank you for listening.

[End of Audio]