Mojtaba Sarooghi, a Distinguished Product Architect at Queue-it, speaks with host Jeremy Jung about virtual waiting rooms for high-traffic events such as concerts and limited-quantity product releases. They explore using a virtual queue to prevent overloading systems, how most traffic is from bots, using edge workers to reduce requests to the customer’s origin servers, and strategies for detecting bots in cooperation with vendors. Mojtaba discusses using AWS services like Elastic Load Balancing, DynamoDB, and Simple Notification Service, and explains why DynamoDB’s eventual consistency is a good fit for their domain. To explain the approach, he walks us through how his team resolved an incident in which a traffic spike overloaded their services.

Brought to you by IEEE Computer Society and IEEE Software magazine.

Show Notes

Related Links

- Queue-it Smooth Scaling Podcast

- Queue-it High Availability White Paper

- Hype Event Protection

- What is an Application Load Balancer?

- DynamoDB

- Simple Notification Service

Transcript

Transcript brought to you by IEEE Software magazine.

This transcript was automatically generated. To suggest improvements in the text, please contact [email protected] and include the episode number and URL.

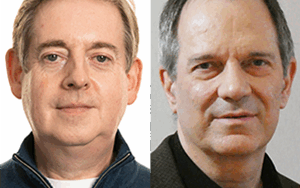

Jeremy Jung 00:00:19 Hey, this is Jeremy Jung for Software Engineering Radio and today I’m talking to Moji Sarooghi. He’s a distinguished project architect at Queue-it. Moji, welcome to Software Engineering Radio.

Mojtaba Sarooghi 00:00:31 Hello, hello. Nice to be here. I’m Product Architect, just to be clear.

Jeremy Jung 00:00:37 Oh, Product Architect. Okay.

Mojtaba Sarooghi 00:00:40 Sure.

Jeremy Jung 00:00:41 And I think what we should start with, because a lot of people won’t be familiar with what Queue-it is and what it does, what’s a virtual waiting room?

Mojtaba Sarooghi 00:00:52 When there is a traffic spike on a web server, what we do is take that traffic and then, based on some flow that our customer sets, redirect those visitors back to the customer website. Actually, what we call it is more like a traffic management tool. But what happens under the hood, or what is visible for visitors, is this: when there is a ticket sale or a specific product drop, there are a lot of people interested in that product, so they go and try to buy it. Imagine it could be a new iPhone release or a really interesting singer who has a new concert. So, the website announces that there is a sale at this time, and a lot of people will go and try to buy. We get the first hit, we show a good user experience to the visitors, and we give them a fair user journey. We redirect traffic back to the customer website, and they can buy the tickets or product that they’re interested in.

Jeremy Jung 00:02:00 And would this usually be where your customers would always have Queue-it in front, or would it be where the customer decides, oh, I’m going to have this high-traffic event, and so I’ll only use it in these situations?

Mojtaba Sarooghi 00:02:14 Yeah, we have two different scenarios. The first we call the opening scenario. In the opening scenario we set it up and say that the waiting room will start at a specific time. So, traffic outside that time goes directly to the origin, which means all the product pages and all the resources are accessible. At that specific time, the traffic will be redirected to us. We have another scenario that we call 24/7 protection, or “safety net” in the old name, which means that traffic always goes through flow protection. So, say one of our customers does not know that there is a specific sale time: someone in social marketing goes and puts a post on a social network, and suddenly there is a surge of traffic coming to the website. We also monitor for that. So: opening scenario versus 24/7 protection.

Jeremy Jung 00:03:10 Because for the second case, something that I’ve been experiencing a number of times is where a website that sells something, they put up a popular product and the time they put it up you go to the webpage and it just hangs or doesn’t load and people are all trying to refresh the page or wonder if they’re going to get in and they’re trying to put stuff in their cart and they’re getting error messages. So that’s what this tries to avoid.

Mojtaba Sarooghi 00:03:38 Exactly, exactly. That is the case. So how we talk about our service is that there are two reasons here. The first one is that we want to give a good user experience to the visitors, and the need for that good user experience comes from scarcity. The nature of the product is that you have 10 or a hundred items in stock. So how do you want to sell those? To whoever comes fastest? On the internet, “fast” does not make sense: am I closer to the data center, so I’m fast, or is the other one fast? So, what we do, we go for the opening scenario: everybody comes, they get randomized, and they get a place in the waiting room. So, it’s a kind of fair user journey. The other scenario, as you mentioned, is that I just put it there and then my website does not respond in the right time. So, we are still talking about the good user journey in both scenarios.

Jeremy Jung 00:04:30 So I imagine each scenario is a little different in how Queue-it handles it. Out of the two, which one do you think we should start with? I want to walk through what happens when a user goes to the site and then how that flows through Queue-it’s architecture.

Mojtaba Sarooghi 00:04:46 From the technical perspective, both of them are the same, almost just the opening is different. So, we can start by the scheduled waiting room. I think that has something extra than 24/7 which is opening time.

Jeremy Jung 00:04:59 Okay, so let’s start with that. Let’s give an example of you’ve got a big event whether that’s a big concert or a conference that’s really popular and let’s say there’s a time that it’s scheduled to start, I go to the ticket selling page, what’s happening with Queue-it and my connection?

Mojtaba Sarooghi 00:05:20 Sure. I’ll also get a little bit technical in this one. We have a piece of code that we call the Connector. That code has the responsibility to look at the request context on that specific add-to-cart or visit to that specific ticketing page. What happens is that a visitor makes a request to the backend, and this connector code is running on the customer website. It could be sitting on the Edge, which is the CDN, where we use Edge compute, what we call an Edge worker, so that calculation or compute happens on the Edge. Or it could be on the customer website, which could be a PHP server, a .NET server, or a Java server, and in each language we have this connector. What it does is look at the request and look at the context. When I’m saying context, to be clear, it could be a cookie, a header, or all the other information related to the HTTP GET or POST.

Mojtaba Sarooghi 00:06:14 If the connector says that this specific visitor has not been in the waiting room, we do a redirect to the waiting room as the response, and then the visitor is presented with a waiting room page. At this stage we design the page so that it looks like the brand that you are seeing. So, you don’t think, oh, I was buying a ticket and now it’s a totally different page. It’s a customizable page. Then, in the opening scenario that we are discussing, you get a QID, your queue ID, which means that you have that ticket and you are waiting with the others. The waiting room is still not open. Imagine the waiting room opens at 9:00 and now it is 8:30, so we will wait here until all the other people come.

Mojtaba Sarooghi 00:07:10 You can imagine a shop where all the people come and stand in front of the door, and the door is not open yet. At the time that the door opens we do a raffle, or we randomize based on a specific algorithm, and then to each queue ID we give a queue number, which gives you a place in the waiting room. Then on this page that is generated there is a polling mechanism. Again, a little bit technical, it’s just an HTTP GET: give me my place in the waiting room. Now we know your place because the randomization has been done, so we show you that your place in the waiting room is number 100, for example, and how many people are in front of you. We know the outflow of the waiting room, how many visitors we can redirect per minute. This value is set by the customer.
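
The raffle at opening time can be sketched as a shuffle of the queue IDs collected before the door opens. This is only an illustration: the transcript does not describe the actual randomization algorithm, and the function name `assign_queue_numbers` and the sample IDs are hypothetical.

```python
import random


def assign_queue_numbers(queue_ids, seed=None):
    """Shuffle pre-opening queue IDs and give each one a 1-based queue number.

    Hypothetical helper: a plain shuffle stands in for whatever
    randomization the real system uses.
    """
    rng = random.Random(seed)
    shuffled = list(queue_ids)
    rng.shuffle(shuffled)
    return {queue_id: number for number, queue_id in enumerate(shuffled, start=1)}


# Everyone who arrived before opening gets a place, regardless of arrival order.
early_arrivals = ["qid-a", "qid-b", "qid-c", "qid-d"]
queue_numbers = assign_queue_numbers(early_arrivals, seed=42)
```

Arrival order before opening plays no part in the result, which is the fairness property described above.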

Mojtaba Sarooghi 00:07:58 So based on your place in the queue and on the outflow number, we can calculate and show approximately when your turn is and when you will be redirected. There are some details here. Somebody might leave the waiting room, so there is a no-show, and then this number will be recalculated, but it is a good estimate of what will happen. So, one can put the phone in the pocket and come back, say, 30 minutes later when it will be their turn. When they open it up, what we show is that now it is your turn. At this stage we redirect back to the customer website with a specific token that is hashed by us, and that token or session shows that this specific visitor has passed the waiting room. Then we let the call to the origin happen, so you can purchase the tickets. That is the flow of the opening scenario.
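
The estimate described here is essentially the number of people ahead divided by the outflow per minute. A minimal sketch, assuming a constant outflow and ignoring no-shows (the function name `estimated_wait_minutes` is hypothetical):

```python
import math


def estimated_wait_minutes(people_ahead, outflow_per_minute):
    """Approximate minutes until a visitor's turn.

    Assumes a constant outflow and that everyone ahead shows up,
    so the real number would be recalculated as people leave.
    """
    if outflow_per_minute <= 0:
        raise ValueError("outflow_per_minute must be positive")
    return math.ceil(max(people_ahead, 0) / outflow_per_minute)


# 3,000 people ahead with an outflow of 100 visitors per minute.
wait = estimated_wait_minutes(3000, 100)
```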

Jeremy Jung 00:08:55 Okay, so to summarize, you are looking at certain things that determine whether or not this user should be allowed into the waiting room and then you give them a random QID and you basically wait until the actual starting time of when people can buy tickets, you randomly assign them a place in line and then you manage that line until once the user gets to the front of the line, then you send them to the user’s system or the customer system so that their system can process the actual sale. Exactly. So, I want to talk a little bit more about that first step. When you say you’re trying to determine if this person should be allowed in, what are some of the things that you’re looking at to determine whether they should be or not?

Mojtaba Sarooghi 00:09:45 Yes, in the first part that I mentioned, what we do is that we send another token from the customer website to Queue-it, and that token is hashed by our connector. So, our connector knows that this is a specific visitor. When you make the first request, that will be a redirect to Queue-it carrying that token. So, there is a token in and there is a token out. That is one way of setting this up. We have another type of waiting room that we call “invites only”, or a kind of VIP waiting room. In that kind of waiting room people start their journey from a specific link that is issued by the customer. So, imagine it is Black Friday, and the customer has a loyalty program with a list of their loyal customers. We call them visitors, but for our customers they are their customers. They issue a specific link for them, and we let whoever has that link come and join the waiting room. Also, we have something in place that says every key can get just one queue ID. So, people are also limited and cannot reuse their tickets when they join the waiting room.
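
The “every key can get just one queue ID” rule can be sketched with a simple lookup table. This is an in-memory illustration only; the class name `InviteOnlyWaitingRoom` and the sample keys are hypothetical, and the transcript does not say how the real system stores this mapping.

```python
import uuid


class InviteOnlyWaitingRoom:
    """Hands out at most one queue ID per invite key (illustrative only)."""

    def __init__(self, invited_keys):
        # None means the key is valid but has not been used yet.
        self._queue_id_by_key = {key: None for key in invited_keys}

    def join(self, invite_key):
        if invite_key not in self._queue_id_by_key:
            return None  # not on the customer's invite list
        if self._queue_id_by_key[invite_key] is None:
            self._queue_id_by_key[invite_key] = str(uuid.uuid4())
        # Reusing a key returns the same queue ID, never a second place in line.
        return self._queue_id_by_key[invite_key]


room = InviteOnlyWaitingRoom(["loyal-customer-1", "loyal-customer-2"])
first_visit = room.join("loyal-customer-1")
second_visit = room.join("loyal-customer-1")
```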

Jeremy Jung 00:10:57 In that first scenario you were saying the customer site sends you a token. So, it sounds like in that scenario before the user gets to Queue-it they would be going to the customer site directly getting this token. Exactly, yes. Okay, so that would mean that the customer site needs to be able to handle at least this initial load.

Mojtaba Sarooghi 00:11:17 Exactly.

Jeremy Jung 00:11:18 I got it.

Mojtaba Sarooghi 00:11:18 Yeah, you are pointing to the right thing, and that is exactly the point that I’m mentioning. If you use, for example, the Edge Connector, it is actually not the customer website that gets the first hit. It is the CDN, and the CDN can handle quite a lot. And in the scenario where our connector is set up not on the Edge but on the origin, although the website gets the first hit, that first hit is not touching any database or anything like that. The logic is pretty simple in that scenario: okay, you get the call, it is protected, issue a token, redirect to the queue. So, it’s not a heavy computation in that regard.

Jeremy Jung 00:12:01 And so this example where they go to the customer’s site first, you’re saying that they integrate a library or a piece of code from Queue-it and that code is actually what’s making the determination that this is a valid user or not?

Mojtaba Sarooghi 00:12:17 Exactly.

Jeremy Jung 00:12:18 Okay. So, it’s the origin needs to handle it but that check is relatively, it’s not resource intensive so hopefully as long as you have enough compute power you should be okay.

Mojtaba Sarooghi 00:12:32 Exactly, and this whole process, again, in the scenario that it’s open: we are talking about a scenario where the sale is open for everybody, or where the person has logged in to the system, and that does not happen at the peak, because the login process is kind of distributed over time. So, this is a pretty simple calculation: adding some kind of key and then redirecting to Queue-it. We even have another layer, another way of integrating, if you are doing it on the CDN, like Akamai EdgeWorkers or Cloudflare Workers or AWS or Fastly. These are pretty highly capable CDNs. So, the origin does not even get the first hit. The first hit happens on the Edge, and the calculation or compute that I mentioned does not happen on the customer website. It happens on the Edge.

Jeremy Jung 00:13:28 I see. And that’s the customer’s choice in terms of they can choose one of these cloud providers to run this initial check on?

Mojtaba Sarooghi 00:13:37 Exactly, correct. And also, we really recommend Edge compute, because we believe that this is the future from the security perspective, from the load perspective, and also from the maintainability perspective. If we have this piece of code that I’m writing, for example, in C# and give it to the customer, it is hard: you need to open up the server, and some of our customers have, I don’t know, 500 developers maintaining that server. So, it would be really hard to go and tell them, okay, please put it on the action, for example, for this add to cart. But when we put it on the Edge there is this decoupling between the origin and the Queue-it code, which is pretty neat from the maintainability perspective.

Jeremy Jung 00:14:19 And that piece that’s running on the Edge, is that a piece of code that you provide to your customers?

Mojtaba Sarooghi 00:14:26 Exactly. We call it the connector. It’s SDK code; it’s not complicated code. Yeah, it has a couple of if-then-else statements for what path I need to look at, because maybe on the day that we are protecting one show we don’t want to protect the other show. So, we need to do path matching and then also evaluation of those hashes that I mentioned before. So that is pretty lightweight code that can run almost everywhere.
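
The shape of such a connector, path matching followed by hash evaluation, can be sketched as below. This is not Queue-it’s SDK: the secret, the protected paths, the waiting room URL, the cookie name `queue_token`, and the token layout are all assumptions made for illustration, and an HMAC-SHA256 signature stands in for “hashed” as used in the conversation.

```python
import hashlib
import hmac
import time
from fnmatch import fnmatchcase

# All three values below are hypothetical placeholders.
SECRET_KEY = b"secret-shared-with-the-waiting-room"
PROTECTED_PATHS = ["/tickets/big-show/*", "/cart/add"]
WAITING_ROOM_URL = "https://waitingroom.example.com/"


def sign(queue_id, expires_at):
    message = f"{queue_id}.{expires_at}".encode()
    return hmac.new(SECRET_KEY, message, hashlib.sha256).hexdigest()


def make_token(queue_id, expires_at):
    # Assumed layout: queue_id.expiry.signature (queue_id must not contain a dot).
    return f"{queue_id}.{expires_at}.{sign(queue_id, expires_at)}"


def token_is_valid(token, now=None):
    now = int(time.time()) if now is None else now
    parts = token.split(".")
    if len(parts) != 3:
        return False
    queue_id, expires_raw, signature = parts
    try:
        expires_at = int(expires_raw)
    except ValueError:
        return False
    expected = sign(queue_id, expires_at)
    # Constant-time comparison of the received and recomputed signatures.
    return hmac.compare_digest(signature.encode(), expected.encode()) and expires_at > now


def handle_request(path, cookies, now=None):
    """Return ("pass", None) or ("redirect", url) for one incoming request."""
    if not any(fnmatchcase(path, pattern) for pattern in PROTECTED_PATHS):
        return ("pass", None)  # this path is not protected today
    token = cookies.get("queue_token", "")
    if token and token_is_valid(token, now):
        return ("pass", None)  # visitor already went through the waiting room
    return ("redirect", WAITING_ROOM_URL)
```

Nothing here touches a database, which matches the point that the check is cheap enough to run on the origin or on an Edge worker.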

Jeremy Jung 00:14:55 And what is that written in? Is that JavaScript or something that’s kind of commonly available?

Mojtaba Sarooghi 00:15:00 Yes. Okay, you have a point on that one. Yeah, when we are talking about the Edge ones, it is mainly JavaScript. Now we are also seeing a little bit of Wasm getting popular as well, but almost all the Edge compute technologies, at least the four that I am mentioning, Fastly, Akamai, Cloudflare, CloudFront, support JavaScript, in different architectures. But at the end the code is the same. If we are talking about the origin one, it is written in the language that the origin runs. So, if there is a customer that’s running C#, it is written in C#. If it’s Java, it’s written in Java. So, the important thing here, again, not specifically related to Queue-it, is that this connector, the piece that we need to put on the customer website or on the Edge, should be easy to integrate. That will speed up the whole onboarding process, which is pretty important.

Jeremy Jung 00:15:49 You can either choose to run this connector, this check for valid clients on the Edge, in which case it’s JavaScript or they can run it on their origin. And it sounds like you have a number of different languages that you’ve provided. Let’s say we get past that point, we’ve done this check, now we’re in the waiting room as a customer, what service of Queue-it are we interacting with? Are we polling something to see what’s going on? What’s that part look like?

Mojtaba Sarooghi 00:16:17 Yes. Now this page that is generated is an HTML page. We use a CDN to serve these static resources for ourselves as well. We also have a WAF sitting in front of our services, to do DDoS protection. On the backend, we call those services visitor-facing services. So, we created, we can say, small microservices to be able to answer these requests from the visitors. These services need to be highly reliable and scalable, because they’re the main part that will respond to the visitor requests, which can be a couple of million per minute. I’m talking about 50 million, 60 million, a hundred million during the peak time, which may not all be real users, maybe 90% could be bots as well, but they are the main parts. What we do here: the first request is a page GET. For that GET request, if it understands that there is a queue scenario, we need to show or render this HTML page, and then after that we need to have some kind of call that gives the visitor the state of where he or she is in this waiting room and then updates the information.

Mojtaba Sarooghi 00:17:34 We also have the capability for the customer to put in some kind of information to show, oh, the stock is done, or we are still selling this product, and all that stuff. So, we have three different things here. First, we need to assign a queue number, do that calculation, and give them a queue ID. Then we need to render a page and customize that page. And then we also need to update the information.

Jeremy Jung 00:18:03 And that service that’s handling all this or this set of services. Can you give some more details on where all this information is being stored? Is it in some kind of in-memory store or some more traditional database? What’s going on there?

Mojtaba Sarooghi 00:18:20 Sure. We are using AWS as our cloud. If we go from the layered perspective, we have the CDN, we have the WAF, and then we have the Application Load Balancer. The Application Load Balancer gets the request, and based on the path of that request we direct it to the different services that do their job. For example, imagine that we are showing a CAPTCHA. So, on the specific path that says API CAPTCHA, we choose the target in AWS, meaning those microservices that are responsible for this one. And because all of the services are behind this Application Load Balancer, we have this capability of scaling behind the scenes. So based on the scenario we can scale and say, oh, now the response time or the number of requests is high, or the CPU usage is high. And then based on that we can automatically scale the backend, which is pretty important for us.

Mojtaba Sarooghi 00:19:24 Then these microservices will do a calculation if it’s needed, and we touch a database. For the database we are using, as you mentioned, in-memory storage for some of the stuff that is more local, or where I need a really, really fast one. We are talking about a couple of milliseconds. But we are also heavily using DynamoDB in AWS. That is a really cool high-throughput database. I remember that in some cases we were talking about 100 or 200K TPS, transactions per second, for that one. So, it gives us a really great capability to handle the traffic spikes.

Jeremy Jung 00:20:10 It sounds like maybe the primary store of somebody’s in the waiting room, you’re keeping track of their QID where they are in line. All that information it sounds like is in Dynamo?

Mojtaba Sarooghi 00:20:24 That’s correct, as the backend. But we also use cookies as well, so on the client side we have some information stored. Again, we do validation on that one. But from the database perspective, after the cache is hit, it will be Dynamo, and Dynamo is used quite heavily in this picture.

Jeremy Jung 00:20:43 And you’ve mentioned how you have so many transactions per second, you gave the 100 to 200 thousand as an example. Is there some kind of caching layer in front that’s helping you handle that kind of throughput?

Mojtaba Sarooghi 00:20:58 It really depends on the scenario. Yeah, if I want to give an answer, yes, we have the caching layer as well, but Dynamo itself in most cases can handle this traffic. Creating a cache and creating a different layer will help in some scenarios, but what I have actually experienced is that Dynamo itself can be seen as persistent storage that is pretty fast. If we have something like MySQL or another traditional database, there is no doubt that you need a cache in front of it. With Dynamo, at least in a scenario where you are worried about your data, that is less true, because when you have a cache you need to be careful about whether the cache is stale or not, and all of that itself uses some kind of resources, but Dynamo doesn’t need that. You have scenarios where it can handle this stuff directly. I can say that, at least in our system, it is 50/50: whenever we can, we use the cache, but if that’s not possible, Dynamo will handle it for us. So at least when a product is in the beginning state and we want something fast to market, we can trust Dynamo for this scenario as well, without the need to create a cache between the microservices and Dynamo. So, we can use Dynamo directly.

Jeremy Jung 00:22:16 And the software that’s maintaining the person’s position in the queue, and the software that’s deciding when somebody should be removed from the queue, what is that written in and how does that part work?

Mojtaba Sarooghi 00:22:34 Our back end is mainly C#. Not just mainly, I can say 95% is C#, in .NET. We have different services. As you mentioned, there is one group of services that tracks the user’s place in the waiting room and renders it. And there are a couple of services that do the calculation for the waiting room itself: how many people should be redirected right now. And again, the complexity here is that we are promising to redirect X per minute, so numbers hundred to hundred plus X should be redirected in the next minute. However, in reality those hundred to hundred plus X might not be ready to get redirected. Why? Because a visitor is looking at another tab or has closed his phone. We still want to get that flow. So, there is some kind of calculation in that service as well.

Mojtaba Sarooghi 00:23:30 There are two different services. The other one looks at how many people are ready to get redirected, and based on that we set what we call the open window, meaning the window in which we can redirect. Imagine that the outflow is X. We don’t say that the open window is fixed at X; we say it might go to 2X, because there were not enough people to get redirected in the previous minute. So, there is some kind of calculation on that one based on the historic data. So, these two responsibilities are separate from each other, as you mentioned, but the language for all of them is C#.
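
One way to picture the open window is as the promised outflow plus whatever was missed in the previous minute, capped at twice the outflow. The sketch below is a deliberate simplification: the transcript says the real calculation uses historic data, while this hypothetical `open_window` function looks only at the previous minute.

```python
def open_window(outflow_per_minute, redirected_last_minute, max_multiplier=2.0):
    """Size of the next redirect window.

    Simplified assumption: make up for last minute's shortfall (no-shows),
    but never open the window wider than max_multiplier times the outflow.
    """
    shortfall = max(outflow_per_minute - redirected_last_minute, 0)
    cap = int(outflow_per_minute * max_multiplier)
    return min(outflow_per_minute + shortfall, cap)


# Outflow X = 100, but only 60 visitors were ready in the previous minute.
window = open_window(100, 60)
```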

Jeremy Jung 00:24:05 This is C# for the user-facing part. Is that using a framework like Microsoft’s ASP.NET?

Mojtaba Sarooghi 00:24:14 Yes, it is .NET Core, and it is hosted on Linux servers.

Jeremy Jung 00:24:21 And the part that is managing, I believe you called it the open window. How many users should be passed on to the origin or to the customer’s site? I would guess that there’s multiple instances of this service that needs to run and if that’s the case, how do they all manage this shared state they have to keep track of?

Mojtaba Sarooghi 00:24:42 Exactly, yes. Each of these services that we discussed, user facing, for example, CAPTCHA management, or the flow managers (those services, we call them flow managers), all of them are behind a load balancer. That means we have a couple of instances of each, and based on the scenario they can scale. We have two different approaches working right now. One is sharing the same storage, which could be the cache or Dynamo that I mentioned. So, they look at a specific row and do the calculation based on that row. Each of these services will say, okay, I received this amount of requests for redirect, the other one says, I received this amount, and they put that on the same row, and then one of them says that for the next minute we are going to redirect this amount. So that is one of them. The other approach is that the servers talk to each other through messaging. In this scenario one of the technologies we use is SNS in AWS, with some kind of update that says, okay, I received this, I received this, what should I do? And then everybody calculates locally based on all the information. So: point-to-point messaging or central storage to be able to communicate with each other.
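
The shared-row approach can be illustrated with an in-memory stand-in for the row. Nothing here calls DynamoDB or any AWS API; the class `SharedRow`, the node IDs, and `plan_next_minute` are hypothetical names used only to show several instances reporting counts to one place and one of them deciding the next minute’s redirects.

```python
import threading


class SharedRow:
    """In-memory stand-in for a single shared database row (illustrative only)."""

    def __init__(self):
        self._lock = threading.Lock()
        self._ready_by_node = {}

    def report_ready(self, node_id, ready_count):
        # Each flow manager instance writes how many redirect requests it received.
        with self._lock:
            self._ready_by_node[node_id] = ready_count

    def total_ready(self):
        with self._lock:
            return sum(self._ready_by_node.values())


def plan_next_minute(row, open_window):
    """One instance reads the row and decides how many visitors to redirect."""
    return min(row.total_ready(), open_window)


row = SharedRow()
row.report_ready("flow-manager-1", 40)
row.report_ready("flow-manager-2", 90)
to_redirect = plan_next_minute(row, open_window=100)
```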

Jeremy Jung 00:25:51 SNS. It’s Simple…

Mojtaba Sarooghi 00:25:53 Simple Notification Service.

Jeremy Jung 00:25:54 …Notification Service. Okay.

Mojtaba Sarooghi 00:25:55 Exactly. So, put simply, it is like a message bus, though you could say not a traditional one: you publish an update, and it goes out to all the nodes.

Jeremy Jung 00:26:07 And something I believe I’ve heard about DynamoDB is that it’s considered eventually consistent. Is that correct?

Mojtaba Sarooghi 00:26:14 Exactly, yes.

Jeremy Jung 00:26:15 How does that factor into your architecture? Does that ever become an issue?

Mojtaba Sarooghi 00:26:20 Actually this is a really interesting topic, and I would say that one of the design practices that we have here is about this eventual consistency. I would say that the type of business that we work in and design a product for is really important. Before I joined Queue-it, I was working on, you could say, insurance or banking systems. When I moved to Queue-it and started to develop the engine, I was talking to a colleague of mine about, wow, you don’t need to have the full transaction whenever you write. I said, for sure we don’t, because I need to support a hundred thousand requests per second. That’s impossible. So that is an important fact here. We don’t need all the nodes to know exactly what is happening at a specific point, because we have this tolerance: okay, one node is one second behind, another 500 milliseconds, and they can get updated after a second.

Mojtaba Sarooghi 00:27:18 So this factor is heavily used in the design of our system, and not just this one. There is also, for example, doing back off from the client. These are not the same thing, eventual consistency and back off; they are different things. But what I want to mention here is that when we design a product for a specific business, there is some tolerance that we can use: tolerance of consistency, tolerance of time. For a human being, 50 milliseconds or a hundred milliseconds is not a big factor. So, if the traffic is really high, in our design we need to design for failure. If we think that our servers might get overcrowded, why not do a back off, for example, on the client side, or why not use eventual consistency? So, for us it has worked pretty well, and we didn’t see any negative impact on the business side.
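
Client-side back off is commonly implemented as exponential delays with random jitter, so that many waiting clients do not all poll again at the same instant. The transcript only says that the client backs off; the exponential-with-jitter scheme and the name `backoff_delays` below are assumptions for illustration.

```python
import random


def backoff_delays(base_seconds, cap_seconds, attempts, rng=None):
    """Delays (in seconds) before each retry, using exponential back off with jitter.

    The ceiling doubles on every attempt up to cap_seconds, and the actual
    delay is drawn uniformly between 0 and that ceiling.
    """
    if rng is None:
        rng = random.Random()
    delays = []
    for attempt in range(attempts):
        ceiling = min(cap_seconds, base_seconds * (2 ** attempt))
        delays.append(rng.uniform(0, ceiling))
    return delays


# A polling client that keeps getting "server busy" would wait these long between tries.
delays = backoff_delays(base_seconds=1.0, cap_seconds=30.0, attempts=8, rng=random.Random(7))
```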

Jeremy Jung 00:28:19 I would say it sounds like it may be because of the part of the problem you’re trying to solve. If you look at the customer’s side of somebody buying a ticket for example, if there’s no tickets left but somebody’s able to buy a ticket that requires that kind of consistency. But since you’re letting people into the place to buy and not actually doing the buying and selling, maybe that lets you sidestep that part.

Mojtaba Sarooghi 00:28:43 You have a point on that one. Yeah, for example, if we are one second behind or one second ahead does not change anything but on the ticket part you cannot be like that.

Jeremy Jung 00:28:54 In a worst case, maybe somebody is in the waiting room, they get to the part where they can buy the ticket, but the customer’s site will just say, well, I’m sorry we got you in but there’s really no more tickets left.

Mojtaba Sarooghi 00:29:05 Exactly, that is 100% correct. Yeah, because we use the time factor and we smooth things out, the visitor also gets a good experience. And again, we are redirecting 10, 100, 200 at a time, so that is also a normal scenario, and a visitor might still see this. They had this experience of going through the process.

Jeremy Jung 00:29:29 Yeah and on their end that experience of knowing, okay, I’m in a line getting some information of okay, tickets are being sold, maybe you’re even telling them, hey there’s not that many left. So, if they do get to the end and there’s a problem, at least they had some amount of information on what was happening.

Mojtaba Sarooghi 00:29:48 That’s correct. Yes, exactly.

Jeremy Jung 00:29:50 You mentioned CAPTCHAs as an example. Are there other parts of the system that are checking to make sure that this is a real person as you’re going through this queue?

Mojtaba Sarooghi 00:30:03 Exactly. This is a really hot topic, I think, for all businesses nowadays: fighting the bad actors, and how you make sure that the right actor is buying the product. We need to make sure the right actor is waiting in the waiting room, and we fight this at different levels. As I mentioned, one of them is, for example, what we call invites-only waiting rooms. So, users that have a good profile on the customer website are allowed to join a waiting room. We give this responsibility to our customer and work with them: these people have a good profile on your side, so sign a ticket for them. We let only people with a signature from you join our waiting room. That is one way of doing it. We also have different partners that we work with on bot recognition.

Mojtaba Sarooghi 00:30:52 Recently we launched a new product, we call it HEP, with one of our partners, the Akamai bot team. We work together so that they will try to tell us, oh, this traffic initiated from this specific session or visitor is a bad actor. That would be one of them. We can also have different challenges on entering the waiting room. Some people say, okay, a CAPTCHA is friction. That’s correct, it is friction, but if it can recognize who is bad and who is good, it is valuable not only for us; it’s valuable for our visitors, because there is a limited number of products to sell. The person who is interested in that product is willing to spend a minute to solve, for example, a CAPTCHA, and then he gets the product, not the bad actor. So, in our scenario this is a kind of use case that we have.

Mojtaba Sarooghi 00:31:43 We also have a machine CAPTCHA, which shows that it is not just a bot trying to hit the endpoint. It needs to have some kind of capability to solve that machine CAPTCHA. We say: make it expensive for the bot, so it is not easy for them to join the waiting room. We also have what we call traffic access rules, a way for our customer to come and say, for this specific IP address, I don’t want them to join the waiting room. So, with the combination of these tools, we try to protect our customers, waiting rooms, and real visitors from bad actors getting the advantage. And I can say that, based on the numbers, in some waiting rooms, in a specific business, more than 98% is scalper or bad activity. So, when we look at the data, how many queue IDs we issued and then how many of them we blocked, it is more than 98%.
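
A traffic access rule of the “this IP address may not join” kind can be sketched with Python’s standard `ipaddress` module. The blocked networks below are documentation-reserved example ranges, and `may_join_waiting_room` is a hypothetical name; the transcript does not describe how the real rules are expressed.

```python
import ipaddress

# Hypothetical customer-defined rules, using documentation-only address ranges.
BLOCKED_NETWORKS = [
    ipaddress.ip_network("203.0.113.0/24"),
    ipaddress.ip_network("198.51.100.7/32"),
]


def may_join_waiting_room(client_ip):
    """Return False when the client IP falls inside any blocked network."""
    address = ipaddress.ip_address(client_ip)
    return not any(address in network for network in BLOCKED_NETWORKS)


allowed = may_join_waiting_room("192.0.2.1")
blocked = may_join_waiting_room("203.0.113.9")
```

An IP rule like this is only one layer; the conversation describes combining it with CAPTCHAs, invite keys, and partner bot detection.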

Jeremy Jung 00:32:44 And so in that scenario almost all of it is bot traffic it sounds like. Is that pretty common for these events?

Mojtaba Sarooghi 00:32:51 It is, actually. It is especially interesting for that. Imagine that there is a brand that’s selling a specific card, I don’t know, a specific sneaker, or even tickets, because the bots will buy those and then sell them on the open market, for example. Or a really interested person wants to buy more than they should. So, we are seeing this as a real driver for using the waiting room itself, which brings fairness to the queue. Not everybody who sends the most requests buys first. Take the example that you mentioned at the beginning: imagine I use a simple script that does add to cart fast and then scripts the whole process. If the sale opens now and I make the first call, I will get the product and the others cannot. But putting this kind of journey in front of that, first of all, gives the bot protection providers enough time to recognize who is good and who is bad. It’s not transactional anymore; now you need to go through a journey, so the intelligence behind these bot protection tools can help figure out who is good and who is bad. And also, just by being fast or having a lot of compute power, you cannot purchase ahead of a normal person who is interested in that specific product.

Jeremy Jung 00:34:16 Ah, okay. So, the queue itself, that process of having a set time users join before the actual sale occurs gives you this window of time, whether that’s 10 minutes or 20 minutes for you to put users into a queue but then while they’re in the queue, try and determine are they a real user?

Mojtaba Sarooghi 00:34:37 Exactly. Actually, you pointed to one of the main reasons for what we did with the partner. It was not only good for us, it was also good for the other party, the bot protection, because this journey gives the possibility and the time. And also imagine, in this scenario you can put up a CAPTCHA and people willingly solve that CAPTCHA. Those who don’t want to solve it don’t get in, and the people who solve it don’t complain, because they’re interested; they are happier to prove that they are real.

Jeremy Jung 00:35:05 Are there other examples? I think you mentioned there might be certain IP ranges that you decide, okay, these are probably bots. The other example was you present a CAPTCHA, they don’t solve it, they’re probably a bot. Are there other examples of things you look at when you’re deciding whether or not someone’s a genuine user?

Mojtaba Sarooghi 00:35:25 Yeah, on the partner side they have a lot of behavioral telemetry: whether your mouse is moving or not moving, how fast I am solving the CAPTCHA, TLS fingerprints. All that telemetry gives a signature of how the client side is behaving. We also have our part. For example, we know this endpoint should not get more than this amount of requests, or the request that comes should have this payload, and if the payload is not there, it is not a correct one. And then you can also think of some kind of dynamic evaluation, where you send a new version and change something there. The bots need to adapt to the new one. So, if they are sending the old payload, you understand that this is not a real user, because real users don’t record and replay. So, there are these capabilities because they have to go through this time and also the steps. It gives us the possibility to distinguish between good and bad actors.

Jeremy Jung 00:36:25 And I think you explained there’s maybe two different parts. There’s this part that’s in front that has the bot detection and then there’s your own queue that that has some form of bot detection. How do you decide what parts should be at the partner level and which parts should be done within your own application?

Mojtaba Sarooghi 00:36:45 That’s a good question. Right now, what we are thinking is that these need to be woven into each other. It’s not just two separate systems working. We need to provide some kind of information to the partner, and the partner gives us some signals that we react on. So, it is more like that. If they are separated, just for simplicity, think of a WAF scenario. You set a number of requests that can go to that endpoint, and you block anything more than that. In this scenario, when we are doing it on our end, we know that a specific endpoint for a specific session cannot have more than five. That is what we do. In the partner scenario, if these two are separated, the partner knows what kind of IP ranges are from data centers, for example, and they can block them before they even get to us.
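
The rule mentioned here, that a specific endpoint for a specific session cannot have more than five requests, can be illustrated with a small in-memory counter. This is a sketch under assumptions: the class name, the endpoint path used in the usage note, and the lack of any time window or shared storage are simplifications, and a real deployment would need both.

```python
from collections import defaultdict


class SessionEndpointLimiter:
    """Counts requests per (session, endpoint) and rejects any over the cap."""

    def __init__(self, max_requests: int = 5):
        self.max_requests = max_requests
        self._counts = defaultdict(int)  # (session_id, endpoint) -> count

    def allow(self, session_id: str, endpoint: str) -> bool:
        key = (session_id, endpoint)
        self._counts[key] += 1
        return self._counts[key] <= self.max_requests
```

With the default cap, the first five calls to `allow("s1", "/enqueue")` return `True` and the sixth returns `False`, while a different session calling the same endpoint is unaffected.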

Mojtaba Sarooghi 00:37:46 But the path that we are working on in this specific HEP scenario is more like: we provide the environment for the partner, and they give us the signal for blocking. We give the environment and the time and all those specifics. We say, oh, this endpoint is important, look at this endpoint, or this endpoint can be called multiple times. Then the partner detects based on all their logic and gives us a signal, and we block. If that is not in the picture, then we use our own bot detection tool. And we always say, sure, we do help you on this one, but bring some kind of bot detector in place as well, because it’s always better to have two than one.

Jeremy Jung 00:38:34 Yeah and the example you gave of potentially 98% of the traffic being bots that it sounds like this almost becomes a requirement in a lot of cases where you can’t sell anything unless you have some way of stopping bots.

Mojtaba Sarooghi 00:38:48 Yeah, I would like to invite you to our product group, because what you are mentioning is exactly what we are saying: this is part of the product that we need to provide. One of our main slogans, I think it’s even part of our company code, is that we bring fairness to the visitor’s user journey. And if we cannot prevent bad actors from buying that product, we cannot bring that fairness in.

Jeremy Jung 00:39:21 Yeah, it’s almost like you provide this queue for people to line up in but if you can’t also guarantee that that queue isn’t just full of bots, then it’s sort of like well what is this queue for?

Mojtaba Sarooghi 00:39:34 True, true. That is the reason for the business. So, it is really important for us to be able to handle that scenario, and it’s not an easy thing. Yeah, it is like arm wrestling: we try to innovate, the bots also try to innovate, and then we go head-to-head. Hopefully we are the winner, and we try to be the winner on this.

Jeremy Jung 00:39:56 Yeah, it’s interesting that you mention how high the percentage of bots is, because I think there’s a perception from a lot of users when they go to buy a ticket for a popular concert or a popular product and they have a bad experience and can’t get that item, like the site is frozen or it’s just loading. I think almost universally people say, well, the bots got all of them, that’s why I couldn’t buy it. And sometimes what a user thinks is not always correct, but it sounds like in this case maybe it really is correct.

Mojtaba Sarooghi 00:40:30 In some scenarios it is, yeah. It is about how much compute power you have to access that endpoint. I even saw a scenario, I was talking to some companies that wanted to be our customers, where the bots would try to crash the server, so the normal people would just say, okay, I cannot buy, and then the bots would go and buy. Or we saw a scenario where people put the items in the cart and block everyone else: other users cannot put anything in the cart because the system says it has nothing remaining in stock. So, there are a lot of scenarios that are part of the bot problem. I have seen scenarios where, when you open up the sale, it is gone after one minute, less than one minute, because all the bots are ready. They are polling, calling that endpoint from the time it starts. By the time I, as a user, click add to cart, they have already done add to cart and already gone through the purchase flow. Everything is scripted: do this, add to cart, go to purchase, put in my information, ship the product here. So, some part of it is the bots, most of it, and the other part is the number of people who are interested in that product, and the server cannot answer.

Jeremy Jung 00:41:52 For that second part where the bots are onto the customer’s site and adding things to cart and checking out. Queue-it is supposed to be in front of that part. But for the customer’s origin, for their own site, are there things that you recommend they do to account for that or is it more you think you put all that protection into the queue and then you don’t put that into the actual origin?

Mojtaba Sarooghi 00:42:19 No, actually we are part of that as well. One of the things here is that the protection should not be only on the main page. Imagine you send everybody to the queue on the main page, but add to cart is not protected. We have a way for you to select what you want to protect. If you choose to protect URL XYZ but not add to cart XYZ, then you are actually limiting the real users, because the real user goes and waits and the bots bypass the queue. So, it’s important how your setup is and what protection plan you have. I recall a scenario with one of our customers where one way of doing the cart update was protected but not the other. So, the bots figured out that they could make a purchase through another flow. Or imagine that you have two different websites that in the end both lead to the same purchase.

Mojtaba Sarooghi 00:43:15 They had the older version; they forgot about it, so it was still there. So, it is pretty important that all your ends are protected. It is also important for us because, in the end, we are helping our customers and we are part of the user journey to bring this good user experience. On the customer website, we have a static and also a dynamic way of making sure that a session is a valid session to make a purchase. For example, the customer can prevent repurchasing, so everyone can buy just one ticket, or other scenarios from that perspective. But no, the answer is that we are partially responsible for the rest of the whole user journey as well.

Jeremy Jung 00:44:00 That’s also an interesting point, where you were saying how there are a lot of parts, like these main pages, where you don’t necessarily want to stop the user, because if I go to the origin, to the user’s page, and I get blocked with a CAPTCHA or something and I’m not even at the point where I’m going to buy yet, I might just give up. And so, there’s this balance of I need these ways of blocking the bots, but at the same time I don’t want to make real users give up. Whereas the bots, they go, well, there’s this CAPTCHA I need to solve, but it’s a bot so it’s totally fine.

Mojtaba Sarooghi 00:44:38 Yeah, that’s correct. This is also a good part of this approach: if you protect a specific part that is related to this specific interesting product, all the people who are shown a CAPTCHA in the waiting room are willing to solve it because they want to wait. So, there’s also another point, that this makes sense for those hot items or hype items.

Jeremy Jung 00:45:01 Oh okay. So, if I’m a website selling products, maybe most of my products they don’t get a whole lot of traffic but a new game console comes out and everyone’s just hitting that URL whether it’s bots or people. So, the rest of my site I may not be putting those people in a queue because the traffic there is normal. I want them to be able to buy things normally it’s just that I’ll put this queue in front of these specific products that I know are going to be a problem.

Mojtaba Sarooghi 00:45:34 Exactly. Correct. And usually what a customer does, and it is like your example, actually I was working with a customer on this, is that we have these two protections. We say: put a high outflow for all the other products. With the high outflow you usually do not see a waiting room, because your traffic is less than that. Then put a lower outflow for a specific product, like the gaming console that you mentioned, and people wait until they can buy: they solve the CAPTCHA and go through that process. So, that 24/7 protection with the higher outflow on the main site helps keep your main website from crashing at the time of the sale. Maybe people will get confused and go to all the other parts of the website, so there is still high traffic, but they recognize that, okay, I don’t want to do that now, or they continue. But on the specific product you go to another queue with a lower throughput and all the randomization and CAPTCHA, because you want to buy that product.

Jeremy Jung 00:46:37 So it’s something about Queue-it is because it is in front of a lot of the interactions that a user’s going to have, it’s very important that it stays running and if it goes down then you’re really stopping the customer from being able to sell whatever it is they’re trying to sell. Given that every application is going to have incidents or downtime. And so, I wonder if you can talk through maybe an example of when you had an incident and what the cause was and how you dealt with that to address it in the future?

Mojtaba Sarooghi 00:47:18 Oh my God, this is really a hot topic, a hard topic. Yeah. First of all, when we sit down together, the developers and the department, we say that the one main thing we should do is be reliable. A reliable product is what we need to deliver. We are 24/7, as you pointed out. This is the most important part. And when you say, okay, reliability is important, then you design for it: design for failure. Simplicity is really important. Don’t create a complicated web service, because it cannot scale. Don’t do a lot of I/O, don’t call the database if you can avoid calling the database, do a lot of testing, all that stuff. You are preparing a system that is highly available, reliable, and can scale. But as you mentioned, there are scenarios where you might get some kind of incident. For that, we design some parts so that it is possible to move the infrastructure across different logical boundaries.

Mojtaba Sarooghi 00:48:21 What does that mean? Imagine the waiting room is here, and the service behind it is getting a huge DDoS attack, and that endpoint, those IPs, or even the load balancer cannot stay alive, because whatever server you add will crash at the point of the TLS handshake. Something like that, or the traffic is so huge that the whole network cannot cope. What we do is ask: okay, can we move this traffic somewhere else, or can we move the good traffic somewhere else that is healthy? That was actually one of the incidents that we worked on. We were under a really huge load until we updated our WAF and pointed all of the good traffic somewhere else. So, the bad traffic was hitting somewhere where it was not an issue.

Mojtaba Sarooghi 00:49:13 Even with servers failing there, it was not causing an issue for the good traffic at least. It is hard to recognize who is good and who is bad, but by playing with that endpoint you can at least divert the traffic and bring up new servers. My server here is down, so now I bring up a totally fresh server that can handle more. All the deadlocks, if there are any here, and all the stale connections are replaced by new ones created in the new environment. That is how we try to handle this, you would say.

Jeremy Jung 00:49:51 In that example you’re saying you got a really large amount of load. Can you just give an example? What is that load? What’s considered large from your…

Mojtaba Sarooghi 00:50:01 I’m talking about when the traffic spike comes. Traffic spikes should come into the system gradually, yeah, if it’s new users. Usually our system is over-provisioned and can handle, I don’t know, a 10X factor, and then there is scaling: when that comes into the picture, it takes one, two, three minutes to bring up servers. Yeah? Pretty fast. So, you are prepared for 10X, and your servers can scale every two minutes. If traffic comes in at 2X more, then by the time it gets to 10X you already have new servers in place. But imagine that the traffic goes from a hundred thousand to a hundred million. That is not a hundred thousand times 10, it is a hundred thousand times a thousand. Yeah? The system cannot scale that fast in the specific scenario that I’m mentioning. When the servers come up, before they finish loading and try to take traffic, they die again.
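
The arithmetic behind this point can be made concrete. The sketch below uses the figures from the conversation (10X headroom, a scaling step roughly every two minutes, a jump from a hundred thousand to a hundred million, which is 1000X) and adds one assumption that is not in the transcript: that each scaling step doubles capacity. The function name and parameters are hypothetical.

```python
def minutes_to_catch_up(headroom_factor: float, spike_factor: float,
                        scale_interval_min: float = 2.0,
                        growth_per_step: float = 2.0) -> float:
    """Minutes of scaling needed before capacity covers the spike.

    Assumes capacity multiplies by growth_per_step at every scaling step,
    which is an illustrative model, not a measured property of any system.
    """
    if growth_per_step <= 1.0:
        raise ValueError("growth_per_step must be greater than 1")
    capacity = headroom_factor
    minutes = 0.0
    while capacity < spike_factor:
        capacity *= growth_per_step
        minutes += scale_interval_min
    return minutes
```

Under these assumptions, `minutes_to_catch_up(10, 1000)` needs seven doublings (10, 20, 40, 80, 160, 320, 640, 1280), so 14 minutes, while a spike that arrives within one minute gives the system no such time. A spike inside the headroom, such as `minutes_to_catch_up(10, 5)`, needs none.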

Mojtaba Sarooghi 00:51:01 So what we do is redirect the traffic to a new environment. We create a totally new environment with, imagine, a hundred servers, and when they are in a stable state, we point the traffic to that environment. Doing that helped us get rid of that startup problem, at least in the scenario we had. Until the servers came up and the cache got warm, there was a period in which they would crash, because the traffic wouldn’t let them warm up. So, we warmed them up before putting them behind the load balancer, and then we directed traffic to them. That was something pretty complicated in that scenario.

Jeremy Jung 00:51:43 When you’re talking about warming up, is that the runtime or what part needs to be warmed up?

Mojtaba Sarooghi 00:51:48 In the scenario that I’m specifically talking about, it was related to, what is the word for that, how trustworthy a visitor session is. Yeah. For the bot protection. You create a cache that knows this specific visitor has this level of trustability, and the other one has that level of trustability. We would fetch this information into the servers so they could start fast in that scenario, and it would take something like one or two minutes to warm that up. We changed the design later on, but that was how the design was done. So, when we added a new server, it would take three minutes for that server to be able to serve responses. There is already huge traffic, and these servers are coming up and not yet able to handle that traffic.

Mojtaba Sarooghi 00:52:46 At the same time, they needed to load the visitor score data into memory. This would cause the servers themselves to crash. What we did is that we brought them up without adding them behind this huge traffic and the load balancer. So, behind the new load balancer they were safe, with no traffic. They came up in three minutes, the data was on the servers, and then we pointed the traffic to them. That brought the system back up. But it was not as easy as I’m explaining, because it took us some time to make that decision, and it has to be made pretty fast, because if the end user is seeing that the CAPTCHA cannot be generated or something like that, it prevents the event from going forward. And as you mentioned, we call it a critical incident when it is about the visitor experience, because it means that we are disturbing the customer’s sales on their most important days, when a real hype sale is going on.
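
The recovery step described here, warming servers first and only then putting them behind the load balancer, can be sketched as an ordering constraint. The classes and names below (`Server`, `LoadBalancer`, `bring_up_environment`, the `load_scores` callback) are hypothetical stand-ins for illustration and do not correspond to any AWS or Queue-it API.

```python
from typing import Callable, Dict, Iterable, List


class Server:
    def __init__(self, name: str):
        self.name = name
        self.score_cache: Dict[str, float] = {}
        self.warm = False

    def warm_up(self, load_scores: Callable[[], Dict[str, float]]) -> None:
        """Load visitor scores into memory while receiving no traffic."""
        self.score_cache = dict(load_scores())
        self.warm = True


class LoadBalancer:
    def __init__(self) -> None:
        self.targets: List[Server] = []

    def register(self, server: Server) -> None:
        # Refuse cold servers so traffic never interrupts the warm-up.
        if not server.warm:
            raise RuntimeError(f"{server.name} is not warm yet")
        self.targets.append(server)


def bring_up_environment(names: Iterable[str],
                         load_scores: Callable[[], Dict[str, float]]) -> LoadBalancer:
    """Build a fresh environment: warm every server, then register it."""
    balancer = LoadBalancer()
    for name in names:
        server = Server(name)
        server.warm_up(load_scores)
        balancer.register(server)
    return balancer
```

Only after `bring_up_environment` returns would traffic be pointed at the new load balancer, which mirrors the sequence in the incident: servers up, data loaded, then traffic.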

Jeremy Jung 00:53:47 Oh, I see. So that warmup is your services when they first start, they need to retrieve data maybe from Dynamo, maybe from some other store to store in memory to be ready to process the users in the queue. In your production instances, they were getting too much traffic to even get to that point. Like you would try to auto scale to create a new instance. It tries to get the information it needs so that it’s ready to handle traffic, but it’s getting too many requests so it just crashes.

Mojtaba Sarooghi 00:54:23 Exactly. The requests to get the data plus the requests to answer meant that we were having thread starvation. I would start a thread to get the response for my cache, but all my threads were answering the incoming requests. There was no thread left to get the answer from the storage part. So that was causing an issue for us.

Jeremy Jung 00:54:43 I see. So, the amount of traffic increased much faster than you anticipated. Yes. It’s not so much that there were a hundred million connections or a hundred million attempts, it’s that it went from maybe zero to a hundred too quickly.

Mojtaba Sarooghi 00:54:58 Exactly. Yes. That was the case. Again, we could have handled it if the system had been designed without that cold start. And that is also what we changed after that scenario. Now we are going to that eventual consistency part again. For example, in the revised design we said, okay, if you don’t have information about this score, maybe it’s not really important on the first request. Just wait and make that decision maybe on the 10th request, once you have gradually got the data that you need to make that decision. Don’t insist that, okay, you don’t have the data, so you first need to get warm. No, you can answer the client and say, okay, I don’t have the data, sorry, wait for me until I get my data, instead of waiting to get that data and then answering.
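
The revised behavior, answering immediately and deferring the trust decision until the score is available, can be sketched like this. The function name, the return values, and the 0.5 threshold are assumptions for illustration only.

```python
from typing import Dict, Optional


def decide(visitor_id: str, score_cache: Dict[str, float],
           threshold: float = 0.5) -> str:
    """Return 'allow', 'block', or 'wait' without blocking on a cold cache."""
    score: Optional[float] = score_cache.get(visitor_id)
    if score is None:
        # No data yet: keep the visitor waiting in the queue and decide on a
        # later request, instead of stalling this one to fetch the score.
        return "wait"
    return "allow" if score >= threshold else "block"
```

Because the visitor is waiting in the queue anyway, returning `"wait"` costs them nothing, and the server can fill its cache gradually in the background.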

Jeremy Jung 00:55:43 It’s almost like a form of, I’m not sure if this is the right term, but graceful degradation of I can’t get the most current information or the best information, but I’ll at least do something with what I have.

Mojtaba Sarooghi 00:55:55 Exactly. And that is part of the system design. I would say that is the most important part. You look at the business; that is at least what I learned during these 10 years of handling this huge traffic with all the limitations we have. In the end, we have limited resources and a limit on how fast they can scale. So, you need to find where there is tolerance in the business. I can answer the client and make the decision on the next request, instead of blocking this request and telling the person that it is a bad request. I can do it next time. Anyway, the person is waiting in the waiting room, so I don’t need to act on this specific request. So, as you mentioned, it is kind of a fallback in this scenario.

Jeremy Jung 00:56:41 And this incident, I’m curious how did it get handled in terms of, did somebody on your team get a notification that there’s a bunch of users trying to get through the queue? Do they get notified and then do you all get together in some kind of chat room? How does this get managed?

Mojtaba Sarooghi 00:56:59 It is actually like what you are mentioning. We have this dev duty team, people who always have this alarm on their phone. Their phone needs to be on, and they get a notification. We also have alarms on all the load balancers for how much traffic we have per minute. What is normal? What is not normal? Because you might expect this kind of traffic when there is a really hot event, and then that is normal traffic. But when it jumps within one minute, going from a hundred thousand to a hundred million for example, you understand something is wrong. And then we also have response time and 5XXs. We monitor all of those. So, when there is a huge number of 5XXs or when you have high latency, you understand the system is not healthy enough.
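
The three signals mentioned here (a sudden jump in requests per minute, the share of 5XX responses, and latency) can be combined into a simple health check. The thresholds, field names, and alert labels below are hypothetical; the transcript does not give the values Queue-it alarms on.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class MinuteStats:
    requests: int
    errors_5xx: int
    p95_latency_ms: float


def alerts(previous: MinuteStats, current: MinuteStats,
           max_growth: float = 10.0, max_error_ratio: float = 0.05,
           max_latency_ms: float = 2000.0) -> List[str]:
    """Compare two consecutive minutes and list the reasons to page someone."""
    reasons: List[str] = []
    if previous.requests > 0 and current.requests / previous.requests > max_growth:
        reasons.append("traffic_jump")
    if current.requests > 0 and current.errors_5xx / current.requests > max_error_ratio:
        reasons.append("high_5xx")
    if current.p95_latency_ms > max_latency_ms:
        reasons.append("high_latency")
    return reasons
```

Comparing against the previous minute rather than a fixed ceiling reflects the point made above: high traffic during a hot event can be normal, and it is the jump that signals a problem.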

Mojtaba Sarooghi 00:57:47 So the health monitoring is there, and when you get this alert, you try to look up what happened. In this scenario, we have an incident group: a room is created, and somebody takes the incident management role to inform the stakeholders. In this scenario, it really got escalated to the higher level: right now there is this huge amount of traffic, how many end users are affected, how many customers are affected. It is not a fun day, or fun times. Different people, like the platform people, look at the health of the load balancer and what we can do. One group of our colleagues brought up the backup environment with warmed-up servers. When that was ready, we pointed to the new environment instead of the old environment. We had designed the system in a way that you can divert the traffic to another environment, so that really helped us. Generally, we have this in our system: it should be resilient in the sense that we can move the traffic to other servers. But it was pretty tough, I would say, because when there is this kind of incident, it’s not just that specific sale that might get affected. It might affect the other sales as well, which is not the best situation.

Jeremy Jung 00:59:10 And starting from getting that notification to resolving the incident, it sounds like you have to determine what went wrong. You have to create this other environment, you have to configure it to use that environment and make sure everything is working. Can you give a sense of how much time that is between knowing there’s a problem to the resolution and what the end user actually saw or experienced during that time?

Mojtaba Sarooghi 00:59:39 It is different, depending on the scenario. In this specific one, as far as I remember, it took us something between 30 and 40 minutes to put the system totally back in a healthy state. During this time, in the first couple of minutes, we had quite a high number of failures. On the JavaScript side, what the end user sees is different. Again, this is part of the design; we call it a backup or fallback on the client side. Our JavaScript makes a call to the server, and if the server cannot answer, it just says, okay, wait more. That is also a design practice that we follow. Again, this is a possibility that the system gives us: okay, a user is engaged and he wants to be redirected right now. What if he is redirected two seconds later? Or what will happen if the data that is shown about when your turn will come is not the latest?

Mojtaba Sarooghi 01:00:31 For example, imagine I’m showing you that you are number 100, but I should show that you are number 98. This is not a big deal. I couldn’t update the data, so on the client side I can degrade gracefully and show the old data instead of the newest data. Then there is the question of what happens on the first request. In that scenario, we had some users who saw 5XXs in the first five minutes, until we figured out what was going on, because the system also needs to understand that it should not return exactly what it was returning before, because it is under load. So, during that time we were showing some 5XXs from the server. That is the worst for us.
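
The client-side fallback described here, showing the last known position when a refresh fails, can be sketched as follows. The real client is JavaScript; this sketch uses Python only to illustrate the logic, and the class name, messages, and `fetch_position` callback are assumptions.

```python
from typing import Callable, Optional


class QueueView:
    """Keeps the last known queue position and falls back to it on failure."""

    def __init__(self) -> None:
        self.last_position: Optional[int] = None

    def refresh(self, fetch_position: Callable[[], int]) -> str:
        try:
            self.last_position = fetch_position()
        except Exception:
            # Server did not answer: keep the stale value and try again later.
            pass
        if self.last_position is None:
            return "Please wait"
        return f"You are number {self.last_position}"
```

If a refresh that returned 100 is followed by a failed refresh, the visitor still sees "You are number 100", which is slightly stale but not an error page. A failure on the very first request has no stale value to fall back on, which is why that case is the one singled out as the worst.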

Mojtaba Sarooghi 01:01:21 Especially if this is the first page. Because if the first page gets that error, it means that you are presented with this bad user experience. When the user presses F5, he gets the page. But just imagine that you are waiting for a ticket and suddenly you see a 5XX; something has happened. Some 5XXs were returned to the bots. We are not upset about those. After the postmortem, we also looked at how many of them were most probably for bad actors. Because we had the requests per IP address, we could understand that, oh, this is fine, this one was returned to a bad actor, and the other one went to real users. But we had some degradation, especially during those five minutes, for some of the requests, because again you are behind the load balancer. If your request hits an unhealthy server, you get the issue. If it hits a good server, you are fine. For those who were not pressing F5, who already had their state on the page, the client side handled it gracefully and did not show any issue.

Jeremy Jung 01:02:29 Yeah, just showing them in the queue. And maybe the position’s not changing or maybe it’s behind, but to those users they may not even be aware something is happening. That you’re busily scrambling in the background trying to see what’s happening.

Mojtaba Sarooghi 01:02:46 Again, this is part of the design, because if we had not designed the client side in this way, the client side might also show a bad experience. But why do that if we have this possibility? And again, it is also part of our design that the first request needs to be served, for example, from a CDN that never fails. So even if I’m not returning a response, maybe I just show them: please wait, we are doing something. This is also what we learned after that incident; this is the direction we need to go. Never show the failure if you can recover in a short time. Because in the end, if I’m waiting one more minute, I’m fine. But if I’m told, oh, everything is on fire, I might even leave the queue and not do my purchase. It was an intermittent issue, and it happens to everybody. Yeah. You cannot always run without something breaking.

Jeremy Jung 01:03:44 And is the design such that, if the user was on the page and it just looked like they were in the queue normally, if they open the developer tools and look at the network tab, are they actually seeing that there are requests being made that are returning 5XXs?

Mojtaba Sarooghi 01:03:59 It could be, yeah, it could be. For example, if the JavaScript doesn’t log it, you don’t see it in the console, but if the request fails, you can see it in the network tab. And I think that is actually totally fine. We are not hiding that something has an issue. We are hiding the bad user experience. And I think that is still a valuable thing.

Jeremy Jung 01:04:22 For the normal user, that’s all that matters is what they see. And I think the opening the developer tools is more just if you’re a curious developer and you’re trying to get tickets for this event and you’re like, well what’s going on? Then, you might see some hints there.

Mojtaba Sarooghi 01:04:37 Exactly. But at the same time, yeah, we don’t, for example, redirect them before their turn. In this scenario, what we do is say that you need to wait, sorry, you need to wait longer than you should, because all the other people also need to wait anyway. If we were failing open, for example, I would say, okay, just redirect them. That would be another design. In some scenarios maybe that is the best design, because you don’t want to make people wait longer than they should, because one minute is important. But in this specific one, okay, if I’m waiting 25 minutes, I can wait 26 minutes. Again, it depends on the business. I call this business tolerance. When you understand the business and the user flow, you can design for that specific business.

Jeremy Jung 01:05:29 And in this specific case, earlier you mentioned how the amount of traffic spiked a lot more than you expected. You also said earlier how in some cases 98% of the traffic is bots. In this particular example, where most of the clients were getting these errors and trying to hit the site, was most of it bot traffic?

Mojtaba Sarooghi 01:05:52 That was correct, yes. But again, it is pretty important, because at the same time you have other sales going on, and in your infrastructure some parts are related to each other anyway. You should do it in a way that isolates this specific one and does not mess up the others. And then within this one, try to isolate the bad from the good. So, this is also part of the design of the system, which needs to be able to do that well.

Jeremy Jung 01:06:17 And if people want to learn more about what you’re working on or what’s going on with Queue-it, where should they head?

Mojtaba Sarooghi 01:06:24 We have Queue-it.com. All the resources that we have are there. We are also on GitHub, slash Queue-it, where we have all the open-source parts, like the connectors. And we also have a lot of podcasts as well. You are doing it professionally; we are also trying to do it on our end, and we would be happy to hear from the audience.

Jeremy Jung 01:06:46 Very cool. Well, Moji, this has been an interesting conversation. Thanks for taking the time.

Mojtaba Sarooghi 01:06:52 Thank you. And it was nice to be here with you.

Jeremy Jung 01:06:56 This was Jeremy Jung for Software Engineering Radio. Thanks for listening.

[End of Audio]