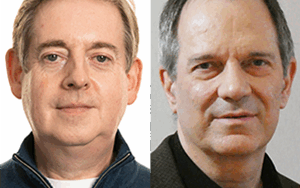

Jeremy Howard from fast.ai explains deep learning from concept to implementation. With transfer learning, individuals and small organizations can quickly get to work on machine learning problems using the open source fastai library and desktop graphics hardware. Jeremy and host Nate Black discuss neural network architecture and deep learning models, using pre-trained models from a “model zoo,” why coding ability and tenacity are key skills for success in deep learning, which tools are essential for practicing deep learning, creating a language model for natural language processing, and cool things you can do with validation sets. Jeremy also answers: What can you learn with the fast.ai course? Which parts of the pipeline will you learn to implement from scratch? Why are some portions of the fast.ai course and the fastai library moving from Python to Swift?

Show Notes

Related Links

- SE-Radio Episode 286: Katie Malone Intro to Machine Learning

- Fast.ai: a high-level deep learning library on top of PyTorch

- PyTorch: an open-source machine learning library

- Pandas: Python Data Analysis Library

- Keras: a high-level deep learning library on top of TensorFlow

- TensorFlow

- Universal Language Model Fine-tuning for Text Classification

- Accelerated active learning with Transfer Learning

- Jupyter Notebook

- Matplotlib

- Cyclical Learning Rates for Training Neural Networks

SE Radio theme: “Broken Reality” by Kevin MacLeod (incompetech.com — Licensed under Creative Commons: By Attribution 3.0)
