Jacob Visovatti and Conner Goodrum of Deepgram speak with host Kanchan Shringi about testing ML models for enterprise use and why it’s critical for product reliability and quality. They discuss the challenges of testing machine learning models in enterprise environments, especially in foundational AI contexts. The conversation particularly highlights the differences in testing needs between companies that build ML models from scratch and those that rely on existing infrastructure. Jacob and Conner describe how testing is more complex in ML systems due to unstructured inputs, varied data distribution, and real-time use cases, in contrast to traditional software testing frameworks such as the testing pyramid.

To address the difficulty of ensuring LLM quality, they advocate for iterative feedback loops, robust observability, and production-like testing environments. Both guests underscore that testing and quality assurance are interdisciplinary efforts that involve data scientists, ML engineers, software engineers, and product managers. Finally, this episode touches on the importance of synthetic data generation, fuzz testing, automated retraining pipelines, and responsible model deployment—especially when handling sensitive or regulated enterprise data.

Brought to you by IEEE Computer Society and IEEE Software magazine.

Show Notes

Related Episodes

- SE Radio 534: Andy Dang on AI / ML Observability

- SE Radio 610: Phillip Carter on Observability for Large Language Models

Other References

- Coval

- Your AI Product Needs Evals

- What We’ve Learned From A Year of Building with LLMs – Applied LLMs

- Unit Testing LLMs/RAG With DeepEval – LlamaIndex

Transcript

Transcript brought to you by IEEE Software magazine.

This transcript was automatically generated. To suggest improvements in the text, please contact [email protected] and include the episode number and URL.

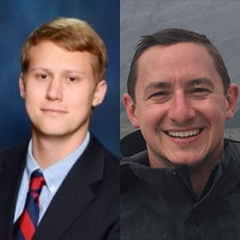

Kanchan Shringi 00:00:19 Hello all. Welcome to this episode of Software Engineering Radio. Our guests today are Conner Goodrum and Jacob Visovatti. Conner is a Senior Data Scientist and Jacob is Senior Engineering Manager at Deepgram. Deepgram is a foundational AI company specializing in voice technology and enabling advanced voice applications across many businesses and sectors, including healthcare and customer service. Deepgram solutions include conversational AI agents. Welcome to this show Conner and Jacob. Before we get started, is there anything you’d like to add to your bio, Conner?

Conner Goodrum 00:00:55 No, that about sums it up. Thanks very much for having me. Excited to talk today.

Kanchan Shringi 00:00:59 Jacob?

Jacob Visovatti 00:01:00 No, thank you. Likewise. Very excited to be here. Glad I’ve got my man Conner right alongside me.

Kanchan Shringi 00:01:05 Thank you. So our topic and our focus today is testing ML models for enterprise use cases, enterprise products. Just to set context, could you explain the relationship between a data science model, an ML model and an LLM?

Conner Goodrum 00:01:26 Well, I would say that everybody’s got their own vernacular about how all these things fit together. Largely the way that I consider them, an LLM is just one type of ML model and similarly we use data science approaches to train various types of models, one of which could be an LLM, but they all have their sort of specific use cases and applications.

Jacob Visovatti 00:01:47 Yeah, maybe just to build on that Conner, when we think about the field of data science, I guess I could say traditionally, even though it’s a relatively new discipline, I think we see a lot of initial applications that maybe grew almost out of the big data movement that was the key buzzword, what, 10, 15, 20 years ago, right? And we see things like teams of analysts inside a larger enterprise that are developing models maybe to forecast revenue growth across market segments. And we have generally well-structured inputs applied to a narrow range of questions and mostly for an internal audience. And of course there’s a lot of people doing great work there. And I don’t mean to oversimplify how complex that kind of work can be, it’s extremely hard stuff and forecasting revenues is pretty darn important for any company to get right. And I think what’s really interesting now and what I think provokes this kind of conversation is now we see the intense productization of those techniques at a greater scale, especially insofar as they more and more approximate human intelligence and therefore are justifiably called AI. So when we think about machine learning models in this context we’re thinking about things like accepting unstructured data, and the model output is no longer a limited set of results that are going to be curated and delivered in human time to a known audience, but it’s going to be delivered in real time to wide audiences with consumer focuses without any human in the loop checking on those results in the meantime, which of course exposes a whole host of concerns on the quality front.

Kanchan Shringi 00:03:23 Thanks for that Jacob. So I think that leads me to my next question. Given this expanded focus, is that what leads companies to think of themselves as an AI-first company or a foundational AI company and what is the relation between these two terms?

Jacob Visovatti 00:03:41 I think justifiably AI-first companies are those whose product really revolves around delivering value to their end customer through some kind of AI tooling. I think that the really useful designation or distinction that you brought up there is foundational versus not. So, there are a lot of “AI-first” companies that are delivering really cool products that are built on top of other more foundational technologies. And the difference between some of those companies that are doing really neat things and a company like Deepgram or other big players in the space, like OpenAI and Anthropic, is we’re developing new models from scratch, maybe influenced by what’s going on across the industry, informed by the latest developments in the research world, the academic world, but we’re essentially developing new things from scratch, empowering other people to build all sorts of applications on top of almost infrastructural AI pieces.

Kanchan Shringi 00:04:36 The kind of testing that a foundational AI company has to do is also different from what potentially an AI-first company that uses AI infrastructure would do and it would probably build upon the testing that a foundational AI company has in place. Is that a fair summarization?

Conner Goodrum 00:04:56 Absolutely. I would say in building upon other people’s models, it’s easy to sort of point the finger when something goes wrong and be able to say like, oh well we’re using this provider’s model to do this part of our software stack and therefore we can really only test inputs and outputs. Being on the foundational side, we really have the control to be able to go in and tweak parameters or adjust the model itself in an attempt to design problems out rather than working around them. And that’s a huge, huge advantage.

Jacob Visovatti 00:05:26 Yeah, I think a neat pattern that we’ve seen emerge is our customers are oftentimes AI-first companies and they’re building upon Deepgram as one of their foundational AI infrastructural pieces. But these are still AI companies offering AI-enabled products. And so the kind of testing that they do of our system is a certain kind of testing in the AI world. One of the most common ways we see this when we’re talking with potential customers is the bake-off, the classic bake-off that’s been in practice for decades across so many industries, where they take their actual production audio that they might want to turn into text and run it through different providers. And despite what you may read about these different providers and whatever benchmarks they’ve previously published, really the thing that matters most for these customers is how do the different options you’re comparing do on your production audio, not on the benchmark set that you read a paper on, but how does it work for your customers? This is actually a place where we try to really thrive because we play hard and compete hard in the customization space and try to work with our customers in a really high-touch way. But that’s a serious form of testing that has a lot of nuances. And then of course on the Deepgram side we’re thinking about that generalized across our entire customer base, all the different market segments that we’re addressing, all the different domains that we seek to represent well in our modeling.

Kanchan Shringi 00:06:50 So how exactly is testing ML models different from traditional software testing?

Conner Goodrum 00:06:55 Well, I can say from the data science side of the house that, when testing ML models for enterprise, enterprises tend to be far more risk averse than your normal user. Enterprises have established software stacks. They generally have much stricter requirements around latency, accuracy, and uptime, all of which need to be met in order for them to be able to serve their products effectively. And enterprises typically span many use cases. A single enterprise customer may use our software for many different product lines or for many different languages with very specific and niche applications. They may be serving internal customers or external customers. And this poses many operational and model development challenges that are really, really interesting. One of the key differences here is that in traditional data science testing, as Jacob alluded to earlier, we generally have a quite well-defined and narrowly scoped problem to answer some sort of question. For example, maybe we’re trying to make predictions about some data: how well can we classify the domain of some audio given some features about that audio?

Conner Goodrum 00:08:07 But when it comes to enterprises, oftentimes there’s a much higher level of system complexity both within our system and the enterprise system that requires testing of both on the model side, which is the side that I’m more familiar with and on the production side of serving. And so when a customer encounters a problem, there’s a decent amount of testing that first needs to happen on our side in order to determine did it happen somewhere on the request side, was it an issue with how the user submitted audio? Is it something specific about the audio itself? Did something go wrong in the production stack or is it actually a fundamental issue with the model? There’s a lot of interdependencies between the model and the production code. There are also interdependencies between Deepgram’s models and how they’re used by the enterprise customer. We’ve also got a much, much larger scale of data that we’re talking about here.

Conner Goodrum 00:09:01 In traditional data science, we may be talking about tens or hundreds of thousands, maybe millions, of test examples, but our stack is serving tens of thousands of requests per second. And so this poses very unique data capturing, storage, and filtering challenges in actually targeting the right type of data. And then we’ve also got the fact that the edge cases in which the models are used are innumerable. There are many, many parameters to investigate, from user-specified API parameters to audio-specific parameters, which could be things about audio quality or acoustic conditions that are present. It could be down to inference parameters that we have set up on the model itself. This could be things like the duration of the audio. Are people submitting very, very short segments of audio and expecting the same types of behavior as submitting minute-long clips? These are almost impossible to design out. And I’ll let Jacob speak to some interesting cases that he’s definitely run across on the edge case side.

Jacob Visovatti 00:10:04 Yeah, we should get into some more stories here at some point Conner, that’ll make for some fun listening. Maybe briefly Kanchan, you asked a bit about how this compares to software testing traditionally speaking. And one fun thing here is I have to say I only have a certain limited perspective. I haven’t worked across the entire AI industry. I do have a useful comparison here because before Deepgram I worked in kind of traditional software consulting doing typical big enterprise application development, things like payments processing and record storage and that sort of thing. I think that one of the big ideas that has emerged from software development very generally over the last few decades is we have a testing pyramid, and of course many of your listeners will be familiar with this, but you can walk through it. You think about some application where there’s a user interface and the user can update his birthday and there’s probably going to be some unit testing that says, hey, if I pass in this object that represents a person’s information, it gets updated with the birthday.

Jacob Visovatti 00:11:01 Okay, we can ensure that that part is correct. And maybe you have an integration test that asserts that this object as you’ve modeled it in your code is correctly mapped into the domain of your database schema. Maybe you have something like a higher-level integration test that asserts that all this works through a backend API. And then finally like at the tippy top of your testing pyramid, the thing that’s the slowest most expensive to run but really the most valuable for confirming that things work is you have some sort of end-to-end test maybe running through Selenium in a browser and you’re testing that this works through the user UI. So that’s your traditional testing pyramid. And really in a sense, this testing pyramid doesn’t go away. We still need to have lots of small test cases that are the foundation and we build up to some more expensive ones that operate near the top.

Jacob Visovatti 00:11:50 It’s just that what those layers look like has really changed because we’re no longer testing the storage and retrieval of data in a data model, which is the vast majority of probably software applications that have ever been written. And now we have this compute-intensive world. So at our lowest level, at this unit level, now we’re thinking about operations in a neural network and their mathematical correctness. In one sense this is still a foundational unit test. We can model this in functional code. In another sense it’s kind of tricky because now you’re taking on this more intense mathematical domain. But then you move up a level in the stack and many of these lower-level functions become a full model network, or maybe there’s even a pipeline of models whose inputs and outputs are wired up together. So now you want to test that that flow works.

Jacob Visovatti 00:12:40 Probably in most AI systems, you have a variety of features, so now you’re testing that that flow works with the intersection of various parameters that users tune, right? You see a lot of modern AI APIs where a lot of power is put in the hands of the user to configure things under the hood like the temperature used in different inference worlds. And so we’re gradually working higher and higher up the stack and you eventually get into the world of okay, we can confirm that we get exactly the right outputs for exactly this one given input. And then you get into the data diversity explosion that Conner mentioned, the fact that in Deepgram’s world we’re taking in arbitrary audio and audio can be encoded in a lot of different ways. You can say all sorts of things within it. And so now you’re trying to deal with that space. And then finally you get into, and I think this is the most powerful world for Deepgram and our customers to collaborate on, the downstream integrations between say a foundational AI system and our customers’ systems.
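To make the lowest layers of that pyramid concrete, here is a minimal sketch of a unit test for a single neural-network operation, swept across a user-tunable parameter. It is an illustration only and not Deepgram's code: the `softmax` function, its `temperature` argument, and the two test grids are assumptions chosen for the example.

```python
import itertools
import math


def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax over a list of floats (illustrative op)."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    shift = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - shift) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def check_softmax_properties(logits, temperature):
    """Property checks that should hold for any input and temperature."""
    probs = softmax(logits, temperature)
    # Outputs form a probability distribution.
    assert math.isclose(sum(probs), 1.0, rel_tol=1e-9)
    assert all(0.0 <= p <= 1.0 for p in probs)
    # The largest logit keeps the largest probability.
    assert probs.index(max(probs)) == logits.index(max(logits))
    # Adding a constant to every logit must not change the result.
    shifted = softmax([x + 100.0 for x in logits], temperature)
    assert all(
        math.isclose(a, b, rel_tol=1e-9, abs_tol=1e-12)
        for a, b in zip(probs, shifted)
    )


# Hypothetical grids: a few inputs crossed with a few user-tunable settings.
LOGIT_CASES = [[0.0, 1.0, 2.0], [-5.0, 0.0, 5.0], [1000.0, 1001.0, 1002.0]]
TEMPERATURES = [0.1, 1.0, 10.0]


def run_grid():
    """Run every (input, temperature) combination; return how many were checked."""
    checked = 0
    for logits, temperature in itertools.product(LOGIT_CASES, TEMPERATURES):
        check_softmax_properties(logits, temperature)
        checked += 1
    return checked


if __name__ == "__main__":
    print(run_grid(), "parameter combinations checked")
```

Each check is a property (probabilities sum to one, a constant shift leaves the result unchanged) rather than a hard-coded expected output, which keeps the test meaningful across the whole parameter grid.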

Jacob Visovatti 00:13:37 We’ve even seen cases where AI outputs improve, but say Deepgram’s speech-to-text outputs are then used by a customer for some sort of NLP process, and they’re doing something like looking for product names mentioned, or key topics discussed in internal meetings. Well, if your transcription output changes significantly in some way, then you might have made an improvement but thrown off a downstream model. And so you actually care about that very full end-to-end flow. It’s the same testing pyramid, but it’s just cast in a new light.

Kanchan Shringi 00:14:13 Thanks for highlighting all those challenges. So who exactly is responsible for doing this testing? Is it the data scientist, is it the ML engineer? I’ve heard this new term of an AI engineer. So who is it? Which role?

Conner Goodrum 00:14:29 I wish there was a single answer for you. Unfortunately or fortunately, the first part of this process is always to identify where exactly something is going wrong and that comes with high levels of observability throughout the system, whether that’s in the production system or whether that’s within the model. Who owns it? Well, when we’ve identified where in the system things are going wrong, then that sort of dictates whether it’s more on the ML engineer let’s say, or on the engineering side or on the research side. If it’s a fundamental issue with the model where the model is producing hallucinations under some bad conditions, then that warrants a retrain and that warrants basically a revision to the model to ensure that that doesn’t happen. We want to, as much as possible, make our models very robust to many different types of audio conditions and acoustic parameters. And so we want to make sure that we design that out as low as possible because that really helps the production side of things and making that far more streamlined.

Jacob Visovatti 00:15:34 Yeah, I think these questions of roles are so fun, right? Like when the world is kind of shifting beneath our feet and all these new tools for developing technologies are coming out, I’m reminded of things like when the industrial revolution came about, all of a sudden you have like a factory floor manager and there’s just no parallel for that for work out on a farm field, right? And you have this new profession that emerged. And so I think you’re actually asking a pretty profound thing here. Really the software engineer’s answer is we always want to just throw product managers under the bus, right? So whose responsibility is it? It’s got to be product. I say that with a lot of love for our team. We can shout out Natalie and Sharon and Evan and Peter and Nick because they do a great job for us here at Deepgram.

Jacob Visovatti 00:16:18 But I think Conner kind of hit the nail on the head here, which is that it has to be an interdisciplinary effort. There’s a certain amount of rigid, low-level functional testing that a software engineer is going to absolutely knock out of the park when you ask him to look into this kind of area. And then there’s thinking about a wide data domain and like even are you sampling correctly across the entire distribution of data that represents your production environment and what kinds of potential biases might you be encountering in trying to construct your test set? Look, those are questions that are really well answered by somebody like Conner with a PhD that I very clearly lack. And so I think we’re just seeing the age-old story: quality is always an interdisciplinary problem and we’re just finding new ways to weave together the right disciplines to address the quality issue.

Conner Goodrum 00:17:08 Yeah, and you mentioned the AI engineer role and while we don’t have an explicit AI engineer role at Deepgram, really what I think about, and everybody’s got their own preconceived notions about what an AI engineer does, but it’s really somebody who can sort of understand, I think, both sides of the coin. Understand both the production side, the hosting of models, but also the training and model development side of the house. And you may not need to be sort of an expert in both, but the ability to share a common vernacular with say folks who are more on the production side and perhaps folks who are more on the research side, being able to have that shared understanding and especially when developing the model, being able to understand the implications of design decisions that you’re making there. For example, if you made a model that was 70 billion parameters, from an accuracy perspective, however you want to measure that, it will likely do very well. However, from a latency perspective, you’re going to take a massive hit there without a significant amount of compute. So being able to understand those types of interdependencies and where trade-offs are worth making is I think where the quote unquote role of AI engineer is going, and it is going to be critical in handling these very interdisciplinary projects and types of problems.

Kanchan Shringi 00:18:31 Thank you. That helps. So how do LLMs make this already difficult problem even worse?

Conner Goodrum 00:18:39 Well, we use LLMs a fair bit here at Deepgram. We’ve got a new feature, a new product line that’s our voice agent API. And really what that is, is we have the ability for users to bring their own LLM to a voice agent party where you use Deepgram’s speech to text on the front end and Deepgram’s text to speech on the backend with your own LLM in the middle. LLMs are notorious for hallucinations and a whole host of other problems if they aren’t formulated correctly. That makes evaluating the quality of LLMs quite a challenge, and especially in the context of this voice agent API, our models expect a certain type of input and a certain type of output. And so stringing them together always presents unique challenges. For example, on the text to speech side of the house, oftentimes there are ways that things are said that when they’re written down they become nebulous.

Conner Goodrum 00:19:39 For example, if you typed in or if you were to write “14 km”, it could be that the person said 14 kilometers, it could be one four km, it could be 14,000 meters, right? So there are lots of different ways that that could actually be vocalized. And so when you’re building a voice agent, if the outputs of your LLM are let’s say formatted in strange ways, then that can often lead to the text to speech side of the house mispronouncing things and that comes off to a user as maybe something’s wrong with the system when in reality it is maybe a misconfigured prompt in the LLM or hallucination of the LLM that can lead to very strange outputs in a voice agent context.
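The ambiguity described here can be made visible with a few lines of code. The following is a rough sketch, not a real text-normalization front end: the `candidate_readings` helper, the `UNITS` table, and the restriction to numbers below 100 are all assumptions made for illustration.

```python
ONES = ["zero", "one", "two", "three", "four",
        "five", "six", "seven", "eight", "nine"]
TEENS = ["ten", "eleven", "twelve", "thirteen", "fourteen",
         "fifteen", "sixteen", "seventeen", "eighteen", "nineteen"]
TENS = ["", "", "twenty", "thirty", "forty",
        "fifty", "sixty", "seventy", "eighty", "ninety"]

# Hypothetical unit table: one expanded form and one letter-by-letter form.
UNITS = {"km": {"expanded": "kilometers", "spelled": "k m"}}


def number_words(n):
    """Read an integer from 0 to 99 as a whole number."""
    if not 0 <= n <= 99:
        raise ValueError("this sketch only covers 0 to 99")
    if n < 10:
        return ONES[n]
    if n < 20:
        return TEENS[n - 10]
    tens, ones = divmod(n, 10)
    return TENS[tens] if ones == 0 else f"{TENS[tens]}-{ONES[ones]}"


def digit_words(digits):
    """Read a digit string one digit at a time."""
    return " ".join(ONES[int(ch)] for ch in digits)


def candidate_readings(token):
    """List plausible spoken forms of a written token such as '14 km'."""
    digits, unit = token.split()
    if not digits.isdigit() or unit not in UNITS:
        raise ValueError(f"unsupported token: {token!r}")
    names = UNITS[unit]
    return [
        f"{number_words(int(digits))} {names['expanded']}",
        f"{digit_words(digits)} {names['spelled']}",
    ]


if __name__ == "__main__":
    for reading in candidate_readings("14 km"):
        print(reading)
```

A text-to-speech system has to commit to exactly one of these readings, which is why the formatting of the LLM output upstream matters so much.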

Jacob Visovatti 00:20:28 Yeah, I think these are some great points, and when I think about the problems with LLMs, I actually think that these are things that anybody in the speech world has been thinking about for quite a long time. The cool revolution of sorts that’s occurred with the popularity of LLMs and the APIs behind them and all the really neat things that people are building on top of the OpenAI and Anthropic APIs and gosh so many other providers now (Grok is one of the big ones) is they’re thinking about the challenges of dealing with natural language as an input and as an output and all the things that go into that. Conner mentioned like the one four km, we’ve been thinking about some of the ambiguities in natural language for a while. I remember one of the first great examples that I heard was “I’d like two 12-inch pizzas delivered to 212 Main Street.”

Jacob Visovatti 00:21:17 And it’s funny, the degree to which a human can instantly recognize exactly what you mean there. That there was two and then there’s 12 inch and the different parts of that, you parse it without a problem because you get the context. And even LLMs can do this well depending on how you set them up. And then depending on how you’re trying to parse like your user input or the LLM output and trying to make sense of it, all of a sudden you run into a lot of huge problems. And this is where you see people playing all sorts of games with their prompting. This is why prompt engineering is almost a sub-discipline nowadays and why you see emerging concepts out there, like this Model Context Protocol that’s just kind of been going viral over the last several weeks I think, where people are attempting to introduce additional structure to these interactions specifically because the way that humans deal with this unending flow of natural language actually proves to be extraordinarily difficult to model when you’re trying to get down to the level of writing specific code.

Jacob Visovatti 00:22:18 An interesting problem we were just thinking about today at Deepgram is you have a system where somebody’s calling in and maybe checking on an insurance claim and they need to provide a birth date. And so they say, yeah, it’s 1971, but there’s this large gap in the middle. And how do you know? If I say 1970, it may be that I’m about to continue and give another digit such that it’s going to be 1971, 1975, what have you. And maybe I paused, maybe I had a tickle in my throat or something like that. Or maybe I was done. Maybe it was just the year 1970. And again, humans rely on a lot of somewhat inscrutable clues to intuit when somebody is actually done speaking rather than when they merely stopped speaking. But we still get this wrong. We certainly do, right? In a conversation like this podcast interview, we’re kind of very polite and waiting until somebody’s very obviously done.

Jacob Visovatti 00:23:11 But when you’re talking with friends, you start speaking and talk over each other and say, oh sorry, sorry, I thought you were done. Humans don’t get this right perfectly. And so of course our LLMs are not going to right now. And of course the code that we write to parse inputs and outputs to LLMs and deal with natural language is not going to get that kind of thing right. And that’s just a fascinating area that I think a lot more of the programming and software engineering world is getting exposed to because of this technology that’s allowing people to deal with natural language in a new way.

Kanchan Shringi 00:23:39 I do read that Deepgram delivers voice AI agents, so you already have the speech to text and text to speech and the LLM in the middle and perhaps RAG to go with it. So how do you guys test? Have you developed new quality metrics for hallucinations and inconsistencies? What’s the methodology?

Jacob Visovatti 00:24:01 Kind of, maybe it’d be helpful to actually like just start with the speech-to-text side where there’s a whole host of interesting metrics that we’ve thought about over time.

Conner Goodrum 00:24:09 Yeah, absolutely. I think from the speech-to-text side, we’ve got sort of established industry-wide metrics that are commonly used to compare providers and provide some notion of ASR quality. These are things like word error rate, word recall rate, perhaps you have punctuation error rates and capitalization error rates. You have a whole slew of text-centric metrics which compare whatever the model produces against some ground truth. There are a number of, let’s say, benefits and drawbacks of these types of metrics, but oftentimes those are insufficient to get down to the level of detail that is required. Take word error rate as an example: if somebody says a single word and a model produces two words, you are going to have a word error rate that is massive and it is going to come off as though the model is very wrong. In reality, it may be that the ground truth word is like a hyphenated word, but the model predicts two independent tokens and therefore it doesn’t quite tell the whole story.
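The hyphenation effect is easy to reproduce. Below is a minimal sketch of word error rate as word-level edit distance divided by the number of reference words, which is the standard definition; the whitespace tokenization and the example strings are assumptions for illustration, and real evaluations usually normalize text first.

```python
def word_error_rate(reference, hypothesis):
    """Word-level Levenshtein distance divided by the reference length."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen
    # so far and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution_cost = 0 if ref_word == hyp_word else 1
            curr.append(min(
                prev[j] + 1,                      # deletion
                curr[j - 1] + 1,                  # insertion
                prev[j - 1] + substitution_cost,  # match or substitution
            ))
        prev = curr
    return prev[-1] / len(ref)


if __name__ == "__main__":
    # One reference token, two hypothesis tokens: one substitution plus
    # one insertion over a single reference word.
    print(word_error_rate("well-known", "well known"))  # 2.0
    print(word_error_rate("the cat sat", "the cat sat"))  # 0.0
```

A score of 2.0, that is 200 percent, for a transcript most readers would call correct shows why a single headline number can mislead.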

Conner Goodrum 00:25:15 We had some interesting instances where we had this, like, silence pathology as an example of one of these hallucinations. In instances where audio would be provided with speech to our model, the model would come back empty. And what we found was that there’s a fraction of silence that needs to be included during training such that the model actually learns to ignore silence and not predict any text when there is no speech. And so the ability to uncover nuances like this through really detailed inspection of the data, things like deletion streaks, insertion streaks, more nuanced metrics beyond just your single sort of high-level industry-wide standards, really helps paint a much deeper picture about where the model may be, let’s say, too verbose or too silent, and then that indicates the conditions under which we should remedy that.
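One way to surface deletion and insertion streaks is to align the reference and the model output and measure the longest run of each. The sketch below uses Python's standard `difflib.SequenceMatcher` for the alignment, which is a convenient stand-in and not necessarily the aligner any production system uses; the function name and example sentences are hypothetical.

```python
from difflib import SequenceMatcher


def longest_streaks(reference, hypothesis):
    """Return the longest run of consecutive deleted and inserted words.

    Only pure 'delete' and 'insert' alignment spans are counted; spans
    that difflib labels 'replace' are ignored in this sketch.
    """
    ref = reference.split()
    hyp = hypothesis.split()
    matcher = SequenceMatcher(a=ref, b=hyp, autojunk=False)

    longest = {"delete": 0, "insert": 0}
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag == "delete":
            # Words present in the reference but missing from the output.
            longest["delete"] = max(longest["delete"], i2 - i1)
        elif tag == "insert":
            # Words in the output with no counterpart in the reference.
            longest["insert"] = max(longest["insert"], j2 - j1)
    return longest


if __name__ == "__main__":
    # The model went quiet in the middle of the utterance.
    print(longest_streaks("please call me back after five today",
                          "please today"))
    # The model kept producing words after the speaker stopped.
    print(longest_streaks("thank you", "thank you you you you"))
```

A long deletion streak points at a model that is too silent, and a long insertion streak at one that is too verbose, even when the aggregate error rate looks acceptable.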

Jacob Visovatti 00:26:15 Yeah, I think you were talking about some of these challenges involved with natural language and maybe to put kind of a spotlight on some of the things Conner was pointing out there, it’s almost trivial to construct some examples where, by a certain traditional metric, maybe something like speech recognition output looks extremely good and then when actually put in a business context it’s woefully insufficient. I could give you an example, like let’s say that we produce a transcript of this conversation and most of the words that we’re using are normal everyday words. But then if we start talking about specific aspects of neural network architectures and then we start using some software terms like POJOs and POCOs and your ORMs and your SQLite and WebSockets and yada yada, we use all this jargon, maybe a very general purpose speech recognition model is going to miss those.

Jacob Visovatti 00:27:06 Now it’s going to get the vast majority of the words right in this conversation. It’s going to miss a handful of those. And so maybe you want to paint a picture, say, oh the model was 90, 95% accurate, doesn’t that sound great? But then Kanchan, you go back and you look at your transcript and you say, hold on, this stinks, you didn’t get any of the words that are most important to me and my listeners, you totally missed all of my domain jargon and that’s actually some of the stuff I cared about the most. I would’ve preferred that you have errors elsewhere. And so this is a really interesting thing that if you only rely on one particular metric, you can really miss the forest for the trees. And this is a problem that we’ve run into time and again and why we’ve gradually expanded our testing suite to try to encompass what is important in this domain. And almost always when we have a customer who’s talking to us about needed improvements, one of our first questions is like, let’s get really nitty gritty, what is important to this customer? What really matters? Because we need to understand that, otherwise we could go optimize entirely the wrong things.
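A metric that targets the words a customer cares about can sit alongside overall accuracy. The following is a minimal sketch of such a keyword recall score; the function name, the whitespace tokenization, and the example sentences are assumptions made for illustration.

```python
from collections import Counter


def keyword_recall(reference, hypothesis, keywords):
    """Fraction of keyword occurrences in the reference that the
    hypothesis also contains (case-insensitive, whole-word match)."""
    wanted = {k.lower() for k in keywords}
    ref_counts = Counter(w for w in reference.lower().split() if w in wanted)
    hyp_counts = Counter(w for w in hypothesis.lower().split() if w in wanted)

    total = sum(ref_counts.values())
    if total == 0:
        raise ValueError("reference contains none of the keywords")

    # Credit each keyword at most as many times as it appears in the reference.
    found = sum(min(count, hyp_counts[word])
                for word, count in ref_counts.items())
    return found / total


if __name__ == "__main__":
    jargon = ["orm", "sqlite", "websockets"]
    reference = "we keep the orm on sqlite and stream results over websockets"
    hypothesis = ("we keep the or em on sequel light "
                  "and stream results over web sockets")
    # Most everyday words are right, yet every domain term is missed.
    print(keyword_recall(reference, hypothesis, jargon))  # 0.0
```

Tracking a score like this per customer vocabulary makes the "you missed all of my domain jargon" failure visible even when the headline accuracy looks strong.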

Kanchan Shringi 00:28:07 So you do get a lot of feedback from customers as they test the product with their use cases and they provide you feedback. So what do you do with that? How do you roll that into your tooling?

Conner Goodrum 00:28:19 The first challenge with that, and that’s a really unique challenge to enterprise, is the added difficulty in that communication pathway. It’s not as easy as being able to look into sort of a database and do our own data mining there to determine what is and is not going well. We really do rely on feedback from our customers to indicate where are things going wrong and we have a customer success team who is there gathering feedback from enterprise customers to capture that and that’s a critical role. If that’s not done well then there can be information loss between what the customer actually wants and how that gets communicated to a technical team. So how do you actually go about incorporating that? Well, it starts with having a very clear and specific idea of what the problem actually is and that sort of provides a north star of where to start the investigation.

Conner Goodrum 00:29:09 We can look back and say, okay, was it something that went wrong somewhere with some formatting in our engineering stack? Okay, no, then is this actually something that is wrong with the model itself? Well, let’s go and try to reproduce this issue under a large number of different circumstances and see if we can find out whether this is a model-specific issue. And if so, then great, then we can come up with a decision to train that out of the model. And this whole process is iterative, right? We get feedback, we incorporate these changes into a model retrain or perhaps somewhere in the engineering pipeline itself, in the production pipeline. And we make the change, we push the change, communicate the change to the customer and wait for more feedback. And it’s a very important loop that happens there and we want to make sure that if we were to fix a problem, let’s say for one customer, that we don’t negatively impact others. And so we really want to make sure that we’re consistently improving.
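The "fix one customer without hurting the others" check can be expressed as a simple gate on per-segment metrics. This is a sketch under stated assumptions: the segment names, the error-rate numbers, and the tolerance are invented for illustration and do not describe Deepgram's data or release process.

```python
def find_regressions(baseline, candidate, tolerance=0.005):
    """Compare per-segment error rates (lower is better) for two models.

    Returns the segments where the candidate is worse than the baseline
    by more than `tolerance`, mapped to (old, new) error rates.
    """
    regressions = {}
    for segment, old_rate in baseline.items():
        if segment not in candidate:
            raise KeyError(f"candidate has no result for {segment!r}")
        new_rate = candidate[segment]
        if new_rate - old_rate > tolerance:
            regressions[segment] = (old_rate, new_rate)
    return regressions


if __name__ == "__main__":
    # Made-up word error rates per customer segment.
    baseline = {"healthcare": 0.080, "call_center": 0.120, "meetings": 0.100}
    candidate = {"healthcare": 0.060, "call_center": 0.150, "meetings": 0.102}

    problems = find_regressions(baseline, candidate)
    for segment, (old, new) in problems.items():
        print(f"{segment}: {old:.3f} -> {new:.3f}")
```

In this made-up run the retrain helps the healthcare segment but would be held back, because the call-center segment got noticeably worse.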

Kanchan Shringi 00:30:11 So I think at the high level, abstracting this out, you’re really saying that whatever training you have done is really on offline data initially. Once the model is used in real-world production, there is real-time feedback. And so just thinking about that, what would your advice be to any other company that wants to make sure that their models are well tested, incorporating this real-time feedback over what the model was trained on? What should their approach be?

Jacob Visovatti 00:30:41 Yeah, a couple of thoughts on this one. This is one of the really cool areas of development inside Deepgram because this is one of the interesting aspects of trying to really make your company AI-first and to really be foundational AI. The really noteworthy thing with a human is that I’m thinking about my work day today and I’m thinking about days that I feel like I was successful, got a lot done, made a good impact versus not, and I’m able to tune that over time, right? There’s always that live active learning, that feedback loop in the bigger picture. And that’s what we want to approximate in one sense while still having well-testable models that we know aren’t going to go way off the rails when they’re running in production. I think what this behooves us to produce and what we’ve already made a lot of great progress on is an overall loop whereby you’re able to look at some of the data that’s coming in and running through your models in production and you’re able to understand in various ways where are you probably weak on this?

Jacob Visovatti 00:31:41 And then how do you pull that and automate some loop to kick off new training, validate an updated model and push it out into the world like Conner was describing. Now, there’s a lot of ways you could approach that. One is that you could say, well just from knowing about our models, I know some things about where they’re weak and I can describe them to you heuristically. And so maybe I could say, hey, I know that if a customer talks about some of the following things, we’re probably going to wind up struggling. And then you could set some heuristic based almost triggers or filters and start selecting some of this data in order to do training. Ultimately where you really want to push that I think in a really foundational AI manner is you want to have something that’s not rules based, not heuristics based, but is again, model based determining where are you strong and weak, just like a human’s kind of intuitive judgment.

Jacob Visovatti 00:32:36 Actually, the way being a software manager works, this is kind of my day-to-day experience: hey, I’m not an expert in this but I know who is, I know who’s going to struggle with this thing and who won’t. And so this is actually increasingly, I think, an important part of the world to build out: we need models that know where the other models are strong and weak, and we want models that select the right model to run at runtime. That’s hard because you need it to be extremely low latency in order to continue meeting real-time use cases. And then we want models running post facto in order to say where we’re probably weak, let’s select the right data and retrain and improve.
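The heuristic triggers Jacob describes as a starting point, before a model-based selector replaces them, might look roughly like the Python sketch below. Everything in it is an assumption for illustration: the `Utterance` fields, the topic list, and the confidence threshold are hypothetical and are not Deepgram's actual pipeline.

```python
from dataclasses import dataclass


@dataclass
class Utterance:
    transcript: str
    mean_confidence: float  # hypothetical per-utterance confidence in [0, 1]


# Hypothetical topics where the team already suspects the model is weak.
WEAK_TOPICS = ("dosage", "milligram", "account number")
CONFIDENCE_FLOOR = 0.80  # illustrative threshold, not a recommended value


def should_select_for_training(utt: Utterance) -> bool:
    """Rule-based trigger: flag data that mentions a known-weak topic
    or that the model itself was unsure about."""
    text = utt.transcript.lower()
    mentions_weak_topic = any(topic in text for topic in WEAK_TOPICS)
    low_confidence = utt.mean_confidence < CONFIDENCE_FLOOR
    return mentions_weak_topic or low_confidence


def select(utterances):
    """Return the subset of utterances worth routing to a retraining queue."""
    return [u for u in utterances if should_select_for_training(u)]
```

A filter like this is cheap to run after the fact on production traffic; the model-based judgment Jacob goes on to describe would replace the hand-written rules while keeping the same interface.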

Conner Goodrum 00:33:12 Just to expand on that a little bit, production data is key here. I mean the whole point of this is how can you get the most realistic in-distribution data that your customers are using into your testing suite such that you can be proactive in doing testing rather than reactive. I know personally from having trained models, I know many instances, like Jacob was mentioning, where our models are likely to be weak and that’s really helpful feedback in being able to incorporate that. But like I said, it’s an iterative process, being able to pull in conditions early, whether those are similar or in distribution in terms of, let’s say, acoustic parameters or parameters about what’s actually being said, versus parameters that are likely to be seen in our production system, for example, the duration of audio, or whether people are using us in a streaming context or in a batch context. Even if it’s offline data, trying to duplicate and do your testing as close to your production system as possible will help elucidate some of those potential failure modes early. So even if the data itself is not, let’s say, one for one, that’s something that can be iterated on, but you can get a long way there by testing as close to your production parameters as you can early on.

Kanchan Shringi 00:34:34 And do any kind of intermediate environments, alpha beta testing help with this? Have you experimented with that?

Jacob Visovatti 00:34:42 Oh yeah, absolutely. Like we talked about with the testing pyramid earlier, there’s a whole host of knowledge accumulated over recent decades that still very much applies even in the AI-first software space. There are pre-production environments, there are checks run in CI. We want to make sure that the full software stack is deployed in a staging environment and run a suite of end-to-end tests against it before promoting a new version of a key service to production, that sort of thing. And likewise for our models, which are deployed separately from the software components themselves, we train a model, put it in its actual finalized packaging as if it were going to prod and then run our battery of tests against it. I think it’s absolutely essential that you do these things pre-production or else your defect rate is going to look pretty poor.

Kanchan Shringi 00:35:30 So in this pre-production testing, how does scalability and performance testing differ when you are talking models, what kind of resource constraints this introduces with you needing additional GPUs, how much memory, what kind of latency do you consider in this testing? Anything you can talk about that?

Jacob Visovatti 00:35:51 Yeah, I mean this is a huge fascinating space. Maybe it’s helpful to start just by backing up and giving the Deepgram view of this kind of thing. Just like there are foundational AI companies that are thinking not from what can I build using the cool pieces that are out there, but really what are the things that I can create with the raw materials from which AI networks are made, I think that there’s also the question: are you thinking foundationally about the enterprise use case or are you trying to get there later on? So what all that adds up to is, you do still need to decide on the right metrics. Latency and throughput are probably two of the most important characteristics that anybody ever tests, where with latency, you’re caring about that end user experience in a serious way.

Jacob Visovatti 00:36:36 But throughput is an interesting one too because we certainly care about that on the Deepgram side: increased throughput means more business per unit of hardware, which means either we have healthier margins or we can pass on lower costs to our customers, compete on price, right? That’s a really key factor for us as well. But with Deepgram also thinking about this enterprise use case, we offer a self-hosted version of our software. So some of our customers will run the exact same Deepgram software, services, and models that we offer in a hosted cloud environment. They’ll run this in their own data centers, VPC deployments, et cetera. And what all that adds up to is that they certainly care about the throughput quite a bit as well. Then all of a sudden if your new model has these amazing capabilities but requires 10X the compute resources, well, they’re not so amazed anymore because now they’ve got to figure out how to go to their AWS rep and scale up their quota 10X or they’ve got to go talk to the infrastructure team and figure out how to expand the data center footprint 10X.

Jacob Visovatti 00:37:34 And so when you’re really focused on the enterprise use case, you have to care about this throughput testing. So what that looks like is you need a set of benchmarks and you need to verify that later releases of software are upholding previous benchmarks. So that’s the sort of performance regression testing that we run on each release in a pre-production environment. One of the interesting things there is that hardware configuration really matters. Certainly everyone’s aware of GPU models being a big deal: NVIDIA releases the newest GPU model and everyone’s rushing to use that. But then even things like how well you are funneling work to that GPU, your CPU configuration, your PCIe bus, your motherboard, all this stuff winds up mattering quite a bit. And so a key phrase that comes up in the software engineering world at Deepgram quite a bit is we need to test this on prod-like hardware. We don’t even use the exact same hardware across every server, but we at least need it to be not the local GPU in my laptop, which is not anything like the data center class card. We need it to be on prod-like hardware in order to verify a lot of this performance. I think I might’ve answered your question or I might’ve taken that in a different direction, Kanchan, so tell me where you’d like to go.
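The performance regression testing described above (verify that a new release upholds previous benchmarks) can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the function names, the use of median latency, and the 10% tolerance are all hypothetical choices, and a real run would need to happen on prod-like hardware as Jacob stresses.

```python
import statistics
import time


def measure_latencies(fn, inputs, warmup=2):
    """Time fn on each input, after a few untimed warm-up calls."""
    for item in inputs[:warmup]:
        fn(item)
    latencies = []
    for item in inputs:
        start = time.perf_counter()
        fn(item)
        latencies.append(time.perf_counter() - start)
    return latencies


def check_regression(latencies, baseline_p50, tolerance=0.10):
    """Pass if the median latency is within `tolerance` of the stored baseline.

    Returns (passed, measured_p50). The 10% default is illustrative only.
    """
    p50 = statistics.median(latencies)
    return p50 <= baseline_p50 * (1 + tolerance), p50
```

In a release pipeline, `baseline_p50` would come from the benchmark recorded for the previous release on the same hardware class, and a failed check would block promotion to production.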

Kanchan Shringi 00:38:45 I was just wondering, maybe give an example of where doing scalability and performance testing for models has been a particularly different task. Perhaps just an example would help.

Jacob Visovatti 00:38:57 Sure, yeah, I mean, I’ll give a fun one. Well, one of the big parts of a release is what are we going to charge for it? And part of that question of what are you going to charge, of course, is market driven: what is the competition doing? What will your customers sustain? But part of it’s going to be based on your costs. We don’t want to sell this thing at negative margins. We’re not giving away ASR; that’s not our business. And so that means that, rightfully so, our product team comes to my engineering team and says, hey, what does it cost to run this model? Well, it turns out that that in itself is a complex question: on what hardware, at what batch sizes, with what audio inputs? Because actually the performance characteristics wind up changing slightly depending on things like what language is being spoken.

Jacob Visovatti 00:39:40 But it’s our job to try to boil all that down to, okay, here’s this complex multi-parameter space, let’s try to get this down to a couple of straightforward answers such that we can say, compared to the previous generation, all in all, on the kind of data we’re serving in production, here’s the difference in what it costs us on the infrastructure side to host and run this model. And now that you have that delta, whether it’s more expensive, less expensive, whatever, you can make some decisions at the business level on the pricing strategy. I think that’s a really fun, interesting thing to try to address in this world of AI software engineering.

Kanchan Shringi 00:40:19 Anything you can talk about other aspects of testing for enterprise apps. Let’s maybe talk about security and privacy. How is that different when you’re talking models?

Conner Goodrum 00:40:31 Well, it presents a whole host of fun challenges. Many customers may be under GDPR or HIPAA restrictions and therefore are unable to share data with us at all. And in some instances, they will share data, but then we need to be extremely careful about how we handle HIPAA data, for example. So those enterprises have industry standards that they need to comply with and similarly we need to make sure that we are also compliant and being extra careful in the steps of when we’re training these models. We want to make sure that in an instance where, let’s say, you have a mistranscription, or you are using an LLM for something, you don’t accidentally blurt out someone’s social security number. And so we try very hard to design those problems out from the get-go, like I said, from the sort of model-first perspective. But doing so is very challenging in the face of being very data sparse.

Conner Goodrum 00:41:33 And so we rely on things like synthetic data to be able to generate similar-sounding or in-distribution instances of these types of keywords that we can actually train our models on to improve performance for our customers. And when we maybe put it out in beta and have folks test it, then they’re able to test it on their real-world data and provide us feedback on areas where it’s working well, or maybe it works well for social security numbers but doesn’t do very well on drug terminology. Well, that’s very, very valuable information for us. I can then go back and work on improving the model performance on perhaps key terms or more instances of social-security-like digits voiced in many different voices in very different acoustic backgrounds, all in an attempt to sort of expand the robustness. And so yeah, data sparsity and data governance make this a very challenging problem, both from designing it from the ground up, but also in even getting examples of when things are failing. So definitely a unique challenge.

Jacob Visovatti 00:42:43 Thinking from the software side, there’s again a lot of bread and butter that certainly applies across industries. The data needs to be encrypted in flight and at rest, you need to have well-constructed access policies, a whole host of things. I’m really thankful we have a great information security team here at Deepgram and we’ll do another shout out for EAB, who’s the director over there, and they do a great job helping us understand our compliance obligations. And so there’s quite a bit there. Don’t log customer data in your logs, it’s a bad idea. But going beyond some of the simple parts and the basics there, Conner, I think one of the interesting things that we’ve seen in the world of AI is issues where you have, say, an LLM trained on customer A’s data and customer B uses it and actually some of customer A’s secrets leak to customer B.

Jacob Visovatti 00:43:31 Thank goodness we’ve never had that exact problem at Deepgram. But I think we’ve all seen some scary headlines along those lines and that’s why for Deepgram, where we do a lot of model customization, we have to ensure a certain amount of isolation as well. So if a customer is sharing data with us for the purposes of model training, we have to be very clear about whether we have the rights to bake that into our general models or just into a custom model for just this customer. The custom models are isolated in their training in certain ways from others and there’s a role-based permissioning system making sure that user A can only access the models that user A should have access to. So there are some interesting new concerns in the world of AI too, in that you can have new kinds of leaks by training a model on inappropriate data and that’s where you need great systems internally ensuring that only the correct data is used to train the correct models.
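The two isolation rules Jacob outlines (a user can only reach the models they should have access to, and a customer's data only trains the models it is permitted to train) could be expressed as simple checks like the Python sketch below. The registry, account names, and function names are hypothetical illustrations, not Deepgram's permissioning system.

```python
# Hypothetical registry: model id -> owning account (None means a general model).
MODEL_OWNERS = {
    "general-v1": None,
    "custom-acme": "acme",
    "custom-globex": "globex",
}


def can_use_model(account: str, model_id: str) -> bool:
    """An account may run general models and its own custom models only."""
    if model_id not in MODEL_OWNERS:
        return False
    owner = MODEL_OWNERS[model_id]
    return owner is None or owner == account


def may_train(model_id: str, data_owner: str, general_use_granted: bool) -> bool:
    """Customer data may train that customer's custom model; it may train a
    general model only if the customer granted rights for general use."""
    owner = MODEL_OWNERS[model_id]
    if owner is None:
        return general_use_granted
    return owner == data_owner
```

Running a check like `may_train` as a gate inside the training pipeline, rather than relying on convention, is one way to make the "only the correct data trains the correct models" rule testable.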

Kanchan Shringi 00:44:26 And that is a very useful example that you mentioned. So definitely for enterprise customers it is very important to know which data you can actually use for training and cross-training. Okay, thanks for bringing that up, Jacob. So I’m going to talk about languages now. Let me confirm what you said earlier, Conner: a lot of your customers are building with an LLM in the middle, with speech-to-text and text-to-speech on either side. And I did that recently. I have a cooking blog and I used it with one of the OpenAI models to do RAG so that it understands my recipes and only my recipes. I don’t want any general recipes. And then I integrated that with Deepgram. What should I do now to make sure my system behaves as I would like it before I roll it out to some of my friends? How do I test this? What would your advice be for me? And especially talking multiple languages, because I’d like to share this with my mother-in-law who doesn’t speak English well.

Conner Goodrum 00:45:30 Well that’s a great question. So what you would want to do in this type of instance is to have observability at the many different stages of your pipeline. So you mentioned having an ASR system, which is basically your ears of your system. And so you want to make sure that what comes out of that is in fact what you said. Similarly, you then want to check the second stage of your LLM, which is sort of the thinking portion of your system and you want to make sure that what comes out of your LLM is actually sort of the correct thought of your system. And if you were using the text to speech side of things, then you would want to see what was actually put into that portion of the model and is that actually what you heard your model respond to you? And so this observability at the many stages throughout the pipeline is incredibly helpful.

Conner Goodrum 00:46:20 In this instance it might be challenging, but an ideal scenario would be you would have a human-labeled version of what you said when you said it such that you were able to, for example, calculate word error rates or deletion streaks or the various other metrics that you would use to classify the quality of your ASR. And similarly have metrics that you could apply at the various stages to understand how well the various aspects of your system are performing. The same thing holds for different languages. For example, if you were speaking Spanish to it, the same accuracy metrics could be applied on the ASR side, but then there would come an added level of understanding or perhaps prompt engineering, understanding how well that Spanish is actually converted to English, presuming your RAG is built on the English side of things. And then when it’s voiced back to you, ensuring that if you want it to respond to you in Spanish, perhaps it warrants some notion of similarity of the TTS quality versus perhaps a human preference type of score.
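Word error rate, the first metric Conner names, is the word-level edit distance (substitutions, deletions, insertions) between a human-labeled reference and the ASR output, divided by the number of reference words. The Python sketch below is a minimal version that assumes lowercasing and whitespace splitting are enough normalization; production scoring usually normalizes punctuation and numerals too.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = word-level Levenshtein distance / number of reference words."""
    ref = reference.lower().split()
    hyp = hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen so far
    # and the first j hypothesis words.
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            substitution = prev[j - 1] + (0 if ref_word == hyp_word else 1)
            deletion = prev[j] + 1       # reference word missing from hypothesis
            insertion = curr[j - 1] + 1  # extra word in hypothesis
            curr.append(min(substitution, deletion, insertion))
        prev = curr
    return prev[-1] / len(ref)
```

For the cooking example, comparing the labeled reference "add two cups of flour" against an ASR output of "add two cups flour" counts one deletion over five reference words.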

Jacob Visovatti 00:47:23 This kind of testing is fascinating and I think one thing that we have to note is that starting from scratch, it definitely is a big workload and that’s why looking at even the most recent Y Combinator crop of companies, I think there were four or five different voice agent testing focused companies where their entire business was to help solve this sort of problem. So really if I were somebody just working independently trying to put together a cool personal tool like this, that’s one of the first places I would reach. I would go for one of those tools and see at least what can I get? But I do think that if I was to try to think about the problem from scratch, Conner pointed out a lot of the really important aspects of like, you need to understand that you have a pipeline of operations speech to text, maybe translation as a step in there and then knowledge retrieval and information generation and then speech synthesis.

Jacob Visovatti 00:48:16 And you want to be able to think about these in sort of an organized framework as discrete pieces because you can roughly think about each one in isolation. And probably one of the most interesting cases there is going to be the LLM-centric portion and that’s where thoughtful curation of a test set is probably going to be the most important for this case. If you know your own recipe catalog pretty well, like you have it roughly in your head, then probably one of your fastest options is going to be to do some live human-in-the-loop prompt engineering where you play with, okay, given this prompt and what I know of my recipes, here are these 10 different questions that I need this thing to be able to ace. And if it’s acing these, then it’s probably going to be on the right track. That’s sort of the rough way you think about it. And of course, as you want to get more and more robust, that number 10 becomes 100 or a thousand.
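The "10 questions this thing needs to ace" idea can be captured as a tiny evaluation harness. The Python sketch below is an assumption-heavy illustration: `fake_recipe_bot` is a stand-in for the real RAG pipeline, the questions and expected terms are invented, and substring matching is a deliberately crude pass criterion.

```python
def run_eval(answer_fn, cases):
    """Run each (question, required_terms) case through answer_fn and
    return the failures as (question, missing_terms) pairs."""
    failures = []
    for question, required_terms in cases:
        answer = answer_fn(question).lower()
        missing = [term for term in required_terms if term.lower() not in answer]
        if missing:
            failures.append((question, missing))
    return failures


def fake_recipe_bot(question: str) -> str:
    """Hypothetical stand-in for the real LLM + retrieval call."""
    canned = {
        "How long do I bake the banana bread?": "Bake it for 55 minutes at 350F.",
        "What goes in the dal?": "Lentils, turmeric, and cumin.",
    }
    return canned.get(question, "I only know the recipes on this blog.")


# A hand-curated test set; grow it from 10 cases toward hundreds over time.
CASES = [
    ("How long do I bake the banana bread?", ["55 minutes"]),
    ("What goes in the dal?", ["lentils", "cumin"]),
    ("How do I make beef wellington?", ["only know"]),  # out-of-catalog question
]

failures = run_eval(fake_recipe_bot, CASES)
```

Keeping the cases in a plain list makes it easy to rerun the same set after every prompt change and to add a new case whenever a friend or family member finds a bad answer.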

Kanchan Shringi 00:49:07 So definitely that number of test cases, but also, like Conner mentioned, observability and continuous monitoring is key. So again, shifting to roles in the typical enterprise, this was done by SREs, so how does that change who does this continuous monitoring and observability for AI models?

Jacob Visovatti 00:49:31 Yeah, that’s a fun question too. And I think it’ll probably be useful for us to think about this again from the viewpoint of our two worlds. One thing I’d say is that what is SRE’s responsibility, versus the responsibility of the application team, versus maybe even yet another separate operators group, is still one of the big things our industry is, I don’t know, wrestling with, or that varies a lot. This term DevOps is used in about every way that it possibly can be, such that if somebody says, well, I’m a DevOps engineer, I learn almost nothing about what that person does other than it’s probably interesting, hard, cool work. But I still need to ask a bunch more to know what that means. I can tell you that at Deepgram the pattern that we apply in the way that we think about this term DevOps is that the people who write the application are the same people who deploy it and monitor it and respond to incidents.

Jacob Visovatti 00:50:24 So the engineers who work on my teams who are building out our production inference API, they write the code for these services, they run the deployments or automate the deployments and they instrument these services, especially for performance and reliability concerns. Classic things like error rates, latencies and so forth. And when the services are having a bad day, it happens sometimes, they’re the ones who might get called in the middle of the night or interrupted during the business day to address it. We think about the software stack in fairly traditional ways in that sense. But Conner, I guess one of the growing areas of exploration that you and actually a new team have been thinking about quite a bit is how do we monitor things like data drift compared to the model’s training and what does that imply about a new kind of observability, right?

Conner Goodrum 00:51:13 That’s right. So the ability to set these, let’s say, triggers across data that’s coming in, being able to understand when a model is perhaps seeing more data that is out of distribution than it was trained for is incredibly, incredibly valuable because it indicates to us that a retrain is likely necessary to uphold the quality. And so being able to identify those instances and then being able to capture that data and store that data, process that data for training, filter it and evaluate the model across a whole slew of metrics. Out of distribution can mean a lot of things. It can mean increased word error rates, it can mean longer streaks of deletions. It could mean that an enterprise customer had initially been using us for one portion of their pipeline and has now added us to another portion of their pipeline, where the data is slightly different and maybe they’re using the same model. So being able to understand trends in these things over time helps us select and prepare data to automatically retrain these models to improve over time, such that in an ideal world, the customer never even knows that their model’s been retrained. They just are still sending queries to the same model and their responses have improved in terms of quality. So this data flywheel is incredibly, incredibly powerful and something we’re super, super excited about.
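One very simple form of the drift trigger Conner describes is to compare a summary statistic of recent production traffic against the same statistic over the training data. The Python sketch below standardizes the shift in the mean by the training standard deviation; this is a crude heuristic chosen for illustration, the monitored feature (audio duration in seconds) and the threshold of 2.0 are assumptions, and real systems typically use richer distribution comparisons.

```python
import statistics


def drift_score(training_values, recent_values):
    """Shift of the recent mean from the training mean, measured in
    training standard deviations."""
    mu = statistics.mean(training_values)
    sigma = statistics.stdev(training_values)
    if sigma == 0:
        raise ValueError("training values have zero variance")
    return abs(statistics.mean(recent_values) - mu) / sigma


def needs_retrain_review(training_values, recent_values, threshold=2.0):
    """Flag the model for review when recent data has drifted past the
    (illustrative) threshold."""
    return drift_score(training_values, recent_values) > threshold
```

The same check could be run per customer and per feature (duration, confidence, estimated noise level), with flagged windows feeding the capture, filter, and retrain steps described above.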

Kanchan Shringi 00:52:40 What’s an example of an emerging testing tool or framework that you found useful? As opposed to, like I said creating test cases with the human. Are there cases where you can use AI to test AI?

Jacob Visovatti 00:52:56 Certainly. Conner, I think there’s probably a big one in synthetic data that you’ll want to talk about. I can say from the software side of things, there’s a technique that’s not unique to AI or necessarily brand new, but is really, really important, which is fuzz testing. And there are a lot of application contexts where fuzz testing is not relevant, but it’s darn relevant in the world of AI. And this is essentially the concept where you can say, okay, we cannot fully enumerate our input space. Compare that to, say, birth date entry. If you’re only going to accept dates between 1900 and the current day, then you could theoretically enumerate that entire space. You might not write a test case for every single one. Maybe you’d find some way to break that down in a useful way, but you could theoretically enumerate every single input possibility there.

Jacob Visovatti 00:53:44 Now once you expand your potential input space to a thousand times beyond that or 10,000 times beyond that, it’s no longer feasible to run a test on every possible input. And this is the kind of thing we see with, I don’t know, certain matrix multiplication mathematical operations that are core to a neural network. And so instead, this fuzz testing technique says, okay, let me run 100 or maybe a thousand randomly generated inputs on every single test run. So every time a developer is just running the unit tests as part of a local development cycle or pushing something to CI, or the end-to-end tests are running for pre-production verification, you’re just getting another several thousand test cases. And what this means is that you don’t have certainty that you handle that entire input space, but over time you probabilistically increase your confidence in handling this general input space. This turns out to be a pretty powerful technique for a few different areas within software engineering and certainly one that we’re increasingly applying in the AI world. But Conner, I feel like the synthetic data piece is really the cool thing to talk about here.
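A minimal Python sketch of the fuzz testing technique described above: each test run draws fresh random inputs and checks invariants that must hold for any input, rather than enumerating cases. The function under test here is a plain softmax, chosen only as a stand-in for the neural-network math Jacob mentions; the trial count and input ranges are illustrative assumptions.

```python
import math
import random


def softmax(xs):
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def fuzz_softmax(trials=1000, seed=None):
    """Check softmax invariants on randomly generated inputs.

    Pass a seed to replay a failing run; leave it as None so every
    ordinary test run explores new inputs.
    """
    rng = random.Random(seed)
    for _ in range(trials):
        n = rng.randint(1, 64)
        xs = [rng.uniform(-1e4, 1e4) for _ in range(n)]
        ys = softmax(xs)
        assert len(ys) == n
        assert all(0.0 <= y <= 1.0 for y in ys)
        assert math.isclose(sum(ys), 1.0, rel_tol=1e-9)
    return trials
```

Calling `fuzz_softmax()` from a unit test adds another thousand cases on every local run and every CI run, which is how confidence in the input space accumulates over time without ever being exhaustive.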

Conner Goodrum 00:54:51 It is super cool and definitely the thing that I’m most excited about, the ability to sort of, to your question, can you use AI to test AI? The answer is overwhelmingly yes. You can, using an LLM, you basically take the two last stages in this voice agent where you input text, perhaps it’s keywords of interest, perhaps it’s certain sentences, perhaps it’s strings of numbers. You pass that to an LLM and have it generate you instances used in natural conversation of that term or those digits, and then you vocalize them in M voices could be varying accents, could be various languages. And then you are able to apply augmentations to that. You make it sound like somebody’s answering a phone in traffic standing next to a highway. You make it sound like somebody is speaking in a busy phone call center.

Conner Goodrum 00:55:42 There are ways of doing that in a pipelined approach. There are also ways of doing that in a fully profitable sense, but the ability to generate large swaths of synthetic data that you’re able to run tests on is very, very revealing for limitations of the model and where the model excels. And being able to incorporate that data into training, if you are able to sufficiently, let’s say, replicate or make the synthetic data as in distribution as possible for your production case, then it becomes very, very valuable in your ability to improve the models over time and also understand their shortcomings.
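Two pieces of the pipelined synthetic data approach Conner walks through can be sketched without any speech libraries: generating text that embeds digit strings in conversational sentences, and mixing background noise into audio at a chosen signal-to-noise ratio. In the Python sketch below, the fixed templates are a stand-in for the LLM step, the text-to-speech step is omitted entirely, and audio is represented as plain lists of float samples; all names are hypothetical.

```python
import math
import random

# Stand-in for the LLM step: fixed conversational templates around the digits.
TEMPLATES = [
    "My social is {digits}.",
    "Sure, the number is {digits}, did you get that?",
]


def make_utterance_texts(n, rng):
    """Generate n sentences that each embed a random nine-digit string."""
    texts = []
    for _ in range(n):
        digits = " ".join(str(rng.randint(0, 9)) for _ in range(9))
        texts.append(rng.choice(TEMPLATES).format(digits=digits))
    return texts


def rms(samples):
    """Root-mean-square amplitude of a list of samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def mix_at_snr(speech, noise, snr_db):
    """Scale noise so that 20*log10(rms(speech) / rms(scaled noise)) equals
    snr_db, then add it to the speech. Inputs should be the same length."""
    gain = rms(speech) / (rms(noise) * 10 ** (snr_db / 20))
    return [s + gain * n for s, n in zip(speech, noise)]
```

Sweeping `snr_db` from clean down to very noisy values on the same synthesized utterance gives a controlled way to see where transcription of the digits starts to break down.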

Kanchan Shringi 00:56:18 Thank you. So I think the two things come to mind today from everything we’ve talked about is production data and synthetic data. It’s really important to get that right.

Conner Goodrum 00:56:28 Absolutely.

Kanchan Shringi 00:56:29 What recommendations would you have for engineers and listeners looking to improve their ML model testing strategies and abilities?

Conner Goodrum 00:56:38 From the synthetic data side, I would say explore all the different options that are out there from a text to speech perspective or if audio isn’t your domain, think about methods that you can implement LLMs and various AI applications to generate test data for you and think critically about what your production data looks like. How are your users interacting with your system most frequently? What parameters and conditions are they using? And then how can you best replicate that type of data if you don’t have access to production data in order to be more proactive in your testing. And to think about edge cases, even though they’re sort of innumerable, the ability to generate edge cases with synthetic data opens up a whole swath of possibilities in expanding testing beyond having to set up manual test cases or go out and curate data manually. It certainly expands the numerability, if you will, of the space.

Jacob Visovatti 00:57:34 I think that those are pretty amazing suggestions. I worry that I might get completely fired from Deepgram if I don’t mention the longstanding knowledge that we have here, which is, there’s an informal company saying, which is to listen to the bleeping audio, intentionally censored here for your audience. One of the important things that we’ve seen is that you have a question about something that’s going wrong in the world of AI where you’re dealing with unstructured inputs, unstructured outputs, and pretty complex computationally intensive processes going on in the middle, and it’s easy to jump to too high of a level of tooling sometimes, as important as that tooling is. One of the bits of wisdom that has really helped Deepgram through interesting problems time and time again is, in our world where we’re so often dealing with audio inputs and outputs, listen to the audio.

Jacob Visovatti 00:58:29 If I was testing LLMs and wanted to make sure that an LLM-centric system was going well, I would read the inputs that users are sending, read the outputs. And I would do that before worrying about reinforcement learning with human feedback, before human preference testing, before any of these dataset characterization things. I would start to form my own human intuition just by getting my hands dirty in the soil, I guess, is kind of the way of it. I’ve always found that Deepgram point of view very intuitive because I came from a world in which I taught music, and if you really want to understand what’s going on with some student’s playing, you need to listen carefully and you need to look and get a feel for what’s going on. You can’t, say, listen to one recording in isolation; you really need to get a more holistic sense. So that’s a little bit of wisdom that I would just really encourage: look at the data, even if it’s raw bytes, look at them.

Kanchan Shringi 00:59:29 So you’re saying sure, of course. Use tooling, scale the testing, generate synthetic data, monitor, but also do just basic validations.

Jacob Visovatti 00:59:39 Oh, yeah. Yeah.

Kanchan Shringi 00:59:41 Awesome. Is there anything you’d like to cover today that we haven’t talked about?

Conner Goodrum 00:59:46 No, not from my end. Thank you very much for a super, super interesting conversation.

Jacob Visovatti 00:59:49 Yeah, likewise. Just really grateful to talk about these things. I guess the final thing is that Deepgram’s always hiring. Check out our website, because I’m sure a lot of your listeners would be great candidates for us, so check out that website and we’d love to talk to some of the listeners that way.

Kanchan Shringi 01:00:04 Thank you so much for coming on.

Jacob Visovatti 01:00:07 Thank you. Thank you. [End of Audio]