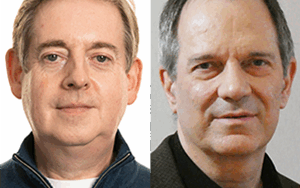

Diomidis Spinellis talks with Marc Hoffmann about code test coverage and the tools that can analyze it. Knowing the percentage of code that is covered by tests can help developers assess the quality of their test cases, add missing tests, and thereby find and remove software faults. Marc is a key developer behind JaCoCo, a free code coverage library for Java. JaCoCo works directly on Java bytecode, without requiring access to the corresponding source code. It is integrated in mainstream IDEs and can easily be used as part of a software project's continuous integration process. A controversial issue associated with test coverage is the percentage of code that should be covered. Here Marc offers a valuable insight: code coverage metrics should be used to improve test quality during development, and developers should focus more on the quality of the test code than on the amount of test coverage. Adding test cases after the code has been written, purely to increase test coverage, typically amounts to window dressing.

Show Notes

Related Links

- Java Code Coverage for Eclipse

- JaCoCo Java Code Coverage Library

Related SE Radio episodes

- Episode 256: Jay Fields on Working Effectively with Unit Tests

Related IEEE Software articles

- Automating Software Testing using Program Analysis

- Comprehensive Multiplatform Dynamic Program Analysis for Java and Android

- Unit tests reloaded: Parameterized Unit Testing with Symbolic Execution

Transcript

Transcript brought to you by IEEE Software

Diomidis Spinellis 00:00:18 Hello, this is Diomidis Spinellis for Software Engineering Radio. Today I have with me Marc Hoffmann, and I will be talking with him about code coverage analysis and the JaCoCo tool. Marc Hoffmann is considered the leading expert in bytecode coverage analysis. Marc wrote EclEmma, a free Java code coverage tool for Eclipse, which won the Best Open Source Eclipse-Based Developer Tool Community Award in 2008. Later, Marc started the JaCoCo project as a modern code coverage backend for EclEmma and other tools. It is now widely used as a code coverage engine, including for OpenJDK development. For his contributions to the community, Marc was recognized as a Java Champion in 2014. Marc, welcome to Software Engineering Radio.

Marc Hoffmann 00:01:05 Hello, Diomidis. Thank you for having me here today.

Diomidis Spinellis 00:01:08 What is code coverage analysis?

Marc Hoffmann 00:01:10 Well, it's a dynamic metric. You execute a piece of code, or your application under test, and record the pieces of code that have been executed: which lines of code, which branches have been taken, which instructions. So you get detailed metrics of what has been executed. You typically use that for automated tests, usually unit tests. You have a unit test suite, and the coverage analysis tells you something about the quality of your unit tests, especially their completeness: which parts of your code base have been executed by your test suite?

Diomidis Spinellis 00:01:49 And why is this useful to know?

Marc Hoffmann 00:01:53 Well, this gives you a good idea, especially about the untested parts of your code base. You can identify the places that need more testing, where you should write more tests, and have a look at them. Maybe there's also a chance to delete code, because maybe the code is not required at all. So it helps you keep your code base clean and tested.

Diomidis Spinellis 00:02:20 How would projects typically use code coverage and test coverage analysis?

Marc Hoffmann 00:02:26 First of all, you need to somehow integrate it into your tool chain. The simplest way is to have the code coverage tool running directly in your IDE. Most IDEs, like Eclipse, support code coverage out of the box today. Eclipse, for example, now ships the EclEmma plugin, our first plugin, which is now part of the Eclipse platform. This is the simplest way to get started, but then you should really think about making it part of your continuous integration pipeline, for example your Maven build, or Gradle; all of the build systems have integrations for code coverage nowadays. So you can continuously monitor the code coverage, and you might also put a tool on top of it, for example SonarQube, to get a time series of how your code base evolves with respect to various metrics. One of them might be code coverage.

Diomidis Spinellis 00:03:23 Often in continuous integration you have something that fails the build. Do you have a specific limit regarding code coverage? Is there a metric and a value that you use for that purpose?

Marc Hoffmann 00:03:36 Actually, I get this question quite a lot: what is a good percentage? The issue here is that the fact that a particular piece of code has been covered only means it has been executed. It says nothing about the quality and the value of the test. For example, think about unit tests that simply invoke code but do not have any assertions. This is what you really want to avoid in your project. So my typical advice is to keep an eye on the untested code. Where do you have areas of untested code in your code base, and how can you add valuable tests to this untested code? Or maybe think about just deleting this code; maybe it's not necessary anymore. What I typically recommend to teams is not to care about the percentage, but to care about the total amount of untested code, and over the course of time make sure that the absolute amount of untested code does not increase.
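A minimal sketch of the build gate Marc describes, assuming the CI job can obtain the number of missed and covered lines (or instructions) for a baseline build and for the current build, for example from a coverage report. The class and method names are hypothetical. The point is that the check compares absolute amounts of untested code rather than percentages: in the example, coverage rises from 80% to 87.5% while 50 more lines go untested, and the gate still fails.

```java
import java.util.Locale;

/** Hypothetical CI gate: fail when the absolute amount of untested code grows. */
final class UntestedCodeGate {

    /** Coverage numbers for one build, e.g. missed and covered lines. */
    static final class Snapshot {
        final long missed;
        final long covered;

        Snapshot(long missed, long covered) {
            this.missed = missed;
            this.covered = covered;
        }

        double percentage() {
            long total = missed + covered;
            return total == 0 ? 100.0 : 100.0 * covered / total;
        }
    }

    /** The build passes only if untested code did not increase, whatever the percentage says. */
    static boolean passes(Snapshot baseline, Snapshot current) {
        return current.missed <= baseline.missed;
    }

    public static void main(String[] args) {
        Snapshot baseline = new Snapshot(200, 800);  // 80.0% covered
        Snapshot current = new Snapshot(250, 1750);  // 87.5% covered, 50 more untested lines
        System.out.println(String.format(Locale.ROOT, "%.1f%% -> %.1f%%, passes=%b",
                baseline.percentage(), current.percentage(), passes(baseline, current)));
        // prints: 80.0% -> 87.5%, passes=false
    }
}
```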

Diomidis Spinellis 00:04:38 So developers should look at the code that has not been tested in a qualitative manner, see what type of code it is, and decide whether additional tests are needed. That's your advice.

Marc Hoffmann 00:04:50 Absolutely. And keep an eye on the untested code. Don't rely on the fact that you have 80 or 90% code coverage, because the unit tests could be useless. Especially if you create a competition between teams, or if you make it a management metric, there's always the possibility that developers start writing useless unit tests just for the sake of the metric. This is local optimization.

Diomidis Spinellis 00:05:18 Makes sense. It happens with many metrics. Can a tool allow you to see such things, such gaming, or is it just the result of a bad management focus, with nothing that can be done about it?

Marc Hoffmann 00:05:29 No, a pure code coverage tool cannot tell you about that. There are other tools, like mutation testing. Mutation testing actually checks whether the unit tests check the behavior of your code. In mutation testing, which is quite an expensive metric, you modify the code under test. For example, the tool changes boundary checks in the production code on the fly and verifies that a corresponding test fails. So if you replace a less-than operator with a greater-than operator and no test fails, this means that your test suite is probably not complete; there's no proper verification of that behavior.
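To make the idea concrete, here is a hand-rolled illustration, not how any particular mutation testing tool is implemented: an original method, a mutant in which `<` has been replaced by `>`, and two "tests". The test without a meaningful assertion passes on both versions, so the mutant survives even though the code is covered; the test that checks results around the boundary kills it. All names are hypothetical.

```java
import java.util.function.IntPredicate;
import java.util.function.Predicate;

final class MutationDemo {

    /** Production code: is the value below the limit of 10? */
    static boolean isBelowLimit(int value) {
        return value < 10;
    }

    /** Mutant: the relational operator has been replaced (< becomes >). */
    static boolean isBelowLimitMutant(int value) {
        return value > 10;
    }

    /** A "test" that executes the code but asserts nothing, so it always passes. */
    static boolean weakTest(IntPredicate impl) {
        impl.test(5); // executed, therefore counted as covered
        return true;
    }

    /** A test that checks the behavior below, at, and above the boundary. */
    static boolean strongTest(IntPredicate impl) {
        return impl.test(5) && !impl.test(10) && !impl.test(15);
    }

    /** A mutant is killed if the test passes on the original but fails on the mutant. */
    static boolean kills(Predicate<IntPredicate> test) {
        return test.test(MutationDemo::isBelowLimit)
                && !test.test(MutationDemo::isBelowLimitMutant);
    }

    public static void main(String[] args) {
        System.out.println("weak test kills mutant:   " + kills(MutationDemo::weakTest));   // false
        System.out.println("strong test kills mutant: " + kills(MutationDemo::strongTest)); // true
    }
}
```

Both tests give the same code coverage; only the second one would notice the inverted condition.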

Diomidis Spinellis 00:06:14 So the test is not exercising that code as it should be. It's not properly testing it.

Marc Hoffmann 00:06:18 Maybe it's exercising it, but it doesn't care about the result, because you completely inverted the meaning of the condition and nobody noticed. So that's a more valuable metric, but depending on how you execute it, it's a very expensive metric and takes a long time to actually execute and build.

Diomidis Spinellis 00:06:40 Continuing on metrics, I have two questions. First of all, there are multiple metrics for code coverage: function, statement, branch, condition, and so on. Are there particular things we should be aiming for with those metrics, or in general are you looking just at covered and untested code?

Marc Hoffmann 00:06:59 Okay. It depends a bit on your use case. Obviously, what's very easy to understand is line coverage. You can think of lines of source code; everybody can think about this, and it's a very simple metric. But there are more advanced metrics. I really recommend having a look at, for example, the cyclomatic complexity. This is an old, formal definition that basically gives you an idea of the number of independent test cases you need to test a specific method. So if your method has a cyclomatic complexity of five, you need at least five independent test cases to make sure all branches and all independent paths within the method are executed. And this is quite interesting, so we integrated this in JaCoCo and provide metrics called missed complexity and covered complexity. So if our method from the example has complexity five and a missed complexity of two, this means two unit tests are missing. This is quite interesting if you have a large project: if, to get full coverage, the missed complexity is around, let's say, 500, it would cost you 500 unit tests to get full coverage on this project.
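The arithmetic behind Marc's example can be sketched as follows. JaCoCo's documentation defines a method's complexity as v(G) = B - D + 1, where B is the number of branches and D the number of decision points; for two-way decisions that is simply D + 1. How the missed part is counted here is an assumption made for illustration: each decision point with b branches contributes b - 1 to the complexity, of which "covered branches minus one" counts as covered and the rest as missed, and the method's base path counts as missed only if the method never ran. The class and method names are hypothetical.

```java
import java.util.Arrays;
import java.util.List;

final class ComplexityDemo {

    /** One decision point (an if, a loop condition, a switch) in a method. */
    static final class Decision {
        final int branches;        // total outgoing branches
        final int coveredBranches; // branches executed by the tests

        Decision(int branches, int coveredBranches) {
            this.branches = branches;
            this.coveredBranches = coveredBranches;
        }
    }

    /** Cyclomatic complexity v(G) = B - D + 1. */
    static int complexity(List<Decision> decisions) {
        int b = 0;
        for (Decision d : decisions) {
            b += d.branches;
        }
        return b - decisions.size() + 1;
    }

    /** Missed complexity under the counting rule assumed above. */
    static int missedComplexity(List<Decision> decisions, boolean methodExecuted) {
        int missed = methodExecuted ? 0 : 1; // base path of the method
        for (Decision d : decisions) {
            int covered = Math.max(0, d.coveredBranches - 1);
            missed += Math.max(0, d.branches - covered - 1);
        }
        return missed;
    }

    public static void main(String[] args) {
        // A method with four if statements: two fully tested, two with only one branch taken.
        List<Decision> method = Arrays.asList(
                new Decision(2, 2), new Decision(2, 2),
                new Decision(2, 1), new Decision(2, 1));
        System.out.println("complexity=" + complexity(method)
                + ", missed=" + missedComplexity(method, true)); // complexity=5, missed=2
    }
}
```

Summing the missed complexity of all methods gives the project-wide figure Marc uses to estimate how many additional test cases full coverage would take.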

Diomidis Spinellis 00:08:22 So you know the amount of work that is required. Right. And full coverage is something we should be aiming for, not as a metric, but as a goal. Is that right?

Marc Hoffmann 00:08:32 No, actually it depends on the approach. If you have it, it's nice, but doing it just for the sake of coverage typically leads to completely useless unit tests. This is what I was talking about before. But if the process is that you work in a completely test-driven way and always work test first, so whenever you fix a bug you first write a reproducer, which is a unit test, and whenever you implement a new feature or a new component you always write the test first, this is definitely the best process you can follow. This is what I always recommend, because besides code coverage you get other benefits in terms of quality for your code base. If you write unit tests first, think of the unit test as the first client of your API. If you design the API of a component, the unit test is the first verification of whether the API is usable and whether it actually makes sense.

Diomidis Spinellis 00:09:38 And if you follow that process, you will arrive at full test coverage, but as a result of the process, not as an explicit goal, right?

Marc Hoffmann 00:09:46 Absolutely, absolutely. And you get more value than just code coverage. Code coverage as such has no value for your project.

Diomidis Spinellis 00:09:54 I think that's an insight our listeners will appreciate. Can you give some examples of industries or projects that achieve high coverage, or where high coverage is required?

Marc Hoffmann 00:10:05 Of course. In industries like railways, which deal with safety-relevant software components, or in airplanes, there are specifications that require a certain level of coverage or full test coverage of the code. But this is not what we can provide with the Java tooling, because the Java platform itself is not certified for such environments.

Diomidis Spinellis 00:10:31 Uh huh. So you need a certified tool.

Marc Hoffmann 00:10:33 For that, and a very restricted runtime environment. All the dynamic memory allocation we have in Java is not allowed by the requirements of these environments.

Diomidis Spinellis 00:10:44 To finish up the coverage discussion: is there a sweet spot beyond which additional coverage is a lot of effort for minimal benefit, say something like 80%? Does it depend on the project, or is there another way to judge when we have enough testing, other than intuition, as we discussed?

Marc Hoffmann 00:11:09 Well, it depends on the nature of the tested code. I always recommend splitting your code into components that are well unit tested, especially your business logic, the core value of your application, and separating these clearly from what I would call glue code, for example code that wires up database connectivity or file system access. I always recommend providing abstractions, for example for file system access or database access, so you can create real unit tests for the core part of the application without having a database in the backend. For the glue code as such, it's probably not that valuable to spend too much effort on unit tests and mocking. Here I really recommend integration tests, to make sure that the glue code and the whole application actually work with a real database, but this is a different test set.
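A minimal sketch of that separation, with all names hypothetical: the business rule depends only on a small `OrderRepository` interface, so a unit test can supply an in-memory fake. The real database-backed implementation, which is glue code and is not shown, would be covered by integration tests instead.

```java
import java.util.HashMap;
import java.util.Map;

/** Abstraction over persistence; a production implementation would wrap the database. */
interface OrderRepository {
    /** Total amount already ordered by the customer, in cents. */
    long totalOrderedCents(String customerId);
}

/** Core business logic: unit-testable without any database. */
final class DiscountPolicy {
    private final OrderRepository orders;

    DiscountPolicy(OrderRepository orders) {
        this.orders = orders;
    }

    /** Customers who have ordered at least 1000.00 get a 5% discount. */
    int discountPercent(String customerId) {
        return orders.totalOrderedCents(customerId) >= 100_000 ? 5 : 0;
    }
}

/** In-memory fake used by unit tests in place of the database. */
final class InMemoryOrderRepository implements OrderRepository {
    private final Map<String, Long> totals = new HashMap<>();

    InMemoryOrderRepository with(String customerId, long cents) {
        totals.put(customerId, cents);
        return this;
    }

    @Override
    public long totalOrderedCents(String customerId) {
        return totals.getOrDefault(customerId, 0L);
    }
}
```

A unit test would construct `new DiscountPolicy(new InMemoryOrderRepository().with("alice", 150_000))` and check the discount, while coverage of the database adapter comes from the separate integration test set.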

Diomidis Spinellis 00:12:15 Is test coverage more useful for judging the value of your unit tests, or is it also important for integration testing?

Marc Hoffmann 00:12:24 As for integration testing, I think integration tests should always be feature driven. Your application has a certain set of features, and you should have a clear mapping between your integration tests and your features, so you have proof that a particular feature has actually been tested. When you go down to the level of unit tests, the components you're testing are Java classes, and typically it's pretty tough to map a single Java class to a feature of your application. So it's more about functional coverage when it comes to component testing, but code coverage is still a nice metric to look at. For example, you can still identify areas in your code, or maybe modules, that are not tested at all by your component tests. So you might identify use cases that have been missed in the test scenarios.

Diomidis Spinellis 00:13:23 And add new test scenarios in order to exercise those, right? Moving down to specific methods: Java methods often have exception handling, try-catch blocks. How do you deal with that? Do you find it useful to test and measure coverage of both the main code and the part that deals with the exceptions, or is it very difficult to do that?

Marc Hoffmann 00:13:49 That's an interesting question. First of all, it's difficult to do. If you want to do it, you need proper abstractions, and you need to be able to mock, for example, the underlying system to emulate the exception. Let's say you have a database driver and there's a connectivity problem; then you need to find a way to mock the database driver and actually simulate this exception. But this is something you really should focus on, not only in testing but also in the design: how you anticipate failure. What we've seen a lot in systems is that error handling is actually the least tested part, and that the original error, say a broken database connection, is not the problem. The problem is the broken error handling in your application. Let's say you have a web shop and there's a spontaneous database connection drop. One user might get an error when performing a certain operation. But what typically happens is that the actual error handling causes much more damage, for example by doing endless retries, and breaks down the whole system. This is what we've seen a lot in the past. So error handling is quite critical, and actually testing it, especially integration testing it, is what I recommend.
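One way to test such error handling without a real database, sketched under stated assumptions: data access sits behind a hypothetical `Connector` interface, a stub simulates the connection drop by throwing, and the code under test uses a bounded retry instead of the endless retries Marc warns about. A test can then check both that the failure is reported and that the number of attempts stays bounded, which also covers the `catch` path.

```java
import java.io.IOException;

/** Hypothetical abstraction over the database driver. */
interface Connector {
    String query(String sql) throws IOException;
}

final class ResilientReader {
    private final Connector connector;
    private final int maxAttempts;

    ResilientReader(Connector connector, int maxAttempts) {
        if (maxAttempts < 1) {
            throw new IllegalArgumentException("maxAttempts must be at least 1");
        }
        this.connector = connector;
        this.maxAttempts = maxAttempts;
    }

    /** Tries the query at most maxAttempts times, then reports the last failure. */
    String read(String sql) throws IOException {
        IOException last = null;
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            try {
                return connector.query(sql);
            } catch (IOException e) {
                last = e; // remember the failure; retry, but only a bounded number of times
            }
        }
        throw last;
    }
}
```

In a test, a lambda such as `sql -> { calls.incrementAndGet(); throw new IOException("connection dropped"); }` plays the role of the broken driver, and the assertion is that `read` fails after exactly `maxAttempts` calls.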

Diomidis Spinellis 00:15:19 So it's back to integration testing. Right. Let's turn our focus to tools, which are, as you said, very important for test coverage. How exactly are tools used to measure test coverage? What are the specific steps one should take in order to use them?

Marc Hoffmann 00:15:45 Maybe I'll first tell you about the technical background of how test coverage is actually measured; then we can see how this is integrated in various tools. The trick here is that the JaCoCo code coverage tool is completely based on Java bytecode. The only input it needs is your class files, which could be in JARs or WARs or whatever binaries. We don't care about the source code at all. This bytecode is so-called instrumented. Instrumentation means that the control flow in each method is inspected and additional code is inserted. This code is called probes, and the probes record the fact that a certain piece of code has been executed. From this information we can infer the coverage of every line, every instruction, every branch. So this is the trick behind it, and to do this modification we use a hook in the JVM, which is called a Java agent.
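The effect of probes can be imitated by hand at the source level. JaCoCo inserts its probes into bytecode, not source, and decides where they go from its control-flow analysis, so the method below is only a sketch of the idea, assuming one `boolean` flag per probe kept in a per-class array. The names `PROBES` and `report` are hypothetical.

```java
import java.util.Arrays;

final class InstrumentedAbs {

    /** One flag per probe; a coverage agent would collect these after the run. */
    static final boolean[] PROBES = new boolean[2];

    /** Original method: return x < 0 ? -x : x; instrumented by hand below. */
    static int abs(int x) {
        if (x < 0) {
            PROBES[0] = true; // the "negative" branch was executed
            return -x;
        }
        PROBES[1] = true;     // the "non-negative" branch was executed
        return x;
    }

    /** Infers branch coverage from the probe flags. */
    static String report() {
        int covered = 0;
        for (boolean hit : PROBES) {
            if (hit) {
                covered++;
            }
        }
        return covered + " of " + PROBES.length + " branches covered";
    }

    static void reset() {
        Arrays.fill(PROBES, false);
    }

    public static void main(String[] args) {
        abs(5); // a test suite that only checks positive input
        System.out.println(report());               // 1 of 2 branches covered
        System.out.println(Arrays.toString(PROBES)); // [false, true]
    }
}
```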

Marc Hoffmann 00:16:44 The Java agent is an extension to the JVM. You load it at startup, typically specified on the command line, and this agent allows various interactions with the JVM. One possible interaction is to transform the binary class files before they are actually loaded into the JVM, and this is where we hook in. So the first and only point of integration for measuring code coverage of an application is to specify the JaCoCo Java agent, which is basically a small command-line option. You can do this manually on the command line, for example when you start an application server, but all of our tools provide little utilities that produce the correct syntax for you and add it to the respective configuration. For example, if you have a Maven build and use Maven Surefire to run your unit tests, the JaCoCo Maven goal will prepare a specific property for the Surefire runner that adds the JaCoCo agent to your build.
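For readers who have not written one: a Java agent is a class with a `premain(String, Instrumentation)` method, packaged in a JAR whose manifest names that class in a `Premain-Class` entry, and attached with the standard `-javaagent:` JVM option. The sketch below registers a `ClassFileTransformer` that merely records which classes are about to be loaded and returns `null`, which tells the JVM to leave the class unchanged; a coverage agent would instead return instrumented bytes. The class name `LoggingAgent` is hypothetical.

```java
import java.lang.instrument.ClassFileTransformer;
import java.lang.instrument.Instrumentation;
import java.security.ProtectionDomain;
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;

// In a real agent JAR this class would usually be public and live in its own file.
final class LoggingAgent {

    /** Internal names (e.g. "com/example/Foo") of classes seen by the transformer. */
    static final List<String> SEEN = new CopyOnWriteArrayList<>();

    /** Called by the JVM with the raw bytes of each class before it is defined. */
    static final ClassFileTransformer TRANSFORMER = new ClassFileTransformer() {
        @Override
        public byte[] transform(ClassLoader loader, String className,
                                Class<?> classBeingRedefined,
                                ProtectionDomain protectionDomain,
                                byte[] classfileBuffer) {
            SEEN.add(className);
            return null; // null = no change; an instrumenting agent returns modified bytes here
        }
    };

    /** Entry point invoked by the JVM when started with -javaagent:<agent jar>. */
    public static void premain(String agentArgs, Instrumentation inst) {
        inst.addTransformer(TRANSFORMER);
    }
}
```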

Marc Hoffmann 00:17:56 This is the first part, and maybe the simple part. JaCoCo then produces a so-called execution data file, which is again a binary file in which the information about the execution of the probes is persisted. In the second step you want to create a nice report, like an HTML or XML report, and see the total figures. Here you need another tool, the report generator, which takes the execution data file and the original class files and creates a report out of them. So typically, for Maven for example, we have two steps. In the case of Maven, the first is prepare-agent, which adds the agent to your runtime before you start your tests. The second step then creates a report out of the data that has been gathered at runtime. This is basically the integration you do with every tool. For the Eclipse IDE, for example, this is completely hidden by the EclEmma plugin. The EclEmma plugin does all the magic for you behind the scenes: when you launch any kind of Java program in coverage mode, the Java agent is automatically added to the command line that is fired in the background.

Diomidis Spinellis 00:19:15 And is this cumulative? If you run multiple instances of your application, are the results collated together? How do you clear the results between runs, or when you decide you have changed the code?

Marc Hoffmann 00:19:28 By default, when you start a new JVM, the agent assumes nothing has been executed. It has no memory of previous runs. But if you specify the same execution data file for multiple runs, the data will be accumulated.

Diomidis Spinellis 00:19:48 So somehow your tool looks at the execution data file and determines whether it is the same or not, and if it is the same, it accumulates the data?

Marc Hoffmann 00:19:59 No, it simply appends new data to the existing file. When you create a report, the report generator reads in the execution data file and simply adds up all the data found in the file. So it's as simple as appending new data to the existing file.
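Accumulating runs works because a probe only ever goes from "not executed" to "executed". A sketch of the merge, assuming each run is represented as a map from a class identifier to its probe array (a simplified, hypothetical stand-in for the real execution data format): merging is an element-wise OR, so repeating the same tests changes nothing and running different tests can only add coverage.

```java
import java.util.HashMap;
import java.util.Map;

final class ExecDataMerge {

    /** Returns a new map: a probe is hit if it was hit in either run. Inputs are not modified. */
    static Map<String, boolean[]> merge(Map<String, boolean[]> a, Map<String, boolean[]> b) {
        Map<String, boolean[]> result = new HashMap<>();
        for (Map.Entry<String, boolean[]> e : a.entrySet()) {
            result.put(e.getKey(), e.getValue().clone());
        }
        for (Map.Entry<String, boolean[]> e : b.entrySet()) {
            boolean[] existing = result.get(e.getKey());
            if (existing == null) {
                result.put(e.getKey(), e.getValue().clone());
                continue;
            }
            if (existing.length != e.getValue().length) {
                // Same class id but a different probe layout: the data cannot be matched.
                throw new IllegalStateException("Incompatible probe data for " + e.getKey());
            }
            for (int i = 0; i < existing.length; i++) {
                existing[i] |= e.getValue()[i];
            }
        }
        return result;
    }
}
```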

Diomidis Spinellis 00:20:16 And does the test coverage code interfere with the measurement of the metrics, or do you not care and treat it like any other code?

Marc Hoffmann 00:20:24 No, it does not interfere with the original Java code, so it should not change the behavior of the code. That would of course be a bug that should be reported immediately, and I don't think we have that. But there is some interference with other tools that also use on-the-fly bytecode instrumentation, so they get in each other's way. Specifically for JaCoCo, the issue is that we need the class files in two places: at runtime, to actually instrument them and insert the probes, and later at analysis time, to read more information out of the class file, for example the debug information, so we can infer line coverage. We get the line coverage, which lines have been executed, from the debug information. For this purpose it is quite critical that we see the same class file at execution time and at analysis time. If the file at execution time has a different set of methods than the file at analysis time, we cannot match the data. And here's the critical part: some tools also modify the class files and change their structure, for example by adding new methods. Then the JaCoCo runtime sees a different class file than the JaCoCo report generator, and this causes problems.
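Why a rewritten class file breaks the match can be shown with a checksum. As far as we know, JaCoCo identifies each class by a 64-bit checksum of its raw class-file bytes; the sketch below uses `java.util.zip.CRC32` as a stand-in, a hypothetical `classId` helper, and made-up byte strings in place of real class files. Any change to the bytes between execution and analysis, such as another tool adding a method, produces a different identifier, so the recorded probe data no longer matches the class the report generator sees.

```java
import java.nio.charset.StandardCharsets;
import java.util.zip.CRC32;

final class ClassIdentity {

    /** Hypothetical class identifier: a checksum over the raw class-file bytes. */
    static long classId(byte[] classFile) {
        CRC32 crc = new CRC32();
        crc.update(classFile, 0, classFile.length);
        return crc.getValue();
    }

    /** Probe data recorded at run time is usable only if both sides agree on the id. */
    static boolean canMatch(byte[] atExecution, byte[] atAnalysis) {
        return classId(atExecution) == classId(atAnalysis);
    }

    public static void main(String[] args) {
        // Stand-ins for the same class before and after another tool rewrote it.
        byte[] original = "class Foo { void a(); }".getBytes(StandardCharsets.UTF_8);
        byte[] rewritten = "class Foo { void a(); void injected(); }".getBytes(StandardCharsets.UTF_8);
        System.out.println(canMatch(original, original.clone())); // true
        System.out.println(canMatch(original, rewritten));        // false
    }
}
```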

Diomidis Spinellis 00:21:54 And could reflection also be a problem, or is it transparently handled?

Marc Hoffmann 00:21:58 No, reflection is not a problem at all. Reflection does not change the structure of a class file. You can access the methods and the fields, but you cannot modify the structure or the behavior of a Java class definition with reflection, so this is not an issue for us. And if you call a method via reflection, yes, it will be shown as covered, just like a normal call.
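A small illustration of why reflection is transparent for coverage: a reflective call runs exactly the same method body as a direct call, so any probes in that body fire the same way. Here a counter stands in for a probe; the `Greeter` and `ReflectionDemo` classes are hypothetical.

```java
import java.lang.reflect.Method;

final class Greeter {
    static int calls = 0;

    static String greet(String name) {
        calls++; // stands in for a probe: records that the body executed
        return "Hello, " + name;
    }
}

final class ReflectionDemo {

    /** Invokes Greeter.greet through the reflection API instead of a direct call. */
    static String greetReflectively(String name) throws ReflectiveOperationException {
        Method m = Greeter.class.getDeclaredMethod("greet", String.class);
        return (String) m.invoke(null, name); // static method, so no receiver object
    }
}
```

After one reflective and one direct call, `Greeter.calls` is 2: the body was executed twice, and a coverage tool would record it as covered either way.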

Diomidis Spinellis 00:22:25 You mentioned that JaCoCo is used by Maven and Eclipse. Do other IDEs and environments support JaCoCo?

Marc Hoffmann 00:22:35 Sure. We know about, I think, 15 integrations in commercial and noncommercial tools, integrations we do not maintain ourselves. There are integrations for basically every IDE, like NetBeans or IntelliJ. They all have integrations, but we're not providing them; the IDE vendors themselves picked up JaCoCo as a tool.

Diomidis Spinellis 00:23:00 You also mentioned SonarQube. Is JaCoCo also used by other similar frameworks and environments?

Marc Hoffmann 00:23:09 I don't know exactly which closed-source tools are using it, but I know, for example, about TeamCity, UrbanCode, and also Visual Studio Team Services. These commercial tools provide dashboards for continuous integration, or continuous integration services, and as far as I know they also use JaCoCo.

Diomidis Spinellis 00:23:33 Are there other tools in this space, in the code coverage analysis for Java?

Marc Hoffmann 00:23:39 Sure. There's another bytecode-based code coverage tool, which is also quite old and uses basically the same principles as JaCoCo. And then there is a tool based on source code instrumentation, which means the source code is modified rather than the bytecode, and that is Clover. It was a commercial product by Atlassian, but just recently they open-sourced it. It's called OpenClover, and I think you can now find it at openclover.org. This is the original source code, now provided to the community, and it's still maintained by the original authors. It's a pretty nice tool. They offer per-test coverage, so you get traceability between specific unit tests and the lines touched by each unit test.

Diomidis Spinellis 00:24:35 Which cannot be done through binary instrumentation?

Marc Hoffmann 00:24:39 I think it could be done. But we are targeting large-scale projects, with, let's say, 1 million lines of code or a hundred thousand unit tests, and so far we haven't found a clever data model that stores per-test coverage for such projects in a scalable way without a relevant decrease in performance. And this is probably the main benefit of JaCoCo: there's literally no performance regression if you run JaCoCo in your build.
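A rough calculation suggests why per-test coverage is hard to store at that scale. Assuming a naive, uncompressed layout with one bit per (line, test) pair, which is an illustration and not any tool's actual data model, the project size Marc mentions gives:

$$
10^{6}\ \text{lines} \times 10^{5}\ \text{tests} = 10^{11}\ \text{bits} = \frac{10^{11}}{8}\ \text{bytes} = 1.25 \times 10^{10}\ \text{bytes} \approx 12.5\ \text{GB}
$$

That is per test run, compared with about $10^{6}$ bits (125 kB) when only aggregate line coverage is kept. Sparse or compressed storage would shrink the per-test figure considerably, but a data model still has to close a gap of roughly five orders of magnitude.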

Diomidis Spinellis 00:25:17 I was about to ask how one chooses a tool for coverage analysis, and whether there are drawbacks or advantages to specific tools or approaches.

Marc Hoffmann 00:25:27 Oh, there are. One thing is the runtime footprint: how much does it slow down your build or your test execution, for example? This is one thing you should look at. The other thing, of course, is the feature set: which features do you actually need? But one crucial thing is also the support for JDK versions. With the new cadence of JDK releases, it's pretty tough for tool vendors to provide support for the latest Java runtimes. We are pretty eager to keep up with the JDK release schedule. We are working closely with the OpenJDK project, and we recently released support for Java 10 just a couple of days after the official release was announced.

Diomidis Spinellis 00:26:19 It's tough to keep up with new releases; you need to adjust your instrumentation for every new JVM release.

Marc Hoffmann 00:26:28 It's very tough. The issue is that with Java, traditionally, the specification is released together with the implementation, so we don't have a time window to adapt to new features before we see the final release. Most likely there are not many changes at the very end, just before the release, but still we don't have a formal specification of the new bytecode format before we see a release. Also, the JaCoCo library itself needs a build stack; we use tools like Maven to build it. So here we have a chicken-and-egg problem: the tools for Java 10 aren't available yet, but people expect us to release a library for Java 10.

Diomidis Spinellis 00:27:14 When you say releasing a library for Java 10, does this mean that it will support the new features of Java 10, or that it will not run at all unless you have the new version of the library? Does a new JVM release break existing tools and the instrumentation support you have for bytecode?

Marc Hoffmann 00:27:34 You get both. The minimum issue is that you get a new bytecode version. The OpenJDK project decided to increment the bytecode version with every release, so there's no semantic versioning, and with every release we need to understand a new bytecode version. This is typically only header information, and that is the easy case, because it's just a new number we have to understand in the header. The interesting cases are when there's new functionality in the bytecode, for example new opcodes, new structures, or new ways to invoke methods. Or, for example, with Java 8 we got default methods in interfaces. These are things we need to deal with, of course, in Java code coverage.
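The "header information" Marc refers to is the start of every class file: the magic number `0xCAFEBABE`, followed by a 16-bit minor and a 16-bit major version. The sketch below reads it. For Java 5 and later, the major version minus 44 gives the Java release, so 52 is Java 8 and 54 is Java 10. A tool that rejects unknown major versions fails on each new release even when nothing else in the bytecode has changed. The class name is hypothetical.

```java
import java.io.ByteArrayInputStream;
import java.io.DataInputStream;
import java.io.IOException;
import java.io.InputStream;

final class ClassFileVersion {

    /** Reads the class-file header and returns the major version, e.g. 54 for Java 10. */
    static int majorVersion(InputStream classFile) throws IOException {
        DataInputStream in = new DataInputStream(classFile);
        if (in.readInt() != 0xCAFEBABE) {
            throw new IOException("Not a class file");
        }
        in.readUnsignedShort();        // minor version, ignored here
        return in.readUnsignedShort(); // major version
    }

    /** Maps a major version to a Java release; valid for Java 5 (49) and later. */
    static int javaRelease(int majorVersion) {
        return majorVersion - 44;
    }

    public static void main(String[] args) throws IOException {
        // Header of a class compiled for Java 10: magic, minor 0, major 54 (0x36).
        byte[] header = {(byte) 0xCA, (byte) 0xFE, (byte) 0xBA, (byte) 0xBE, 0, 0, 0, 0x36};
        int major = majorVersion(new ByteArrayInputStream(header));
        System.out.println(major + " -> Java " + javaRelease(major)); // 54 -> Java 10
    }
}
```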

Diomidis Spinellis 00:28:23 Does JaCoCo also work with other languages that compile to the JVM?

Marc Hoffmann 00:28:28 Of course. JaCoCo does not care about the source language. It only cares about class files, so for whatever runs on the JVM, you can measure code coverage. And it actually works pretty well with many other JVM languages, let's say Groovy, or Lombok; Lombok is not a language, of course, just an annotation framework. It also works with Kotlin. We have a repository with a long list of different JVM languages, with examples. The issue here is that many of these, especially high-level programming languages like Groovy, produce a lot of synthetic methods: additional code that is created by the compiler. You might see this code as uncovered, so the metrics you get for these languages might not be what you expect. So we are currently working on this with the respective projects, for example with Groovy, and they help us: they add class-visible annotations that mark bytecode that has been generated, so we can easily exclude this bytecode from the reports.
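Java's own compiler produces synthetic members too, which gives a quick way to see the effect. With `javac`, a lambda body is typically compiled into a private method marked synthetic whose name starts with `lambda$`; that naming is a javac implementation detail, not something the language specification guarantees. The sketch lists such methods through reflection. A coverage report that did not filter them would show code the programmer never wrote.

```java
import java.lang.reflect.Method;
import java.util.ArrayList;
import java.util.List;
import java.util.function.IntUnaryOperator;

final class SyntheticDemo {

    // javac compiles this lambda body into a separate, compiler-generated method.
    static final IntUnaryOperator TWICE = x -> 2 * x;

    /** Names of methods in the class that the compiler, not the programmer, created. */
    static List<String> syntheticMethods(Class<?> type) {
        List<String> names = new ArrayList<>();
        for (Method m : type.getDeclaredMethods()) {
            if (m.isSynthetic()) {
                names.add(m.getName());
            }
        }
        return names;
    }

    public static void main(String[] args) {
        System.out.println(syntheticMethods(SyntheticDemo.class)); // with javac, e.g. [lambda$static$0]
    }
}
```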

Diomidis Spinellis 00:29:39 So then you need some adaptation layer for each language?

Marc Hoffmann 00:29:44 At the reporting level, right. Unfortunately, there's no standard specification for that, and that is a problem. There is an annotation in the JDK that marks generated code, but unfortunately this @Generated annotation has source retention: once the code is compiled to a class file, the annotation is not visible anymore, because it is not included in the class file. So we cannot use the standard annotation. It would be nice to use it, but the languages created their own annotations, and what we basically do is add filters for these annotations to JaCoCo once a language like Groovy has agreed on one. We have this, for example, for Lombok, and we have it for Groovy. But we're still waiting for such an annotation, such a hint, for the Kotlin language. That would be really nice, and Kotlin users really expect code coverage tools to work on Kotlin as well.
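The retention problem can be demonstrated with two hypothetical annotations. A `SOURCE`-retention annotation, like the JDK's `@Generated`, is discarded by the compiler, so nothing that inspects compiled classes can see it. JaCoCo reads class files directly, where `CLASS` retention would already be enough; this reflection-based sketch needs `RUNTIME` retention to see the marker, and shows a filter that drops marked methods from a report. All names are hypothetical.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Method;
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

/** Discarded by the compiler, like the JDK's @Generated. */
@Retention(RetentionPolicy.SOURCE)
@Target(ElementType.METHOD)
@interface SourceOnlyGenerated {}

/** Kept in the class file and visible at run time. */
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.METHOD)
@interface KeptGenerated {}

final class Sample {
    void handWritten() {}

    @SourceOnlyGenerated
    void generatedButInvisible() {} // the marker is gone after compilation

    @KeptGenerated
    void generatedAndMarked() {}
}

final class GeneratedFilter {

    /** Method names a report would keep after filtering out marked and synthetic code. */
    static List<String> reportable(Class<?> type) {
        List<String> names = new ArrayList<>();
        for (Method m : type.getDeclaredMethods()) {
            if (!m.isSynthetic() && !m.isAnnotationPresent(KeptGenerated.class)) {
                names.add(m.getName());
            }
        }
        Collections.sort(names); // getDeclaredMethods() has no guaranteed order
        return names;
    }
}
```

`GeneratedFilter.reportable(Sample.class)` keeps `generatedButInvisible` and `handWritten`: the source-retention marker cannot be seen, so that generated method would still show up in the report.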

Diomidis Spinellis 00:30:50 It seems there's a great benefit in working with bytecode, because you can cover more languages. Are there any advantages to working with source code?

Marc Hoffmann 00:30:58 With source code? The granularity, when you map bytecode back to source code, is just the line level. We know whether a particular line has been executed or not, and we know the bytecode instructions of that line, but we don't know about the internals of the line. Think about a Boolean expression with several separate parts on a single line. We can hardly infer which parts of the expression have been executed, because by default the Java compiler only emits debug information at line granularity. If you work with source code instrumentation, you can of course instrument the line with multiple probes, for example to know which parts of the line have been executed. So this is one of the benefits of source instrumentation. On the other hand, the drawback is that integration into your tool stack is way harder, because you have to step in before you actually compile your source code: you have to create an instrumented copy of your source code and then compile it, so you get artifacts for the purpose of code coverage only. With the JaCoCo approach, especially with on-the-fly instrumentation, you can add code coverage very late in your build chain. You can even attach an agent to an existing production system and measure code coverage on production systems, which...
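Source-level instrumentation can place a probe on each operand of a condition, which is what gives it finer-than-line granularity. Below is a hand-written sketch of what such a tool might generate; the `probe` helper and the flag array are hypothetical. Both calls execute the instrumented line, so line coverage treats them alike, but only the second one evaluates the right-hand operand of the short-circuit `&&`.

```java
import java.util.Arrays;

final class ConditionProbes {

    /** One flag per operand of the condition on the instrumented line. */
    static final boolean[] OPERAND_HIT = new boolean[2];

    /** Records that operand i was evaluated, then passes its value through unchanged. */
    static boolean probe(int i, boolean value) {
        OPERAND_HIT[i] = true;
        return value;
    }

    static boolean canShip(boolean inStock, boolean paid) {
        // Original line: return inStock && paid;
        return probe(0, inStock) && probe(1, paid);
    }

    static void reset() {
        Arrays.fill(OPERAND_HIT, false);
    }

    public static void main(String[] args) {
        canShip(false, true);
        System.out.println(Arrays.toString(OPERAND_HIT)); // [true, false]: "paid" never evaluated
        reset();
        canShip(true, true);
        System.out.println(Arrays.toString(OPERAND_HIT)); // [true, true]
    }
}
```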

Diomidis Spinellis 00:32:32 People do. As a Java champion, did you ever ask for specific changes in JDK development to improve code coverage analysis, or has everything you wanted always been there?

Marc Hoffmann 00:32:45 No. So actually, the JDK comes with its own internally used code coverage tool, which is JCov. And there are some interesting experiments they're doing. For example, they have an option in the compiler to create additional annotations with source code mapping within the line. So this is something we could make use of in JaCoCo in the future, if we get this as a default for the Java compiler.

Marc Hoffmann 00:33:12 Well, we actually do work very closely with the OpenJDK project, especially if they break some of our functionality, which sometimes happens. We have a nice integration test suite, which means we compile different pieces of source code, run a unit test on it, and see whether we get the expected coverage. This test suite, which is quite big by now, has revealed quite a few bugs in new JDK releases. We've worked very closely with the OpenJDK project to report these issues. They take our reports very seriously, and we get very quick turnaround and feedback on these issues. I think by now we are one of the top external bug reporters to the project.

Diomidis Spinellis 00:34:03 Let me get this straight. What is the process through which you uncover those bugs?

Marc Hoffmann 00:34:07 It's an integration test suite, which involves two parts of the JDK: the compiler and, of course, the Java runtime. From our perspective, we want to see whether the code coverage tool, JaCoCo, actually produces the expected results, but implicitly we're testing the compiler and the Java runtime. For example, we uncovered errors in the line mapping tables, the mapping from the bytecode back to the source code. Sometimes, when they do optimizations in the compiler, this mapping is broken, and we immediately uncover this with our test suite, because the result is not what we defined as expected in our test setup. So we quickly identify such problems, for example, in the Java compiler. The other thing is that we have a lot of tests for, I would say, corner cases of the Java specification and of the Java bytecode specification. There we sometimes see crashes of the Java runtime with certain corner cases in the bytecode structure. This is also what we constantly report.
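The core idea of such a validation test can be sketched as comparing the expected per-line coverage, declared by the test author, with what the tool reports. This is a minimal sketch with hypothetical names (`LineStatus`, `CoverageValidator.mismatches`), and JaCoCo's real validation suite is organized differently. Here, the "actual" map is hard-coded to simulate a broken line-number table, whereas a real test would obtain it from the coverage engine after running the compiled snippet.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

final class CoverageValidator {
    // Returns one message per line whose reported status differs from the
    // expected one, including expected lines missing from the report (null).
    static List<String> mismatches(Map<Integer, LineStatus> expected,
                                   Map<Integer, LineStatus> actual) {
        List<String> problems = new ArrayList<>();
        // TreeMap yields a deterministic, line-ordered report.
        for (Map.Entry<Integer, LineStatus> e : new TreeMap<>(expected).entrySet()) {
            LineStatus got = actual.get(e.getKey());
            if (got != e.getValue()) {
                problems.add("line " + e.getKey() + ": expected " + e.getValue() + " but got " + got);
            }
        }
        return problems;
    }

    public static void main(String[] args) {
        Map<Integer, LineStatus> expected = new TreeMap<>();
        expected.put(10, LineStatus.FULLY_COVERED);
        expected.put(11, LineStatus.NOT_COVERED);

        // Simulated broken line-number table: executed code attributed to line 11.
        Map<Integer, LineStatus> actual = new TreeMap<>();
        actual.put(11, LineStatus.FULLY_COVERED);

        mismatches(expected, actual).forEach(System.out::println);
    }
}

// Hypothetical per-line coverage verdicts.
enum LineStatus { FULLY_COVERED, PARTLY_COVERED, NOT_COVERED }
```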

Diomidis Spinellis 00:35:25 Amazing. Good. When developers see the report, in what form is the report produced? Is it integrated with the IDE? Does the IDE highlight code sections that are not covered? Is this a typical integration for the IDE?

Marc Hoffmann 00:35:38 I can speak here for the Eclipse integration. Here you get a tree view with an overview. You can drill down from project to package level down to class level, but you also get highlighting directly in the editor. So you see colored highlighting of the covered or missed lines within your text editor. It's not a separate view; it's the same text editor where you can just keep editing. In the same editor you see annotations for branches, and you get tooltips about the branch coverage status. You can also integrate it into your standard views, like your Project Explorer or whatever, and you get annotations there with the coverage. You can configure within your IDE wherever you want to see the code coverage. You can open property dialogs on every Java element and see an overview of the code coverage of that element. So Eclipse offers a nice set of extension points to hook into the IDE and present information right there, right where the user expects it.

Diomidis Spinellis 00:36:37 Yeah. I presume you want to see the whole project or a class or a specific method and then drill down to code, right?

Marc Hoffmann 00:36:45 Yeah. This is exactly how it works in the IDE. For the tools, for a report, it works very similarly. For example, in the HTML report you get a tree of your project structure, down to packages, down to classes, down to methods. This is a tabular view, and then you can jump right into the source code and see the highlighted source code. It pretty much looks like it does within the IDE. Of course you cannot edit it; it's a read-only report, right?

Diomidis Spinellis 00:37:14 And when you go to a continuous integration pipeline, is there a way to turn red when something happens, when the percentage declines or the coverage is less than a certain level? How do people usually integrate test coverage with their continuous integration?

Marc Hoffmann 00:37:31 We provide tools for that in the Maven and the Ant integration. We have check goals where users can specify certain metrics, for example, a given percentage of line coverage, or maybe an absolute number. So I can say there must be no more than five classes that are not covered at all. You can specify a lot of limits, which are then tested during the build, and you can actually fail the build if these minimum numbers are not reached.
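The logic of such a build check can be sketched in a few lines. This is not the code or configuration format of the jacoco-maven-plugin's `check` goal. The class, fields, and parameter names below are hypothetical, chosen to mirror the two example rules Marc mentions: a minimum line-coverage ratio and a maximum number of completely uncovered classes.

```java
import java.util.ArrayList;
import java.util.List;

final class CoverageCheck {
    // Hypothetical aggregated counters for one module.
    static final class Counters {
        final int coveredLines, missedLines, uncoveredClasses;

        Counters(int coveredLines, int missedLines, int uncoveredClasses) {
            this.coveredLines = coveredLines;
            this.missedLines = missedLines;
            this.uncoveredClasses = uncoveredClasses;
        }

        // Treats a module with no coverable lines as 0% covered (a design choice).
        double lineCoveredRatio() {
            int total = coveredLines + missedLines;
            return total == 0 ? 0.0 : (double) coveredLines / total;
        }
    }

    // Returns the violated limits; an empty list means the build may pass.
    static List<String> violations(Counters c, double minLineRatio, int maxUncoveredClasses) {
        List<String> v = new ArrayList<>();
        if (c.lineCoveredRatio() < minLineRatio) {
            v.add("line coverage " + c.lineCoveredRatio() + " is below minimum " + minLineRatio);
        }
        if (c.uncoveredClasses > maxUncoveredClasses) {
            v.add("uncovered classes " + c.uncoveredClasses + " exceed maximum " + maxUncoveredClasses);
        }
        return v;
    }

    public static void main(String[] args) {
        Counters c = new Counters(450, 550, 7); // 45% line coverage, 7 untouched classes
        List<String> v = violations(c, 0.50, 5);
        v.forEach(System.out::println);
        if (!v.isEmpty()) {
            System.exit(1); // a non-zero exit status is how a build step signals failure
        }
    }
}
```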

Diomidis Spinellis 00:37:59 And you mentioned these can be per class or in terms of line percentages. Which are the most useful, or which ones do you typically use in your projects?

Marc Hoffmann 00:38:09 Actually, I do not recommend using these checks at all, to be honest. What you can do is use them as a sanity check. So let's say in the project we have 80% or 90% code coverage. Then I would set a check of, let's say, 50 or 60%, well below the current coverage. So we have a sanity check to make sure that the tests are actually executed and that code coverage has been set up properly, because sometimes this can break. You get a green build, but just because your tests have not been executed at all. This way you have some confidence that most of your tests have actually been executed. But if you really want to monitor code coverage, I don't recommend setting limits just below the current status. For this, I really recommend using a tool like SonarQube to keep track of your coverage over time.

Diomidis Spinellis 00:39:07 How is that different from integrating it into the continuous integration pipeline?

Marc Hoffmann 00:39:12 You can integrate it. For example, the Jenkins plugin for JaCoCo also gives you a kind of timeline, so you can actually use it as part of the build itself. But for the Maven build, for example, I wouldn't try to always update the check and adjust the limit to the latest status of your project, so that whenever you gain, let's say, 1% more code coverage, you adjust the limit again. This does not really add value to your project.

Diomidis Spinellis 00:39:43 Whereas with SonarQube you see your overall trends, and you may spot things that are going in the right or the wrong direction.

Marc Hoffmann 00:39:52 And you can relate it to other metrics. For example, you get code coverage on new code. SonarQube consumes a lot of inputs, for example, also the change history of your code. So SonarQube knows the coverage of new code in a given timeframe. If you have a huge legacy code base, maybe you don't care about the overall code coverage, because the project is just too big. But the teams are working on some small areas for new features, and you want to monitor the new code. This is something that continuous inspection tools give you.
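"Coverage on new code" boils down to restricting the coverage ratio to lines changed within the period of interest. This is a minimal sketch, assuming you already have the set of changed line numbers (for example, derived from version-control history) and per-line coverage data. The names are hypothetical, and this is not SonarQube's implementation.

```java
import java.util.Map;
import java.util.Set;

final class NewCodeCoverage {
    // lineCovered maps each *coverable* line to whether tests executed it;
    // lines absent from the map (comments, blank lines) are not coverable.
    // Returns covered/coverable among the changed lines, or NaN if none are coverable.
    static double ratio(Map<Integer, Boolean> lineCovered, Set<Integer> changedLines) {
        int coverable = 0, covered = 0;
        for (int line : changedLines) {
            Boolean hit = lineCovered.get(line);
            if (hit == null) continue; // not an executable line
            coverable++;
            if (hit) covered++;
        }
        return coverable == 0 ? Double.NaN : (double) covered / coverable;
    }

    public static void main(String[] args) {
        // Legacy lines 1-3 are uncovered; new lines 10-13 are mostly covered.
        Map<Integer, Boolean> cov = Map.of(1, false, 2, false, 3, false,
                                           10, true, 11, true, 12, true, 13, false);
        Set<Integer> changed = Set.of(10, 11, 12, 13, 14); // line 14: say, a comment
        System.out.println(ratio(cov, changed)); // 0.75
    }
}
```

With this sample data, the ratio over all seven coverable lines would be 3/7 (about 0.43), while the changed lines alone score 0.75. This is exactly the distinction that matters in a large legacy code base.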

Diomidis Spinellis 00:40:28 Can you elaborate a bit more on continuous inspection tools and how they work with code coverage?

Marc Hoffmann 00:40:34 Continuous inspection monitors several aspects of your code base. For example, there are rules on whether the coding guidelines are followed, and there are a lot of rules about typical coding errors. With static analysis, you can recognize, for example, that a resource, let's say an input stream, has been created but not closed. These are all issues that can be identified by such continuous inspection tools. They give you an overall view of the quality of your project, and code coverage is just one aspect of the overall quality of your product.

Diomidis Spinellis 00:41:12 You mentioned that JaCoCo itself is using JaCoCo to measure its coverage.

Marc Hoffmann 00:41:18 From the very beginning. I created JaCoCo as a prototype at night, and from the beginning I was thinking about releasing it as an open source project. But my personal milestone was: as soon as I can create coverage for JaCoCo itself, the tool can be released. And this was actually the case from the very first release, which was 0.1.0, somewhere back in 2009.

Diomidis Spinellis 00:41:45 And at what level was the coverage? What is it now?

Marc Hoffmann 00:41:49 It's somewhere around 95 or 98%. JaCoCo was fully test-driven. The number of use cases and the complexity of the integrations are simply at a scale where, for a small team, it would be completely impossible to do manual testing on this tool. So we heavily rely on the unit tests and integration tests we have, and whatever we do, let's say bug fixes, let's say new features, is always test-first. We always think about the test strategy and how to prove that the functionality actually works. This is the only way we add features. And this is very tough for our contributors, because sometimes we get contributions without any tests, and we cannot accept such contributions. It would simply add too much technical debt to our project if we had features that we cannot prove.

Diomidis Spinellis 00:42:46 These are very wise words. Do you happen to know the coverage of OpenJDK? Is it measured? Would it make sense to measure it?

Marc Hoffmann 00:42:56 As far as I know, they do. They have their own tool, called JCov, and they keep an eye on code coverage, as far as I know. But I have never used the tool. I think it's pretty tough to use it outside the OpenJDK project, so I cannot talk about the tool or how it's actually used by the OpenJDK project. I'm not very familiar with their build pipeline and their continuous integration pipeline. OpenJDK is not as open as the name might suggest.

Diomidis Spinellis 00:43:25 Yes, often the build structure, how the project internally builds and what it requires, can be a difficult part for a project that wants to accept contributions and be truly open. Do you happen to know figures from other projects? Coverage figures, so that our listeners can get a feeling for what they should aim at?

Marc Hoffmann 00:43:45 So what I typically see is that projects that really do test-driven development always end up beyond 80 or 90% test coverage, if they have the right process: think about testing first, create a test first, and then actually write the code.

Diomidis Spinellis 00:44:00 And did you happen to run into interesting bugs that you uncovered by increasing test coverage, something that has particularly stayed in your mind?

Marc Hoffmann 00:44:08 Not right now. As I said before, if you want to increase the test coverage after the fact, you lose a lot of benefits, like creating correct code from the beginning or creating proper APIs. These are all benefits if you practice test-first. So adding code coverage to a project after the fact is always tough, especially if the structure of the project does not allow it. Maybe the project does not actually have real components; it's just one huge block of interconnected components, and you cannot isolate single parts to test those parts. So testing, or adding coverage after the fact, is something I really don't recommend. It's really tough, and it typically goes along with refactoring the original code base so that you can actually test the components.
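As an illustration of the kind of refactoring meant here, consider a hypothetical class that reads the current time directly. This makes its behavior hard to test in isolation. Injecting the dependency (here the JDK's own `java.time.Clock`, a standard dependency-injection move, not anything specific to JaCoCo) lets a test exercise each branch deterministically. The classes and greetings are invented for the example.

```java
import java.time.Clock;
import java.time.Instant;
import java.time.LocalTime;
import java.time.ZoneOffset;

class GreeterDemo {
    // Test helper: a Greeter whose clock is frozen at the given UTC instant.
    static Greeter at(String isoInstant) {
        return new Greeter(Clock.fixed(Instant.parse(isoInstant), ZoneOffset.UTC));
    }

    public static void main(String[] args) {
        System.out.println(at("2020-01-01T09:00:00Z").greeting()); // Good morning
        System.out.println(at("2020-01-01T15:00:00Z").greeting()); // Good afternoon
    }
}

// Before: hard-wired dependency on the system clock, so a test cannot choose a branch.
final class GreeterBefore {
    String greeting() {
        return LocalTime.now().getHour() < 12 ? "Good morning" : "Good afternoon";
    }
}

// After: the clock is injected, so each branch can be covered by a deterministic test.
final class Greeter {
    private final Clock clock;

    Greeter(Clock clock) { this.clock = clock; }

    String greeting() {
        return LocalTime.now(clock).getHour() < 12 ? "Good morning" : "Good afternoon";
    }
}
```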

Diomidis Spinellis 00:45:03 And of course the project will not have testable components, because it was not implemented in a way that would work together with unit tests. So, right, the two things go together. Are there any drawbacks associated with tracking code coverage? You mentioned gaming the metrics. Are there other things people should look out for?

Marc Hoffmann 00:45:21 No, it's basically about setting up wrong expectations and just looking at code coverage metrics. Something else I've seen, which I consider very dangerous because you lose all the benefits of test-first, is that unit tests are created by separate teams. You have, say, an offshore team, and the offshore team is just responsible for adding unit tests to some code base. This is really not the way to do it. This will not add any quality to your original code.

Diomidis Spinellis 00:45:52 It sounds really misguided, but I can really imagine companies doing it.

Marc Hoffmann 00:45:58 Yes, this is a matter of fact. I know projects that work exactly like this, and I would really not do it this way. Not at all.

Diomidis Spinellis 00:46:05 Dilbert comes out of real life. It’s not some imaginary thing.

Marc Hoffmann 00:46:10 Okay.

Diomidis Spinellis 00:46:11 So what should I tell my managers to persuade them to adopt test coverage analysis?

Marc Hoffmann 00:46:16 Don't tell them at all. Don't tell, just do it. Just do it for your team: have a working agreement within your team that you do test-first, but don't make it an official metric. This is what I always tell people in my talks: don't tell your managers about code coverage, just do it and keep an eye on it.

Diomidis Spinellis 00:46:33 They would try to get in there. They would make it a metric, a real metric, and then it would lose value because people would manipulate it.

Marc Hoffmann 00:46:40 Absolutely. This is what will happen. If it's a management metric, then it's an easy metric. It's just a number and it's easy for management to understand, but it will not add value if there is no proper process behind it.

Diomidis Spinellis 00:46:54 Marc, is there anything else I should have asked you but didn't, something you would like to share with our listeners?

Marc Hoffmann 00:47:00 Maybe new Java versions and the challenges with the new JDKs.

Diomidis Spinellis 00:47:04 So what are the challenges of new Java versions?

Marc Hoffmann 00:47:07 The challenge we have with the latest Java versions, or maybe even starting with Java 7 or Java 8, is that we do code coverage on Java bytecode. In the early Java versions, let's say Java 5 or Java 6, there was a one-to-one relationship between source code and bytecode, so we could easily map the bytecode back to source code. With new versions, we see new syntactic sugar, something like lambdas or try-with-resources, and for these the mapping is not as simple anymore, because the Java project decided to put more functionality into the compiler. So things like try-with-resources end up as a complex nested structure of try/finally blocks in the class file. The issue we have with the code coverage tool is to recognize these structures in the class files and map them back to the source code at reporting time. And this is getting more and more tricky with the Java language and its new features.
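To show why, the sketch below writes out roughly what a single try-with-resources statement expands to. It follows the "basic try-with-resources" translation given in the Java Language Specification (§14.20.3.1). Actual javac output has changed between JDK versions, so treat the hand-written expansion as an approximation of the compiled structure, not exact bytecode. `TrackedResource` is a hypothetical class that only records the order of calls.

```java
import java.util.ArrayList;
import java.util.List;

class TryWithResourcesDemo {
    // What the developer writes: one line of "syntactic sugar".
    static List<String> sugared() {
        List<String> log = new ArrayList<>();
        try (TrackedResource r = new TrackedResource(log)) {
            r.use();
        }
        return log;
    }

    // Hand-written expansion modeled on the JLS translation: nested try/catch/finally
    // with null checks and suppressed-exception handling, all attributed to that one line.
    static List<String> desugared() {
        List<String> log = new ArrayList<>();
        final TrackedResource r = new TrackedResource(log);
        Throwable primaryExc = null;
        try {
            r.use();
        } catch (Throwable t) {
            primaryExc = t;
            throw t; // precise rethrow: only unchecked exceptions can escape here
        } finally {
            if (r != null) {
                if (primaryExc != null) {
                    try {
                        r.close();
                    } catch (Throwable suppressed) {
                        primaryExc.addSuppressed(suppressed);
                    }
                } else {
                    r.close();
                }
            }
        }
        return log;
    }

    public static void main(String[] args) {
        System.out.println(sugared());   // [open, use, close]
        System.out.println(desugared()); // [open, use, close]
    }
}

// Hypothetical resource whose close() throws no checked exception.
final class TrackedResource implements AutoCloseable {
    private final List<String> log;

    TrackedResource(List<String> log) { this.log = log; log.add("open"); }

    void use() { log.add("use"); }

    @Override
    public void close() { log.add("close"); }
}
```

Several of the branches in the expansion (the `null` check, the suppressed-exception path) have no counterpart in the source the developer wrote. A bytecode-level coverage tool must recognize such compiler-generated structures if it is not to report them as missed code.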

Diomidis Spinellis 00:48:15 Since you work at the bytecode level, is just-in-time compilation creating any additional problems?

Marc Hoffmann 00:48:23 No. We are on the bytecode level, and just-in-time compilation goes down to machine code, so just-in-time compilation kicks in after we have instrumented the bytecode. That is as far as the structure is concerned. But if you have a library that does heavy number crunching, we can see some performance regressions, because the additional probes make the method grow and, for example, inlining can be prevented. A simple setter or getter is typically inlined by the just-in-time compiler, but after instrumentation with JaCoCo the method can grow too big at the bytecode level to be inlined. So this is the only point where we interfere with the just-in-time compiler: due to the increased size of the methods, we see some performance drops, especially at hotspots where there's a lot of execution in small and tiny methods.
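Here is a source-level approximation of what happens to a trivial getter. After instrumentation, it must fetch the class's probe array and set a flag before doing its real work, which adds bytecode to the method. The shape is loosely modeled, as an assumption, on a lazily initialized per-class probe array. The names `probes` and `probesInit` are hypothetical. Real instrumentation happens in bytecode, not source.

```java
final class InlineDemo {
    private int value = 42;

    // Original getter: a handful of bytecode instructions, a typical JIT inlining candidate.
    int getValue() {
        return value;
    }

    // Hypothetical per-class probe array, lazily initialized on first use.
    private static boolean[] probes;

    private static boolean[] probesInit() {
        if (probes == null) {
            probes = new boolean[1];
        }
        return probes;
    }

    // Hand-written approximation of the same getter after probe insertion:
    // an extra call, a local variable, and an array store. Same result, more bytecode.
    int getValueInstrumented() {
        boolean[] p = probesInit();
        p[0] = true; // records that the method ran to its end
        return value;
    }

    static boolean probeHit() {
        return probes != null && probes[0];
    }

    public static void main(String[] args) {
        InlineDemo d = new InlineDemo();
        System.out.println(d.getValue() == d.getValueInstrumented()); // true
        System.out.println(probeHit());                                // true
    }
}
```

Whether the larger method still gets inlined depends on the JVM's heuristics. HotSpot, for instance, has size limits such as `-XX:MaxInlineSize`. One way to observe its decisions is the diagnostic flag pair `-XX:+UnlockDiagnosticVMOptions -XX:+PrintInlining`.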

Diomidis Spinellis 00:49:19 Marc, thank you very much for being with us. Where can listeners find out more about the topics we discussed?

Marc Hoffmann 00:49:25 Of course, on the jacoco.org website. There's complete documentation. This is one thing we try to do: it's not just test-first, we also do documentation-first. Whatever features we add, the documentation is updated, and we try to provide a consistent view of the tool from both the documentation and the functionality point of view. On the website you will also find a long list of conference talks and presentations we have given, especially by Evgeny Mandrikov, who is a main contributor to the project. There are a lot of fun and interesting presentations about how to use it, and also about implementation details, so you can learn how it is implemented and what the tricky parts are with new JDK versions, for example.

Diomidis Spinellis 00:50:13 Excellent. Thank you again.

Marc Hoffmann Thank you. Thank you for having me here today.

Diomidis Spinellis This is Diomidis Spinellis for Software Engineering Radio. Thank you for listening.

[End of Audio]

This transcript was automatically generated. To suggest improvements in the text, please contact [email protected].