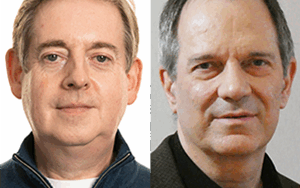

Maxim Fateev, the CEO of Temporal, speaks with SE Radio’s Philip Winston about how Temporal implements durable execution. They explore concepts including workflows, activities, timers, event histories, signals, and queries. Maxim also compares deployment using self-hosted clusters or the Temporal Cloud.

Show Notes

Related Episodes

- SE Radio 502 – Omer Katz on Distributed Task Queues Using Celery

- SE Radio 351 – Bernd Rücker on Orchestrating Microservices with Workflow Management

- SE Radio 198 – Wil van der Aalst on Workflow Management Systems

Related IEEE Computer Society Articles

Related Links

- Temporal

- Previous Systems

Transcript

Transcript brought to you by IEEE Software magazine and IEEE Computer Society. This transcript was automatically generated. To suggest improvements in the text, please contact [email protected] and include the episode number.

Philip Winston 00:00:18 Welcome to Software Engineering Radio. This is Philip Winston. I’m here today with Maxim Fateev. Maxim is the co-founder and CEO of Temporal. He has worked in lead roles at Amazon, Microsoft, Google, and Uber. He led the development of AWS Simple Workflow Service at Amazon and the open-source Cadence workflow at Uber. Maxim holds degrees in computer science and physics. We’re going to talk today about automating business processes with distributed systems, but let’s start with a term not everyone might be familiar with: durable execution. Maxim, what is durable execution and why is it a useful abstraction?

Maxim Fateev 00:00:58 Durable execution is a new abstraction, which we kind of introduced, and it’s not very well known, but I think every engineer absolutely should have it in their back pocket of tools. The idea is pretty straightforward. Imagine a runtime which keeps the full state of your code execution durable all the time. It means that if a process crashes or any other infrastructure outage happens, like a network event, power outage and so on, your code will keep running after the problem is resolved. If the process crashes, the execution will just be migrated to a separate process. And if the whole region is down, as soon as it comes back, your code will continue executing. And I’m not saying just the process, but actually the full state. So for example, if you have a function which executes, let’s say, three API calls A, B, C, and it’s blocked on B and then this process crashes, this execution will migrate to another machine and it’ll still be blocked on B. The result of B can come two days later and then it’ll continue executing. So all local variables and the whole state, including blocking calls, are fully preserved all the time.

Philip Winston 00:02:00 Great. We’re definitely going to get into how that works. But before we do that, we’re going to do a little bit of history and how we got here. But let’s just describe Temporal as it exists today.

Maxim Fateev 00:02:10 So Temporal is an open-source project. It’s MIT licensed, so you can absolutely run it yourself, including both the SDK libraries and the backend server, which keeps the state. We started this project at Uber. It was initially called Cadence, and then four years ago we started a company, we forked the project and we called it Temporal. It’s been around for seven years in production at high scale and is used by thousands of companies around the world for mission-critical applications.

Philip Winston 00:02:36 Let’s go back even further. Before Cadence, you worked on the Simple Workflow Service at Amazon. Can you tell me a little bit about how that project started and what were the goals of the project?

Maxim Fateev 00:02:48 So I probably want to start even earlier. I joined Amazon for the first time in 2002, and back then Amazon was actively moving from a monolithic architecture to a microservice architecture. It wasn’t called microservices back then. It was called just services. Later, it was called SOA. But the basic idea was the same: the monolith was unsustainable, and just relinking that binary was taking 45 minutes on a high-end desktop. So it wasn’t practical to do any development, and Amazon had to do that. And we found that practically we used the standard approach, even back then, of using queues to connect our multiple services together and practically execute all these backend business processes. I was tech lead for the pub/sub group. I was leading the group which owned all backend pub/sub of Amazon. I worked on the distributed replicated storage for queues, and I think it’s still used by Simple Queue Service as its backend.

Maxim Fateev 00:03:38 And so I was in the middle of a lot of conversations about design choices. When you build these large-scale systems using queues and event-driven architectures, it quickly becomes apparent that it’s not the right abstraction. Events and queues are not the right abstraction to build such systems. One very important point is that they’re very good runtime abstractions, right? Event-driven systems and queues give you awesome runtime characteristics because, for example, your service can be slow, but other services are not slowed down by that, because things can be buffered in queues. Or your service can even be down and the other services don’t even notice. They just see messages maybe not coming, or being buffered. So the runtime characteristics of these systems are awesome, but as a design choice, they actually create very brittle systems, because everything is connected to everything, there are no clear APIs, and it’s very hard to create the state machine in every service and support all the business logic.

Maxim Fateev 00:04:32 So our team recognized that and we started to think about an orchestrator. Amazon had an internal orchestrator based on the Petri nets idea, built on top of Oracle, and it was used to actually orchestrate orders, and we started to think about more scalable solutions. We had three different iterations, three versions of the service internally, and only the third version was public; it was released as AWS Simple Workflow Service. So it took us a lot of different attempts to get it right, and even with Simple Workflow I wouldn’t say we got it right, because it’s still not a very popular service, but we actually learned a lot doing that. And then what happened is that we left for other companies. The co-founder of Temporal, Samar Abbas, who worked with me on Simple Workflow, went to Microsoft, and at Microsoft he wrote the Durable Task Framework, which later was adopted by Azure Functions, and now Microsoft has Azure Durable Functions. And then we met at Uber, and we took all these ideas and created the next generation of the technology, which is what we have right now. You can think of Temporal as being the continuation of all these previous attempts over 10 years of iterations.

Philip Winston 00:05:40 So can you tell us the process of choosing to fork Cadence and create Temporal as opposed to say start over again? How did you make that decision?

Maxim Fateev 00:05:50 The reason you would start again is if you believe that the existing solution is not good enough, right? We knew Cadence was solid because we ran it in production for over three years, two at large scale, and there were over a hundred use cases using it for mission-critical applications. But we also knew that there were a lot of things we wanted to fix, because over three years we learned a lot, but we had a promise of full backwards compatibility and live upgrades, so we couldn’t break anything. So getting from point A to point B without breaking it would be extremely, extremely hard. It’s possible, but it would be hard. And when we forked the project, we practically had a one-time opportunity to fix a lot of these little issues. Just a basic one, for example: moving from Thrift to Protobuf, and from a custom, Uber-specific protocol to gRPC. Those are the main things, which give us a lot of benefits from a security point of view and so on. And there were a lot of other little things which we had to fix. We spent a year from when we forked the project until we released it and said it’s ready for production, because we put so many changes there.

Philip Winston 00:06:58 You mentioned the MIT license. What parts of Temporal are there to be licensed? I guess there’s the client SDKs and there’s the server or the web interface, like what are the different parts of Temporal?

Maxim Fateev 00:07:12 So Temporal has a backend cluster which has multiple roles. Obviously you can run all these roles in a single binary; there is a single-binary version which, for example, you use for local development, and which also includes the UI. But you can also take all these five roles and run them as separate pools of workers, pools of processes. The backend service also needs a database. In the open source we support MySQL, Postgres, Cassandra, and also SQLite as officially supported databases. So this cluster connects to the database and exposes a gRPC interface through its frontend role, and the whole cluster is MIT licensed. You can run it yourself. Then you write your applications, which are external to the cluster; they contain all the code and all the business logic, and the application just connects to the backend cluster through a gRPC connection. These applications don’t need to open any ports; they only make outgoing connections to the cluster. So yeah, all these components are MIT licensed. I think the Java SDK is Apache 2.0 licensed for historical reasons, because we initially forked the implementation of the Amazon framework which I built at Simple Workflow.

Philip Winston 00:08:16 We’re going to go into core Temporal concepts including workflows, activities, timers and other things. But first I just want to mention some previous episodes that are related to either workflow systems or task systems. Episode 502, Omer Katz on Distributed Task Queues Using Celery, Episode 351 Bernd Rücker on Orchestrating Microservices with Workflow Management and Episode 198 Wil van der Aalst on Workflow Management Systems. That last episode is 10 years old, but I was surprised that a lot of the concepts are still being discussed and still relevant today. So going into these core concepts, you don’t necessarily refer to Temporal as a workflow system, but workflow is one of the core concepts. So what can you tell me about workflows in the context of Temporal?

Maxim Fateev 00:09:10 I think it’s very important to understand that when I say workflow in the context of Temporal, I always mean durable execution, exactly the concept I described. You write code in a top-level programming language. You can write code in Java, Go, TypeScript, PHP, .NET, and this code is durable. So we preserve the full state, including, as I said, blocking calls and all variable state, all the time. And this is highly applicable to all sorts of scenarios, right? Every time you need durability, every time you need a guarantee of completion, every time you need a saga or need to run compensations, Temporal is a very good fit. The problem is that when you say workflow engine, people usually imagine exactly the solutions you mentioned, which are all YAML, XML, or JSON based, or where they draw pictures. And there is a reason these solutions never became really popular: engineers will not even consider them for the majority of use cases, because these systems were never designed to be developer friendly, right? To be developer first. There is always this promise that non-technical people will write workflows because they can draw pictures. The reality is it never works, right? Engineers still have to write these XML or JSON files or draw pictures, and this is not what engineers want. They want code. That’s why when I say workflow, I always mean durable execution, and I think it should be very, very clear, right? It is very different from when anyone else says workflow. They always mean some JSON file, XML file or something like that.

Philip Winston 00:10:32 My experience with AWS Step Functions and Google Cloud Workflows, where you do specify the workflow as JSON or YAML, is that JSON or YAML are maybe really good for configuration, but what happens in those systems is you’re essentially writing imperative code in a restricted language. And that to me is where things start to get a lot less comfortable. So tell me some of the technical challenges in implementing Temporal, which does support arbitrary programming languages, or at least a good handful of programming languages. I realize that each language has a different SDK to support it, but there must be some common technology that’s enabling this to happen.

Maxim Fateev 00:11:16 Yeah, so if you think about it, there are really only two approaches to preserve the state of the code all the time. One approach would be that you snapshot the memory of that code after every step of progress and store it somewhere, and then if you need to activate that execution on a different machine, you load that memory and continue executing. The problem is that you could probably do it with some runtimes. For example, you can do it with WebAssembly. But you cannot just take Java, for example, and serialize the full state of a Java execution, especially when it’s blocked on some call, if it’s synchronous code. The same thing with Go code, right? You cannot just take Go code blocked on some call. Technically you could, but you would need to hack the runtime pretty deeply.

Maxim Fateev 00:11:58 The same thing for TypeScript and so on. So the other approach would be to practically use what we call replay. You run the code from the beginning, and every time the code executes some external action, you intercept that, and if this action was already completed, you just give the result to the code. So let’s say, as I said, your code is blocked on function B and the process crashes. You’ll have what we call the event history of the workflow, which records that action A was requested and completed, and that action B was requested but not completed yet. Then, when the process recovers, you run this function from the start. It calls A; you verify that the call to A is in the history, then you give the result of A to the code, and the code goes from line A to line B. Then you validate that the call to B was made and is in the history, so we know that this code is blocked on B right now, and we need to wait for the result of B to be delivered to it.
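The replay idea described here can be sketched in a few lines of Python. This is a toy illustration under stated assumptions, not Temporal’s SDK or its actual history format: `ReplayContext`, `Blocked`, and the dictionary-based event records are all hypothetical names made up for the example.

```python
from typing import Any, Dict, List, Tuple


class Blocked(Exception):
    """Raised when the workflow reaches a call whose result is not recorded yet."""


class ReplayContext:
    """Hypothetical stand-in for a durable runtime: intercepts calls, consults history."""

    def __init__(self, history: List[Dict[str, Any]]) -> None:
        self.history = history  # recorded events, in order
        self.position = 0       # index of the next event to match

    def call(self, name: str) -> Any:
        if self.position < len(self.history):
            event = self.history[self.position]
            # On replay, the code must request the same action that was recorded.
            if event["name"] != name:
                raise RuntimeError(f"non-deterministic: expected {event['name']}, got {name}")
            self.position += 1
            if event["completed"]:
                return event["result"]  # hand back the recorded result, no re-execution
            raise Blocked(name)         # requested earlier, still waiting for the result
        # First time the code reaches this call: record the request and block.
        self.history.append({"name": name, "completed": False, "result": None})
        self.position += 1
        raise Blocked(name)


def workflow(ctx: ReplayContext) -> str:
    a = ctx.call("A")
    b = ctx.call("B")
    c = ctx.call("C")
    return f"{a}-{b}-{c}"


def run(history: List[Dict[str, Any]]) -> Tuple[str, str]:
    """Replay the workflow from the beginning against the given history."""
    try:
        return ("done", workflow(ReplayContext(history)))
    except Blocked as blocked:
        return ("blocked", str(blocked))


# History as it might look after a crash: A completed, B requested but not completed.
history = [
    {"name": "A", "completed": True, "result": "a1"},
    {"name": "B", "completed": False, "result": None},
]
state_after_crash = run(history)  # a fresh "process" replays and is blocked on B again

# Later the result of B arrives and is recorded in the history.
history[1].update(completed=True, result="b1")
state_after_b = run(history)      # replay passes A and B, then blocks on the new call C
```

Each call to `run` starts the function from the top, which is the point of the technique: the only thing that has to survive a crash is the history, not the process memory.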

Maxim Fateev 00:12:56 There are a lot of details there. For example, how do you make sure that the result of B is routed to the process which is currently waiting for it, because the previous process already crashed? So there is a whole routing layer there, and there are a lot of other things. And one big assumption is that the code of the function is deterministic. What does it mean? It means that the code, if you execute it again, will reach the same point. So for example, say your code says: if a random number is more than 50, take branch A and make API call A, and if it’s less than 50, make API call B. This code is not deterministic, because every time you run it from the beginning it can take a different branch. So if you need to use random, you practically need to use a deterministic random, which will be random the first time you call it and then will return the same value on replay. And Temporal provides special APIs for all these situations: for random, for time, for creating threads, because threads obviously are also not deterministic unless you use our own runtime.
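A "random the first time, same value on replay" source can be illustrated as follows. This is a minimal sketch assuming a recorded list of values stands in for the event history; `SideEffects` and `random_int` are hypothetical names for the example and are not Temporal APIs.

```python
import random
from typing import List


class SideEffects:
    """Toy recorder: the first execution records values, a replay returns them."""

    def __init__(self, recorded: List[int]) -> None:
        self.recorded = recorded
        self.position = 0

    def random_int(self, low: int, high: int) -> int:
        if self.position < len(self.recorded):
            value = self.recorded[self.position]  # replay: reuse the recorded value
        else:
            value = random.randint(low, high)     # first run: genuinely random
            self.recorded.append(value)
        self.position += 1
        return value


def choose_branch(effects: SideEffects) -> str:
    # Calling random.randint directly here could take a different branch on replay.
    return "call A" if effects.random_int(1, 100) > 50 else "call B"


recorded: List[int] = []
first_run = choose_branch(SideEffects(recorded))
replays = [choose_branch(SideEffects(recorded)) for _ in range(5)]
```

Whichever branch the first run takes, every replay against the same recorded values takes the same one.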

Philip Winston 00:13:57 How about a simple example? I have my email or messenger and I have a function where I can send a message delayed by any amount of time I specify. This workflow is launched after some endpoint is called, and the user has communicated how long the delay should be. When I’m looking at the workflow code, it looks linear: it starts out, there’s a sleep, say a timer, and then we actually post the message. What happens the first time through when it hits that timer? Does it exit, or what exactly happens that is going to allow it to be continued later?

Maxim Fateev 00:14:34 So what will happen is that workflow code runs in the application space, right? It doesn’t run within the Temporal cluster. And usually what you will do is create what we call worker processes: a workflow worker process which hosts the workflow code. You can think of this worker process as a queue consumer. It’s the same as when, for example, you consume messages from Kafka: you’ll have an always-running process which is a queue consumer. In the same way, a Temporal worker is always running, and it always listens on a specific queue inside of Temporal called a task queue. It’ll say, I’m listening on task queue foo, right? For example, my workflow foo is registered with that task queue. So when you start a workflow, Temporal will create the workflow state inside of Temporal itself and then put a task in that queue, and it’s all transactional, so we don’t have a race condition there.

Maxim Fateev 00:15:17 And then this worker will pick up this task, which contains the arguments of the workflow and information about it, and will start executing it. Then it’ll hit the sleep and it cannot make more progress. This sleep will be converted to a create-timer command, and this command will be sent back to the Temporal server. Temporal will create a durable timer inside of its infrastructure and store this timer in the database. And then if you sleep for, say, two weeks, two weeks later this timer will fire, it’ll create a new task, and a worker, possibly a different process, will pick up the task, recover the workflow state, unblock the timer and continue.
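The durable-timer step can be pictured with a small sketch. It assumes an in-memory SQLite table in place of the server’s database and a plain deque in place of a task queue; the table layout, the function names, and the `TimerFired` label are illustrative assumptions, not Temporal’s actual schema or API.

```python
import sqlite3
from collections import deque

# Assumed stand-ins: an in-memory table for the server's store, a deque for the task queue.
db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE timers (workflow_id TEXT, fire_at REAL)")
task_queue = deque()


def create_timer(workflow_id: str, now: float, delay_seconds: float) -> None:
    """Persist the timer so it outlives any worker process."""
    with db:
        db.execute("INSERT INTO timers VALUES (?, ?)", (workflow_id, now + delay_seconds))


def fire_due_timers(now: float) -> int:
    """Delete due timers and enqueue a task for each, inside one transaction."""
    with db:
        due = db.execute(
            "SELECT rowid, workflow_id FROM timers WHERE fire_at <= ?", (now,)
        ).fetchall()
        for rowid, workflow_id in due:
            db.execute("DELETE FROM timers WHERE rowid = ?", (rowid,))
            task_queue.append({"workflow_id": workflow_id, "event": "TimerFired"})
    return len(due)


TWO_WEEKS = 14 * 24 * 3600
create_timer("delayed-message-1", now=0, delay_seconds=TWO_WEEKS)
fired_early = fire_due_timers(now=3600)         # an hour later: nothing is due
fired_on_time = fire_due_timers(now=TWO_WEEKS)  # two weeks later: the timer fires
```

No worker has to stay blocked during the two weeks; whichever worker picks up the queued task would replay the workflow and continue past the sleep.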

Philip Winston 00:15:52 So the worker doesn’t exit but the task has finished when that sleep starts potentially.

Maxim Fateev 00:15:58 Exactly. So one more optimization is that we will cache that workflow state for some time inside of the worker because if sleep is short or just waiting for an activity completion there is a very high probability that this workflow will be needed again very soon. So we don’t want to recover it every time. So we will go and practically cache that workflow for some time on the worker. So fast running workflows are always cached so we only deliver new events to them. We don’t need to do recovery, but this is purely optimization.

Philip Winston 00:16:27 So talking about durations, what in your view or in Temporal’s view is sort of a short duration workflow and what’s long? I know in my experience often I’m having conversations about workflows and I’m thinking of just a very different scope or very different duration than the other person. So I’m just curious from Temporal’s point of view or from your experience with Temporal users, what’s the range of duration?

Maxim Fateev 00:16:50 So first, Temporal, or durable execution, doesn’t care about duration. It can be used in situations when the workflow is very, very short and in situations when it’s very, very long. It’s all about durability. Do you need a guarantee of completion? And especially compensations. For example, there are use cases where people use it for instant payments. So you have a very small time budget, right? To actually execute that operation. But why do you need something like Temporal? Because if some of your API calls fail, you need to run compensations, right? For example, you took money from one account, you’re trying to put it in another account, and the second account fails for some reason. You return an error to the user very fast. But you need to make sure that you return the money to the original account, and you need to guarantee that.

Maxim Fateev 00:17:32 So as we don’t have transactions across services these days, Temporal is the best way to practically do this replacement for transactions in a distributed architecture. This is a very common use case, and it works even if you have a very short duration and people waiting. At the same time, we have use cases where workflows are always running. We call them entity workflows. For example, you can have a workflow which represents a user, and that workflow will handle all the communication with the user. I can give you one practical example. Imagine you are an airline and you want to have a loyalty program. So you have points. You can absolutely have a workflow per customer running all the time, listening to events like, for example, flight-completed events, and every time the customer completes a flight, it gets the length of the flight and increases the number of points based on that.

Maxim Fateev 00:18:20 And points can be stored just as workflow variables because they are always durable. Then if you reach a certain number of points, you can run some actions, like activities, to notify other services that you are now a gold member. And if you want to have a timer inside of the workflow and once a month send an email saying, okay, this is your new number of points, and by the way, do you want this promotion? You can also implement all of that as a single workflow. But the point is this workflow is always running; there is no end until the customer wants to quit your system, which practically never happens for these types of scenarios.
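The shape of such an entity workflow can be sketched as a plain Python object that reacts to events. Everything here is an assumption for illustration: the class and method names, the threshold of 1000 points, and one point per mile. In a durable runtime the fields would be workflow variables that survive crashes, which this ordinary object does not do.

```python
from dataclasses import dataclass, field
from typing import List

GOLD_THRESHOLD = 1000  # assumed value, purely for illustration


@dataclass
class LoyaltyWorkflow:
    """One long-lived instance per customer; state lives in ordinary fields."""

    customer_id: str
    points: int = 0
    gold: bool = False
    notifications: List[str] = field(default_factory=list)

    def on_flight_completed(self, miles: int) -> None:
        # Event handler: award points (assumed one point per mile).
        self.points += miles
        if not self.gold and self.points >= GOLD_THRESHOLD:
            self.gold = True
            # In a real system this would be an activity calling another service.
            self.notifications.append(f"{self.customer_id} is now a gold member")

    def on_monthly_timer(self) -> None:
        # Fired by a recurring timer: send the points summary.
        self.notifications.append(f"{self.customer_id} has {self.points} points")


wf = LoyaltyWorkflow("customer-42")
wf.on_flight_completed(600)
wf.on_monthly_timer()
wf.on_flight_completed(500)
```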

Philip Winston 00:18:52 I did run across that idea of workflow as entities and I do want to get into that a little bit, but let’s go back to just the core concepts. We talked about workflows, let’s do activities next. So I mentioned before in my delayed email case I was going to use a sleep or a timer, but an activity is a more general concept. Can you tell me what makes an activity in Temporal? What are the properties that it should have or needs to have?

Maxim Fateev 00:19:18 So as I described, the approach Temporal takes to recover workflow state is through replay. So practically the main requirement is that the code is deterministic. Any external IO is not deterministic by definition, because it can succeed one time, it can fail another time, it can return a different result. That’s why Temporal intercepts those external calls and practically sets a rule: all external communication goes through activities. And an activity, in every language, is just a function. So it’s like a task, right? In the same way that a queue consumer has a task which processes that queue, in Temporal you write an activity, which is usually just a function, and this function is invoked every time you invoke the activity from the workflow. We don’t put any limitation on what can be in the code of that function.

Maxim Fateev 00:20:02 The nice thing about Temporal activities compared to queue tasks is that, first, Temporal activities can run as long as necessary. You can run them for a year if necessary. Obviously, for a long-running activity you want a heartbeat. You don’t want an activity which has a one-year timeout and waits one year until it times out. But it can heartbeat every minute, and as soon as your process crashes, it’ll be retried after one minute, for example. Also, you can add information to the heartbeat, so you can store some information about your progress in it. So for example, if your activity scans a huge data set, it can heartbeat its progress on every record, and then if the activity fails, it’ll be restarted. You can get the last heartbeat data, get your progress and continue from that point. So you can have activities like that. And also activities are cancelable.
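Resuming from heartbeat details can be illustrated with a short sketch. It assumes a `HeartbeatStore` object standing in for whatever the server remembers about the last heartbeat, and a `crash_at` parameter that simulates the worker dying; both are hypothetical and not part of any SDK.

```python
from typing import List, Optional


class SimulatedCrash(Exception):
    """Stands in for a worker process dying mid-activity."""


class HeartbeatStore:
    """Toy stand-in for the server side: remembers the last heartbeat details."""

    def __init__(self) -> None:
        self.last_details: Optional[int] = None

    def heartbeat(self, details: int) -> None:
        self.last_details = details


def scan_records(records: List[str], store: HeartbeatStore,
                 processed: List[str], crash_at: Optional[int] = None) -> None:
    # Resume right after the last record that was reported as done.
    start = 0 if store.last_details is None else store.last_details + 1
    for index in range(start, len(records)):
        if crash_at is not None and index == crash_at:
            raise SimulatedCrash(f"crashed before record {index}")
        processed.append(records[index])  # the actual work
        store.heartbeat(index)            # report progress after each record


records = list("abcdef")
store = HeartbeatStore()
processed: List[str] = []

try:
    scan_records(records, store, processed, crash_at=3)  # first attempt dies at index 3
except SimulatedCrash:
    pass

progress_after_crash = store.last_details  # last heartbeat before the crash
scan_records(records, store, processed)    # the retry resumes at index 3
```

If a crash landed between doing the work and sending the heartbeat, that one record would be processed again on retry, which is one reason the per-record work is usually made idempotent.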

Maxim Fateev 00:20:47 So if an activity heartbeats, it can be canceled, and it can gracefully handle the cancellation. Also, we can route an activity to specific hosts. For example, if you need to run an activity on host B of your fleet because activity A downloaded some file there, you can route the activity to that host specifically. So we have a lot of features. And also, if an activity fails, it is retried with exponential backoff, and the retries can go on as long as you want. If you want to retry for a week or for a month, it’s absolutely supported. It’s very different from queues, where the duration of tasks and the number of retries are usually very limited.
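As a worked example of exponential retry, the delays between attempts can be computed as below. The parameter names and the values (1 second initial interval, coefficient 2, 100 second cap) are assumptions chosen for the illustration, not defaults quoted from the episode.

```python
from typing import List


def retry_delays(initial_interval: float, backoff_coefficient: float,
                 maximum_interval: float, attempts: int) -> List[float]:
    """Delay before each retry: grows geometrically, capped at maximum_interval."""
    delays = []
    delay = initial_interval
    for _ in range(attempts):
        delays.append(min(delay, maximum_interval))
        delay *= backoff_coefficient
    return delays


delays = retry_delays(initial_interval=1.0, backoff_coefficient=2.0,
                      maximum_interval=100.0, attempts=10)
# delays == [1.0, 2.0, 4.0, 8.0, 16.0, 32.0, 64.0, 100.0, 100.0, 100.0]
```

Once the cap is reached, each further retry waits the same maximum interval, so retrying for a week or a month means a steady, bounded rate of attempts rather than ever-longer gaps.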

Philip Winston 00:21:20 So activities could definitely be on a remote system, some kind of remote procedure call or web interface. But are there cases where even though it’s in the same process, you’d want to consider it an activity?

Maxim Fateev 00:21:34 So practically it’s a Temporal kind of limitation. I wouldn’t say limitation; it’s just a design choice that every external IO should live in an activity. We have a concept of a local activity, which runs in the same process as the workflow, as an optimization; it enables very low-latency scenarios that might require that. But again, if the code sends an email, this should be an activity, and then the workflow will call the send-email activity.

Philip Winston 00:22:00 I think we talked about the need for determinism. Another concept that always comes up with distributed systems is idempotency. I’m guessing that all your activities need to be idempotent, or are they only ever going to be called once?

Maxim Fateev 00:22:16 That is a very good point. So Temporal doesn’t retry activities blindly. If an activity fails or times out, there is an associated retry policy. If you set the maximum number of attempts in this policy to one, it will not be retried. What will happen in this case is that the workflow will get an error, and it’ll need to handle that, right? And it’s just the usual language abstractions. For example, if it’s Java, you would use try/catch, catch the activity-failed exception, and then you can handle it any way you want. For example, you can run compensations, which are different activities. So it’s a little bit more sophisticated than just, oh, we blindly retry. But in reality the most common approach to deal with this type of scenario is to make activities idempotent and just do blind retries; by default we retry them. But I think the important point is that Temporal is a fully consistent system, so it does blind retries only if you ask for them. But if you set max attempts to one, there is a possibility that your activity will never run, right? Because maybe the task is delivered, the timer is started, and then the process crashes.

Maxim Fateev 00:23:15 So in this case Temporal guarantees that after the timeout, the exception will be raised and delivered to the workflow. So it cannot lose that activity, but it can get into the situation where it’s never executed, if you have a max attempts of one, and then you will need to handle the error yourself. But if you make it idempotent, then you don’t think about it, right? You just say, okay, we retry forever until it’s done.

Philip Winston 00:23:36 So you mentioned saga pattern a while ago. Is that something that Temporal helps you implement? I guess first of all, what is the saga pattern? I think it relates to this idea of I have multiple activities, maybe I’ve successfully performed some of them but one of them fails and I want to undo the other ones. Or can you just kind of tell us how that works?

Maxim Fateev 00:24:00 I’m not sure how it’s exactly formulated, but let me describe how I see it. The idea is exactly that. You execute multiple API calls, and these API calls probably perform some storage changes. And you don’t have transactions across those calls. For example, the most obvious one: take money from one account, put money in another account. You took money from the first account and then you cannot put money in the second account because maybe your account ID is wrong. So what do you do in this case? As there is no transaction, the only thing you can technically do is run a compensation action, and the compensation action would be to put the money back in account A, right? And this scenario appears all the time. So you practically need to run compensations, and saga is just a pattern for the case where you run multiple activities.

Maxim Fateev 00:24:45 So you run like 10 of them, and eight of them succeeded, but the ninth failed. How do you go and run compensations for the previous eight? And I think an important point about Temporal compared to any other framework, especially all these existing frameworks based on JSON and YAML, is that because code is very dynamic, it makes implementing sagas trivial. Because think about what a saga is: a guarantee of compensation. Temporal guarantees that your function will keep running even if processes crash or other outages happen. So Temporal can easily guarantee that this process will keep running if something happens. And if your function has a try/catch block, and when you get an exception you run compensations in the catch block, that is a very easy guarantee for Temporal. And then you can write special classes. For example, Temporal has a Saga class in Java, and all it is is just a collection of callbacks, right?

Maxim Fateev 00:25:29 And then when you say compensate, we just go and execute these callbacks, and each of the callbacks can contain some compensation logic. The important part is that it’s dynamic. Imagine you have like 50 possible activities and you have complex branching logic, so that every time you execute some transaction you execute five of these activities, but which five activities you execute is different every time. With Temporal, you just pass this saga down. In every branch you accumulate which activities were executed in the list, and then when you compensate, you compensate those five activities. Try doing that with any static DAG or graph generated through YAML or XML. Because you don’t have dynamic compensations, you practically need to go and model every path and compensation path separately. It practically becomes unmanageable after a few possible branches. We found that this is a very common misunderstanding of how sagas should be implemented, and something which is trivial in Temporal is practically impossible to do in any kind of configuration-based language.

Philip Winston 00:26:23 So in that case it sounds like the per-language SDK is providing a pretty sophisticated little piece of technology to help you with this saga. Whereas in a workflow system based on a configuration language, there just isn’t that element unless the system were to provide it.

Maxim Fateev 00:26:41 The interesting thing is that the saga is not sophisticated, because the sophisticated part of the SDK is guaranteeing that your code will keep running, right? And that the full state is preserved. The saga itself, as I said, is a class which you can write in 20 minutes, because all it is is a list of compensations, and then you just say compensate: you go over the list and run the callbacks. I wrote this Saga class in, like, less than an hour. So anyone can write their own. You can write your own libraries, you can write your own frameworks, and Temporal as a runtime guarantees completion of the code and guarantees durability. One way to describe this is fault oblivious, right? Your code doesn’t even need to know that some fault happened because it recovered seamlessly.
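A saga helper of the kind described, "just a list of compensations", might look like the sketch below. It is not the Saga class from Temporal’s Java SDK: the `Saga` class, the in-memory `balances` dictionary, and the choice to run compensations in reverse order are all assumptions made for the example, and nothing here is durable on its own.

```python
from typing import Callable, Dict, List


class Saga:
    """A list of compensation callbacks, run when something fails."""

    def __init__(self) -> None:
        self._compensations: List[Callable[[], None]] = []

    def add_compensation(self, fn: Callable[[], None]) -> None:
        self._compensations.append(fn)

    def compensate(self) -> None:
        # Assumed policy: undo the most recent step first.
        while self._compensations:
            self._compensations.pop()()


balances: Dict[str, int] = {"A": 100, "B": 0}


def withdraw(account: str, amount: int) -> None:
    balances[account] -= amount


def deposit(account: str, amount: int) -> None:
    if account not in balances:
        raise ValueError(f"unknown account {account}")
    balances[account] += amount


def transfer(source: str, target: str, amount: int) -> bool:
    saga = Saga()
    try:
        withdraw(source, amount)
        # Register the undo step only after the forward step succeeded.
        saga.add_compensation(lambda: deposit(source, amount))
        deposit(target, amount)
        return True
    except ValueError:
        saga.compensate()  # put the money back in the source account
        return False


ok = transfer("A", "B", 30)            # succeeds: A=70, B=30
failed = transfer("A", "missing", 50)  # deposit fails, withdrawal is compensated
```

Because compensations are registered as the code runs, whichever branch the logic takes, only the steps that actually executed are undone. The durable runtime’s contribution is the guarantee that the `except` block gets to run even if the process crashes in between.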

Philip Winston 00:27:20 That brings to mind the term separation of concerns. So the workflow code, as I’m looking at it, is focused on this happy path of things working, and I don’t necessarily have to worry about the retries. That’s kind of the goal.

Maxim Fateev 00:27:34 I think there are two types of failures. There are infrastructure failures: process crashes, the network going down, power outages, things which are not related to your business logic, and Temporal takes care of all of them seamlessly. You don’t even need to think about that. They’re not part of your code, and you don’t need to persist state to survive and recover, because state is always persisted. Every local variable in your code is durable. But then there are business-level failures. As I said, for example, if you say deposit to account and the account ID is wrong, there is not much you can do at the infra level, right? This is your business logic, and then you can practically throw an exception and deal with that exception. And in an activity you can differentiate which exceptions are retriable and which are non-retriable.

Maxim Fateev 00:28:15 You practically say, okay, wrong account ID is a non-retryable exception. Then it’ll be propagated to the workflow, and then the workflow will run saga compensation to return the money. But if the activity failed because of a timeout or the database or some other random thing, or a null pointer, it’ll be retried. So that is kind of the idea. Another interesting thing is that intermittent problems can become problems which you fix in two hours. For example, you get a null pointer, you look at the code: oh yeah, I have a bug, this is a different code path. The activity keeps retrying. You just go deploy new code with the bug fix, and two hours later, on the next retry, it goes through. You don’t need to do anything; your workflows just keep running like nothing happened, because no deployment will ever interrupt them. This is a very powerful paradigm, that you can fix things in production while they’re going without losing.
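Editor’s sketch: the split between retryable and non-retryable failures can be illustrated with a small retry loop. This is a toy model, not Temporal’s retry policy. `NonRetryableError`, `run_with_retries`, and the delay parameters are hypothetical names chosen for the example.

```python
import time
from typing import Callable, Dict, TypeVar

T = TypeVar("T")


class NonRetryableError(Exception):
    """Business-level failure: retrying cannot help (e.g. wrong account ID)."""


def run_with_retries(activity: Callable[[], T], max_attempts: int = 5,
                     initial_delay: float = 0.0, backoff: float = 2.0) -> T:
    """Call `activity` until it succeeds, fails permanently, or runs out of attempts."""
    delay = initial_delay
    for attempt in range(1, max_attempts + 1):
        try:
            return activity()
        except NonRetryableError:
            raise  # propagate to the caller, which may run compensations
        except Exception:
            if attempt == max_attempts:
                raise
            time.sleep(delay)  # wait before the next attempt
            delay *= backoff
    raise RuntimeError("max_attempts must be at least 1")


calls: Dict[str, int] = {"n": 0}


def flaky_deposit() -> str:
    # Fails twice with an infrastructure-style error, then succeeds.
    calls["n"] += 1
    if calls["n"] < 3:
        raise TimeoutError("database timeout")
    return "deposited"


def bad_account_deposit() -> str:
    raise NonRetryableError("wrong account ID")


result = run_with_retries(flaky_deposit)

try:
    run_with_retries(bad_account_deposit)
    permanent_failure = False
except NonRetryableError:
    permanent_failure = True
```

The "deploy a fix while the activity keeps retrying" scenario corresponds to `flaky_deposit` starting to succeed on a later attempt without the caller doing anything.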

Maxim Fateev 00:28:55 When people come to Temporal, their first approach is, oh, let me fail the workflow on an intermittent error. And we’re like, no, you don’t need to do that. You can keep retrying as long as you want, right? So you don’t even need to think about introducing manual processes. People right now deal with manual processes: they have dead letter queues which have to be handled manually. All these sorts of processes, scripts and all of that go away. It takes some time to internalize. It’s not something people are used to, that you don’t even need to think about these types of problems. But I think it’s super powerful after you launch Temporal.

Philip Winston 00:29:25 So you’re talking about a developer who’s maybe working with operations or is dealing with operations and they identify a problem that’s going to involve probably the web interface of Temporal. Can you tell me a little bit about what information that provides and what’s the usefulness of it?

Maxim Fateev 00:29:41 The nice thing about Temporal is that, as I said, we record every event in the workflow. We practically use event sourcing to recover the state of the workflow. It means that we record everything. So for every activity invocation you see the arguments, and for every activity completion you see the result. If an activity fails and your workflow is stuck, you can see why activities fail, how many retries there were, when the next retry is. So you can see all the information, you can see all the steps which executed so far with all the arguments and everything, and all the timing, like every timer and so on. So you can easily understand what’s going on. You have deep visibility. In the worst case scenario, let’s say you’re getting a null pointer and you don’t understand why you’re getting it because it’s a very complex code path. You can press a button, download the workflow history in JSON format, plug it into your code in your debugger, and replay that workflow as many times as you want,

Maxim Fateev 00:30:27 reproducing that problem on your local machine. So it’s a super powerful troubleshooting technique. The UI also gives you visibility into a single workflow, including history and different views. We also allow listing, because you can have hundreds of millions of workflows, billions of workflows running in your system. So we have indexing on your custom attributes, and you can list workflows and filter workflows based on different criteria, and also count them based on those criteria. So you can say, okay, give me all workflows from this customer which failed yesterday, and you can list them and go investigate the deeper issue in these workflows. Temporal is also integrated with the usual metrics, context propagation, and logging. So you get all the normal integrations which your company has, with metrics for example, so you can be alarmed if something goes wrong, because we emit metrics automatically on every activity, every workflow, every retry. Everything which is important is emitted. So you don’t need to add any custom metrics to start making sense of your application.
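Editor’s sketch: the replay technique described above can be modeled in plain Python. Each activity result is appended to a history, and a later run that is handed the same history returns the recorded results instead of calling the activities again. This is a toy model under stated assumptions, not Temporal’s history format or replayer. `WorkflowContext`, `execute_activity`, and the event fields are hypothetical.

```python
import json
from typing import Any, Callable, Dict, List, Optional


class WorkflowContext:
    """Records activity results on a live run and serves them back on replay."""

    def __init__(self, history: Optional[List[Dict[str, Any]]] = None) -> None:
        self.history: List[Dict[str, Any]] = list(history or [])
        self._position = 0

    def execute_activity(self, name: str, fn: Callable[..., Any], *args: Any) -> Any:
        if self._position < len(self.history):
            # Replay: reuse the recorded result, do not call the activity.
            event = self.history[self._position]
            if event["activity"] != name:
                raise RuntimeError(f"non-deterministic replay at step {self._position}")
            self._position += 1
            return event["result"]
        # Live execution: run the activity and record arguments and result.
        result = fn(*args)
        self.history.append({"activity": name, "args": list(args), "result": result})
        self._position += 1
        return result


activity_calls: List[str] = []


def compute_total(price: int, quantity: int) -> int:
    activity_calls.append("compute_total")
    return price * quantity


def charge(total: int) -> str:
    activity_calls.append("charge")
    return f"charged {total}"


def order_workflow(ctx: WorkflowContext, price: int, quantity: int) -> str:
    total = ctx.execute_activity("compute_total", compute_total, price, quantity)
    return ctx.execute_activity("charge", charge, total)


live = WorkflowContext()
live_result = order_workflow(live, 20, 3)
exported = json.dumps(live.history)  # stands in for the downloaded JSON history

replay = WorkflowContext(json.loads(exported))
replay_result = order_workflow(replay, 20, 3)
```

Because the replayed run never touches `compute_total` or `charge`, it can be stepped through in a debugger as often as needed without side effects.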

Philip Winston 00:31:21 I think we’ve covered all the core concepts: workflows, activities, timers, how it runs, the event history, replaying, retries on errors, and the web interface for debugging. Let’s go back to something you mentioned earlier, this idea of workflows as entities. I think in that case these are workflows that never finish or never terminate unless the entity goes away, I guess. And I think you alluded to two things there, signals and queries. Can you talk about signals and queries? Because those didn’t immediately seem like workflow concepts that I was familiar with.

Maxim Fateev 00:31:58 So we now have signals, queries, and updates. Update was recently added. A signal is a one-way call into the workflow. Practically, it’s delivering an event to a specific workflow instance. So you say signal workflow, you give the workflow ID. By the way, the workflow ID is provided by the application developer, and we guarantee uniqueness of workflows by ID. So there is a hard guarantee that you’ll never have two workflows with the same ID running at the same time in the system. So you practically get exactly-once execution guarantees for workflows. So a signal is just an event, and how it’s handled depends on the language. For example, in Java it’ll be just a callback. You say, for this signal type, these are my arguments, and every time this event is sent to the workflow, the callback will be called and the workflow can react to that, and it can be a fully asynchronous piece of code.

Maxim Fateev 00:32:44 A query is the way to get information out of the workflow synchronously. So when you say query, it’s a callback inside of the workflow. This callback cannot mutate workflow state, it can only return data, but it can return any data out of your workflow; it’s your code. So you can return a picture, you can return just a status, you can return whatever, and the caller blocks synchronously. So if you want to show something in the UI about this workflow, you will say query and it’ll block. As soon as data is returned it’ll show something in the UI. And update is kind of both together. With update, you send the request to the workflow and this is a callback into the workflow. The workflow will block this callback as long as necessary, and then it’ll return a result at some point and your caller will be unblocked. And there are two ways to invoke an update. It can be synchronous for short-lived requests, for example instant payments and things like that.

Maxim Fateev 00:33:29 But we will also have asynchronous update, when it can actually take a much longer time and you’ll do some optimized long poll for the result in the background. But conceptually you have signal, which is a one-way event; query, which just gets information out of the workflow; and update. Imagine you have this entity workflow and you want to say, I want to take some promo and do something. So you’ll call update, the workflow will update its state, maybe run some activities, and then unblock your update call, giving you information about success or rejection of this promo.
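Editor’s sketch: the three interaction styles can be contrasted with a plain Python object standing in for an entity workflow. A signal is fire-and-forget, a query only reads, and an update mutates state and returns a result to the caller. This models the semantics only; it does not use the Temporal SDK, and `PointsWorkflow` and its method names are hypothetical.

```python
from typing import Dict, Set, Union


class PointsWorkflow:
    """Toy entity workflow for a loyalty account (in-memory, not durable)."""

    def __init__(self) -> None:
        self._points = 0
        self._used_promos: Set[str] = set()

    def signal_add_points(self, amount: int) -> None:
        # Signal: one-way event, the sender gets nothing back.
        self._points += amount

    def query_balance(self) -> int:
        # Query: read-only, must not mutate workflow state.
        return self._points

    def update_apply_promo(self, code: str, bonus: int) -> Dict[str, Union[bool, int]]:
        # Update: mutates state and reports acceptance or rejection to the caller.
        if code in self._used_promos:
            return {"accepted": False, "balance": self._points}
        self._used_promos.add(code)
        self._points += bonus
        return {"accepted": True, "balance": self._points}


account = PointsWorkflow()
account.signal_add_points(100)
balance_after_signal = account.query_balance()
first_promo = account.update_apply_promo("WELCOME", 50)
second_promo = account.update_apply_promo("WELCOME", 50)
```

In the real system the update caller would be blocked across process boundaries until the workflow responds; here the blocking is just an ordinary method call.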

Philip Winston 00:34:00 Actually give me one more example with update. I’m not totally clear on that, just walk me through it again.

Maxim Fateev 00:34:05 Okay, let me give you a different example. Imagine you want to implement a page flow, like a wizard, right? Show page one. Like, I don’t know, you are implementing a checkout pipeline, right? I worked at Amazon on the ordering service so I kind of have some ideas there. So you have a checkout pipeline. You show the first page, for example your shopping cart, right? Then you select a few buttons and say next, and based on what you selected, the next page will be different. Then you enter whatever payment information, you click next, and the next page is different. With update, you could have, let’s say, a checkout pipeline workflow, and it will be blocked waiting for that page to return data. And then you will say, okay, here’s my data. This will unblock the workflow, which can execute any logic, including running activities. And then it can say, okay, now show a different page, right? It’ll practically return the new page information, and again it’ll get blocked there. So you can model the whole wizard as this practically blocking workflow. Imagine, right? Show page one, blah blah blah business logic, show page two, blah blah blah business logic. And it can be as complex as you want because it’s code, and practically a stateful session is a workflow. That is a very good way to implement that.

Philip Winston 00:35:10 How about interceptors? That’s something that maybe is strictly on the client side. I wasn’t sure.

Maxim Fateev 00:35:17 So at this point we only have client-side interceptors. We are considering server-side ones because there are some scenarios for them. But the idea of interceptors is that sometimes you want to create functionality for specific business use cases. For example, it can be as simple as: every time some activity completes, I want to emit an event to Kafka to go to my warehouse. You can write an interceptor, because we intercept all the API calls to the workflow and from the workflow, and you can write an interceptor which will run an activity which publishes to Kafka, for example, after every activity completion. And then you just register this interceptor with the worker and every workflow will automatically get that function. And there are obviously a lot of use cases for interceptors. They allow you not to wrap APIs, because before interceptors existed people would say, oh, now I need to wrap Temporal APIs to get this functionality. But now you can write an interceptor and your normal workflows using standard APIs will get these benefits out of the box.
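Editor’s sketch: the interceptor idea, wrapping every activity call without changing workflow code, can be modeled as a chain of functions registered with a worker. This is a toy model, not the Temporal interceptor interface. `Worker`, `publish_completion`, and the `published` list (standing in for a Kafka topic) are hypothetical.

```python
from typing import Any, Callable, Dict, List

Next = Callable[[], Any]
Interceptor = Callable[[str, Next], Any]


class Worker:
    """Toy worker that routes every activity call through its interceptors."""

    def __init__(self, interceptors: List[Interceptor]) -> None:
        self._interceptors = interceptors

    def execute_activity(self, name: str, fn: Callable[..., Any], *args: Any) -> Any:
        def innermost() -> Any:
            return fn(*args)

        handler: Next = innermost
        # Wrap from the last interceptor inward so the first one runs outermost.
        for interceptor in reversed(self._interceptors):
            handler = self._wrap(interceptor, name, handler)
        return handler()

    @staticmethod
    def _wrap(interceptor: Interceptor, name: str, next_handler: Next) -> Next:
        return lambda: interceptor(name, next_handler)


published: List[Dict[str, Any]] = []  # stand-in for messages sent to Kafka


def publish_completion(name: str, next_handler: Next) -> Any:
    # Run the real activity, then emit an event describing its completion.
    result = next_handler()
    published.append({"activity": name, "result": result})
    return result


worker = Worker([publish_completion])
total = worker.execute_activity("add", lambda a, b: a + b, 2, 3)
```

The calling code uses the ordinary `execute_activity` entry point and still gets the publishing behavior, which is the cross-cutting benefit described above.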

Philip Winston 00:36:13 So you have some cross-cutting concern that you want to kind of inject into every workflow you’re writing.

Maxim Fateev 00:36:19 Exactly or activity.

Philip Winston 00:36:20 I think that’ll let us segue into talking about the client SDKs, the per-language SDKs. So I think we covered the core concepts and we covered some additional concepts. So you have SDKs for Go, Java, PHP, Python, TypeScript, and coming soon is .NET. I read though that at least some of the client libraries are built on a Rust core. Can you talk about how that came about, and yet there’s no Rust SDK? So can you kind of just tell us what the structure of the client SDKs is?

Maxim Fateev 00:36:54 So our clients are pretty fat clients because they implement a pretty complex state machine, because they need to make sure that this code execution is correct, and the API of the server just gives you a history. So you practically get a bunch of events and you need to convert that back, through a lot of state machines, to actual code execution. And we also want to make every SDK as native to the language user as possible, right? So if you are using TypeScript, you want it to feel like normal TypeScript; you don’t do anything unnatural, right? If you are using .NET, you want to use normal async from .NET, right? If you are doing Python, for example, we use asyncio from Python, and if you do Java we actually do blocking calls, you just do synchronous calls, because Java doesn’t really have something like async/await yet, right?

Maxim Fateev 00:37:39 And if you do Go, you want to do channels and select and so on. So it means that every SDK first contains this logic of how to make it as language native as possible. But then underneath is a very complex state machine, and what we found is that this common state machine is probably 80% of the code and the complexity. So we decided to write a single library which will kind of encapsulate all this complexity, and we thought about what we would write it in, and we found that Rust would actually be a very good fit, first because Rust integrates with a lot of languages pretty well. There are very good native integrations with Python, for example, and with a lot of other languages, and it also allows you to do C APIs as well if you need.

Maxim Fateev 00:38:19 And also our development team just likes Rust for obvious reasons. Let’s put it this way: after we wrote the project, which took a year and is a very complex project, I don’t think we ever had any crashes, right? Or anything which is beyond a logical bug. We had logical bugs in it, obviously, but we never had a bug related to the implementation, which is amazing for such a complex piece of technology. So the Rust core is just a library, and then Python, .NET, and TypeScript all sit on top of that library and are just much, much simpler implementations. Our Java and Go SDKs, which were written before the core, still implement these state machines independently. There are some benefits to that, because I know that Go and Java developers don’t like native libraries. For example, Python developers are okay having a shared library linked into their code, but Java developers don’t like that. So we are not sure if we’re ever going to put core there. We are thinking about different ways to do that, but conceptually it would be ideal if we could put every SDK on the core; we’ll see. And one day we absolutely will have a Rust SDK on top of this core; we just didn’t have enough time and resources to prioritize that.

Philip Winston 00:39:25 One workflow feature that I imagine is required in all of these languages would be parallel execution. So I have five tasks I want to run all at the same time. I don’t necessarily know which one is going to take the longest. Can you describe that process in whichever language you like to use as an example? How do we do parallel execution?

Maxim Fateev 00:39:45 Yeah, it depends very heavily on the language. Let’s say in Java, you would just invoke them asynchronously and get back a promise. A promise is very similar to a future, but it’s Temporal-specific for practical reasons of determinism. And then you will just loop over those promises and say promise.get and block there, or you can just say wait for the first promise to be ready from this list if you want more complex logic. And if you’re using something like .NET, you’ll just use async functions, right? And then you’ll await on the tasks which were returned from those calls. So you’ll just use normal language constructs to do parallel execution. There is nothing magical about that. In Go you could use goroutines, so you could just run five parallel goroutines. The other option in Go is that you can invoke activities and get back a future. So you can use an async type of programming using future.Get. So you have options, but you have the full power of the Go language, including goroutines, to implement parallel processing.
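Editor’s sketch: the "use normal language constructs" point can be shown with Python’s standard `asyncio`, which the episode says the Python SDK builds on. The example below is plain `asyncio` with a fake activity and no Temporal calls. The function names and the delays are made up for illustration.

```python
import asyncio
from typing import List

DELAYS = [0.3, 0.01, 0.2, 0.25, 0.15]  # seconds; task-1 is clearly the fastest


async def fake_activity(name: str, delay: float) -> str:
    # Stand-in for a long-running activity.
    await asyncio.sleep(delay)
    return f"{name} done"


async def run_all() -> List[str]:
    # Start five tasks in parallel and wait for all of them.
    tasks = [asyncio.create_task(fake_activity(f"task-{i}", d))
             for i, d in enumerate(DELAYS)]
    return await asyncio.gather(*tasks)  # results keep the submission order


async def run_first() -> str:
    # Start five tasks in parallel and return as soon as one finishes.
    tasks = [asyncio.create_task(fake_activity(f"task-{i}", d))
             for i, d in enumerate(DELAYS)]
    done, pending = await asyncio.wait(tasks, return_when=asyncio.FIRST_COMPLETED)
    for task in pending:
        task.cancel()  # the remaining results are not needed
    return next(iter(done)).result()


all_results = asyncio.run(run_all())
first_result = asyncio.run(run_first())
```

`asyncio.gather` corresponds to "loop over the promises and block on each", and `asyncio.wait` with `FIRST_COMPLETED` corresponds to "wait for the first promise to be ready".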

Philip Winston 00:40:37 So echoing back to how Temporal works and how replaying event works, but tying it to this parallel execution case, suppose I do launch five tasks in parallel and let’s say they’re all long running. I’m curious what happens when one of them finishes. Now let’s say my code is going to wait on all of them to finish. Does my workflow keep getting rerun sort of as each one finishes or does the system know to wait for all of them?

Maxim Fateev 00:41:04 Right now we don’t have a server-side wait for all of them. The workflow will be notified about each of them completing and then it’ll continue blocking. If they are not very long running, the workflow will be cached, so it won’t be very expensive, but there is still some overhead there. If they are very long running, yes, the workflow will be recovered multiple times, but again, if they’re so long running, it’s probably not a problem. We considered adding some feature like, okay, notify the workflow only when all of them are ready. But so far it’s just an optimization. I don’t think it was really a pain point for anyone so far.

Philip Winston 00:41:35 So let’s finish up talking about the client SDKs, and then I’m going to move on to Temporal cluster and Temporal cloud. Let’s start with the cluster. Can you talk about what is involved in setting that up as a developer? I know there’s a single binary; how does that work, and then how would you move on to a production implementation?

Maxim Fateev 00:41:54 Yeah, so for local development, if you’re on a Mac you just do brew install temporal, and you get the Temporal binary, which is the Temporal CLI plus a full-featured server in a single binary. And then you just say Temporal start dev server, and by default it starts the server with an in-memory SQLite database. So it’s pretty good for development, because every time you restart it you just remove all the state, which is nice. You also get the UI, so you just have the web UI right on localhost. So as soon as you have the single binary, you have a full environment to do development. I think one thing I forgot to mention: we also have unit testing frameworks which don’t require a server. So it means that, for example, if in Java you want to unit test your workflow, you just use our supporting classes and they will work even if your workflow blocks for a long time.

Maxim Fateev 00:42:40 For example, if the workflow says sleep two weeks, the unit testing framework will discover that and jump time two weeks ahead the moment the workflow is blocked. So you can test in milliseconds workflows which run for weeks; that’s unit testing. But then if you want to do real development, you use the single binary, and then you can deploy to your infrastructure. You can also run locally in other ways; some people use Docker Compose. If you want to have a multi-role installation, you absolutely can use Docker Compose, which runs Temporal roles as separate Docker containers. And, for example, if you want to look at how metrics look, then you would use Docker Compose, because the single binary doesn’t include Prometheus, for example. As for how people deploy Temporal itself, it is just a binary compiled from Go; the backend server is written in Go. So you can deploy it any way you want.
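Editor’s sketch: the time-skipping behavior mentioned at the start of the answer above can be modeled with a virtual clock. Here a workflow is a generator that yields how long it wants to sleep, and the test environment advances its own clock instead of waiting. This is a toy model, not the Temporal test framework. `TimeSkippingEnvironment` and `reminder_workflow` are hypothetical.

```python
from typing import Generator, List

TWO_WEEKS = 14 * 24 * 60 * 60  # seconds


def reminder_workflow(log: List[str]) -> Generator[int, None, None]:
    """Hypothetical workflow that sleeps for weeks between steps."""
    log.append("subscribed")
    yield TWO_WEEKS  # "sleep two weeks"
    log.append("reminder sent")
    yield TWO_WEEKS
    log.append("trial ended")


class TimeSkippingEnvironment:
    """Runs a workflow generator, jumping virtual time forward on every sleep."""

    def __init__(self) -> None:
        self.now = 0  # virtual seconds since the test started

    def run(self, workflow: Generator[int, None, None]) -> None:
        for sleep_seconds in workflow:
            # The workflow is blocked on a timer: skip ahead instead of waiting.
            self.now += sleep_seconds


steps: List[str] = []
env = TimeSkippingEnvironment()
env.run(reminder_workflow(steps))
```

The run finishes immediately in wall-clock terms while `env.now` reports four weeks of workflow time.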

Maxim Fateev 00:43:25 So if you want to run it on bare metal, you absolutely can: take the Temporal binaries, SCP those binaries to 50 machines, and configure them correctly. Make sure that these machines can talk to each other, because Temporal requires direct communication, and after that it will just work. And then you probably want to make sure you put a load balancer in front of your front ends. But obviously these days most people don’t do that. They use Kubernetes or some other similar technology, and then you just deploy Temporal through a Helm chart, or you write your own Helm chart to deploy it, or you use whatever, ECS and so on. So Temporal is absolutely not opinionated about how you run those binaries, right? There are certain requirements around the front-end load balancer and communication between nodes, and then you need a database. You need to provision the database and provision the schema, and then you just give the Temporal cluster the connection to the database.

Philip Winston 00:44:14 Speaking of databases, you mentioned the in-memory, I think it was MySQL, for the single binary.

Maxim Fateev 00:44:20 SQLite.

Philip Winston 00:44:20 Oh sorry, SQLite. And then you mentioned restarting it as in a way a good thing because it resets the state. So what exactly is in the database? Is it strictly workflows that are currently executing with maybe some history for debugging or what else is contained in there?

Maxim Fateev 00:44:38 So it contains workflow state, the history of the workflow, and an index of workflows, if you need to list multiple workflows. And there are technical things, for example durable timers, because workflow state by itself is not enough, right? For example, if it’s sleeping for 30 days, you want to have a durable timer, and you have a lot of other moving parts. But conceptually, from your point of view, yes: workflow state, history, tasks, and index. In production deployments, especially if you have large scale, we recommend Elasticsearch for that; we integrate with it. So the index would be in Elasticsearch.

Philip Winston 00:45:11 Going back to the workflows as entities, if in that case some of my workflows will run potentially forever, is there a decision to be made about how much state I allow to be in the workflow versus still having a separate database of my own? Is that a decision to be made and what do you feel people tend to do with that?

Maxim Fateev 00:45:32 It’s a very good question. So for example, for the use case I described for airline points, I know very large scale production use cases which don’t use a database; they just store the state there. At the same time, it’s not that much state, right? It’s just points and maybe the IDs to dedupe events, like some recent events to dedupe. But there are some basic limitations. We don’t store the state of the workflow directly, we only store the results of activities, because we record events. So workflow input arguments and activity results would be the state. And we limit the size of the history to 50 megabytes, and every activity result cannot be larger than two megabytes. So technically even 25 activities returning two megabytes each will exceed that limit. So we don’t recommend that. If you need to pass large blobs, Temporal is not the technology to pass large blobs around.

Maxim Fateev 00:46:22 We recommend storing blobs in some other system and passing pointers around. So we’re not a big data system. One caveat with always-running workflows, with entity workflows: imagine they run forever. Let’s say they have some event loop processing events, and the size of this history becomes unbounded, right? Because it’ll keep growing. So we have a special mechanism, we call it continue-as-new, where you can practically do this tail recursion elimination: you kind of call this workflow again from the beginning and you pass state explicitly, and then the history is reset to zero. So practically this workflow is expected to periodically call this operation to reset the history size. This way workflows can run forever and have practically unlimited duration and length. That is kind of the mechanism we provide. It also allows you to upgrade code, because upgrading code is nontrivial when a workflow has already started. So if you call continue-as-new periodically, there are guarantees around code upgrades. So it makes that easier as well.
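Editor’s sketch: the continue-as-new mechanism, restarting the workflow with explicitly passed state and an empty history, can be modeled with an exception that carries the state to the next run. This is a toy model, not the Temporal SDK call. `ContinueAsNew`, `points_workflow`, `run_entity`, and the `max_history` threshold are hypothetical.

```python
from typing import Iterable, Iterator, List, Tuple


class ContinueAsNew(Exception):
    """Asks the runner to restart the workflow with this state and a fresh history."""

    def __init__(self, state: int) -> None:
        super().__init__("continue as new")
        self.state = state


def points_workflow(state: int, events: Iterator[int], history: List[int],
                    max_history: int) -> int:
    # Event loop of an entity workflow: every processed event grows the history.
    for event in events:
        state += event
        history.append(event)
        if len(history) >= max_history:
            raise ContinueAsNew(state)  # hand the state to a fresh run
    return state


def run_entity(all_events: Iterable[int], max_history: int = 3) -> Tuple[int, int, int]:
    """Returns (final state, number of runs, longest history seen in any run)."""
    events = iter(all_events)
    state, runs, longest = 0, 0, 0
    while True:
        history: List[int] = []  # each run starts with an empty history
        runs += 1
        try:
            state = points_workflow(state, events, history, max_history)
            return state, runs, max(longest, len(history))
        except ContinueAsNew as restart:
            state = restart.state
            longest = max(longest, len(history))


final_state, run_count, longest_history = run_entity([10, 20, 30, 40, 50, 60, 70])
```

Seven events are processed across three runs, and no single run ever holds more than three history entries, which is the bounded-history property described above.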

Philip Winston 00:47:12 I think you’re alluding to workflow versioning, which is something I was going to touch on, but now might be a good time. That does seem like a challenge. So especially if you have these workflows that are running a long time, the code can potentially change. How do you know if a workflow execution is compatible with the new code or vice versa?

Maxim Fateev 00:47:30 Yeah, that is in general a hard problem. Imagine updating code while something is in flight, right? So there are two approaches to versioning in all kinds of workflow-like, long-running systems. One is you just pin the version the moment you start the workflow. You practically say this workflow started with version one, and then you deploy version two and new workflows are pinned to version two, and then you cannot really fix the bug in version one, right? Because only new workflows will benefit from the changes. It works very well for relatively short workflows. If your workflows run up to an hour, or like a day, it’s actually a very common approach and we recommend it because it just makes your life easier. But if you have a workflow which runs for a month, and it doesn’t call continue-as-new because it’s just a sequence of steps which takes a month, then being able to deploy and run a pool of workers for every version becomes a daunting task.

Maxim Fateev 00:48:19 And it can be just not practical. So Temporal provides what we call patching: being able to patch workflows which are already running. And we do it by practically keeping two versions of the code. You have an old version of the code and a new version of the code inside of your code. And you can put it anywhere. It can be in a library, it can be just one function, you can do it in multiple places, but you just say: if old version, run the old code; if new version, run the new code. And then, through the API, you can verify that all workflows on the old version have completed, and then you can remove the old branches. But it practically allows you to fix bugs in workflows which are already running and guarantee that they’re compatible, because we have special conditions for versioning. And then we provide ways to test that. So we have unit testing support and replay support to ensure that you didn’t break compatibility by mistake.
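Editor’s sketch: the "if old version, old code; if new version, new code" pattern can be modeled with a marker that a new run records and a replayed run looks up. This is a toy model of the idea only. `WorkflowRun`, its `patched` method, and the shipping-fee rule are hypothetical and are not claimed to match the Temporal SDK’s patching API.

```python
from typing import Iterable, Optional, Set


class WorkflowRun:
    """Tracks which patches a workflow execution has taken."""

    def __init__(self, recorded_markers: Optional[Iterable[str]] = None) -> None:
        # A run created from recorded markers is a replay of an existing execution.
        self.replaying = recorded_markers is not None
        self.markers: Set[str] = set(recorded_markers or ())

    def patched(self, patch_id: str) -> bool:
        if self.replaying:
            # Existing execution: take the new branch only if it did originally.
            return patch_id in self.markers
        # New execution: record the marker and take the new branch.
        self.markers.add(patch_id)
        return True


def shipping_fee(run: WorkflowRun, order_total: int) -> int:
    if run.patched("free-shipping-over-100"):
        return 0 if order_total > 100 else 5  # new code path
    return 5  # old code path, kept for workflows started before the change


old_run = WorkflowRun(recorded_markers=[])  # started before the patch existed
new_run = WorkflowRun()                     # started after the patch was deployed

old_fee = shipping_fee(old_run, 150)
new_fee = shipping_fee(new_run, 150)
replayed_new_fee = shipping_fee(WorkflowRun(recorded_markers=new_run.markers), 150)
```

Once no execution without the marker remains, the old branch in `shipping_fee` can be deleted, which mirrors the "remove old branches" step described above.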

Philip Winston 00:49:04 Versioning does sound like it can get complicated, but I imagine if you didn’t even have an explicit representation of your workflow at all, then versioning is still going to be a huge problem, if you just weren’t using a workflow system like this.

Maxim Fateev 00:49:19 It’s much worse. It’s impossible, because you can do trivial changes, backwards-compatible changes, but the moment you need to do an incompatible change, your logic is spread out across multiple services. So your sequencing logic is just everywhere and it’s not in one place. It’s practically impossible. I can tell you we had customers who said they’re stuck, they cannot make changes. They’re scared because they don’t know what’s going to break. Because with event-driven systems, especially choreography-based systems, you can think of them as one big set of global variables. When people say, oh, it’s good that you can publish a message and some service will consume it without me ever knowing, it’s not a good idea. It’s like a global variable. You update it, and you have no clue which other parts of your code will be broken. So these systems are extremely hard to maintain, and it’s hard to guarantee that they’re not going to break with changes.

Philip Winston 00:50:02 So these next topics about Temporal cluster I think are going to lead us into talking about Temporal cloud because we’re going to talk about when you need to scale up, what happens and how do you manage that. So there’s an idea of multi cluster replication. Is that for performance or for reliability or both?

Maxim Fateev 00:50:22 It’s purely for reliability. Let me talk about performance and scalability first, and then we can talk about multi-cluster. Temporal is designed to scale almost infinitely. Practically, it can saturate any database. So far we couldn’t find a database we couldn’t saturate. It means that if you have an application on Temporal and it’s configured correctly and your cluster is configured correctly, the bottleneck is always the database, and it’s a CP database. So what happens is that with MySQL and Postgres there is no sharding built in, so you can only scale up. I know that some companies use Vitess, so it’s possible to scale them out. We don’t provide direct support for that, but in most cases you can get the biggest instance and scale up to a certain point, and then you hit the wall. If you use Cassandra, Cassandra allows you to scale out to more hosts, so with Cassandra you can go to a much larger scale.

Maxim Fateev 00:51:10 We’ve seen people running hundred-node Cassandra clusters to run certain workloads, and Temporal can keep up; the cluster itself can always keep up with the database. So that is how you would do that. If your use case is low rate, you can pick MySQL or Postgres, fine. But if you need to go to high rates, then you will probably need Cassandra if you are running a self-hosted version of Temporal. Going back to multi-cluster: Temporal has a very unique way to guarantee reliability across regions and across availability zones. Every cluster can be deployed across availability zones, right? You can absolutely deploy Cassandra to be in three availability zones. You can deploy the Temporal cluster to be distributed, so it can sustain a single zone outage without a problem. But what happens if the whole region goes down or the database gets corrupted? Temporal allows you to run more clusters in different regions, and these clusters are absolutely independent.

Maxim Fateev 00:51:58 They have their own storage, even different types of storage. So one cluster can run MySQL and another cluster can run Cassandra, for example. And they communicate through a top-level, high-level application protocol, which is Temporal specific. We practically ship events, right? We ship the same events we put into the event history. What it means is that these clusters are so independent that there is no blast radius problem; you cannot give a single command which would break, for example, both clusters. So architecturally it’s a much more available system than anything you see out there, especially multi-region databases, because with these databases you can always give a single schema update or a single bad command which will bring down the whole system. As we know, most outages are operator error, upgrade error, version error, and Temporal allows you to run these clusters even on different versions.

Maxim Fateev 00:52:42 So by design this multi-region setup and failovers are very, very highly available. And also, because it’s asynchronous replication, because for performance we don’t want to wait for round trips to the remote region, we actually have conflict resolution code. Databases just look at the last timestamp and drop data which is beyond that. Temporal actually detects conflicts and has conflict resolution code which allows for not losing data and events even in case of conflicts, for example if not every event was propagated before failover, things like that. So certainly our multi-region support is very powerful, and for a lot of companies, and when it was written at Uber, that was the main requirement to run important workloads. You couldn’t run a tier one workload without surviving a full data center outage or a full region outage. That doesn’t mean that it improves performance, right? This is purely for availability. Because every cluster is independent, it has its own scale limits; the assumption is that the remote cluster can keep up with the same scale, so usually they’re the same size. But each individual namespace can be failed over individually. Temporal has a notion of namespace, so it’s a multi-tenant system, and you don’t even need to fail over the whole cluster. You can fail over an individual namespace if necessary. But it doesn’t make the cluster faster. It just makes it more reliable.

Philip Winston 00:53:54 So I think that’s a good introduction to Temporal cloud because you mentioned namespace and I think you’re billing the cloud as a namespace as a service and not just a hosted cluster. Can you tell me the difference between those two concepts and what are the advantages of namespace as a service?

Maxim Fateev 00:54:10 So open source has a notion of namespace. If you deploy a cluster you can create multiple namespaces in it, but you still have a cluster. In Temporal cloud, we just give you a namespace, and the cluster concept is not even exposed. Obviously behind the scenes we still have clusters, but it’s our problem. It’s not a problem of the user of the cloud. It’s the same as when you go to S3 and you say create a new bucket: you’re absolutely unaware of the backend of S3, right? Maybe they have clusters, maybe they don’t, right? Maybe it’s one; I doubt it’s one, right? But it’s absolutely hidden. It’s the same thing with Temporal cloud. You get a namespace, you practically go through APIs, so you create a new namespace, you get a namespace endpoint, and you can start using it right away. And it’s on Temporal to provide scalability and reliability of that namespace, and we can scale up practically infinitely on a single namespace.

Maxim Fateev 00:54:54 We ran tests where we ran 300,000 actions per second and we could go higher, we just didn’t want to pay a higher AWS bill for that. So we can support very high throughput use cases on that. And the reason we can do that is because we practically wrote our own storage. As I said, storage is pluggable so we can have different databases, and we wrote our own database. And the reason we could do it is because we wrote the database practically for a single API call. Certainly we don’t have the resources to write a general purpose database and maintain our system. But what we did, we realized that Temporal has very specific access patterns and it’s built for very specific update and read patterns, and we wrote a database practically for that very narrow use case. It allows it to be super efficient and super fast and scalable just for that use case. It’s certainly not a general purpose database, but it allows our cloud to scale well beyond, for example, anything you can reach with Cassandra, and also provide higher availability than any of the existing databases out there.

Philip Winston 00:55:48 You mentioned S3 and I imagine your situation is similar where you need to accommodate a large dynamic range of customer sizes. So you might have a lot of customers starting out, they’re just getting used to Temporal and they’re really using a fraction of a cluster on your side. But then you have customers that have hit their stride, maybe multiple teams are adopting Temporal, they could scale up and essentially be using multiple clusters worth of compute behind the scenes.

Maxim Fateev 00:56:14 We are multi-tenant, we actually don’t provide clusters, as I said. So every cluster can run multiple namespaces for multiple customers, and we certainly perform some logic to allocate namespaces to clusters to make sure that they are evenly distributed. Also, we obviously give enough headroom and we also have scaling features to be able to sustain spikes, and we can have customers which have very high throughput and low throughput. Also, as you said, if you have multiple teams using Temporal, every team probably will have their own namespaces so they don’t even need to be in the same cluster. But at the same time, if you have a namespace and use case which requires an extremely high rate, we absolutely can support that. An example: every Snapchat story update is a Temporal workflow going through our cloud. So we can go to a very high rate, and imagine the number of Snapchat stories at New Year’s Eve or the Super Bowl. So one of the reasons Snap is using our service is they don’t need to think about scaling up for these events, because it’s on the Temporal service itself.

Philip Winston 00:57:10 How about retention with Temporal Cloud or analytics? I imagine that you don’t want the same database being used for the real-time workflows as I might want to use to run analytics on the last year’s worth of jobs. Is there any accommodation for that?

Maxim Fateev 00:57:27 Yeah, so you can store workflows inside of a namespace for up to three months in Temporal Cloud right now. We might increase that in the future, but after that, or before, at any time you have the option to configure the export feature. And export is practically just taking your workflow history in an appropriate format and just putting that into your own S3 bucket. So practically, after workflows complete, we give it to you and then you can do whatever you want with them. So if you want to just keep them for compliance forever, or you want to run some MapReduce job on them, you absolutely can do all of that. So that is a very common scenario for export.

Philip Winston 00:58:03 And that kind of relates to observability in general. Since this is running in the cloud, what sort of hooks do I have if I want to do my own? I don’t know, debugging or accounting.

Maxim Fateev 00:58:14 First, obviously you get the UI. One thing I forgot to mention: everything the UI does goes through the well-defined gRPC interface. So you absolutely can build your own version of the UI, let’s say, doing that. Second is that we have a CLI, so everything you do in the UI, and even more, you can do through the CLI, which talks to the same interface. So you absolutely can look at a workflow, list workflows, send signals, send commands, queries, and so on through the CLI. So the CLI is pretty powerful. In the cloud, you also get access to the same UI, even more powerful, and then you have the CLI, and for metrics we can expose a specific endpoint for Prometheus metrics. So you can configure your own endpoint with your own certificate, so you would be able to access your own metrics. Also, SDKs emit metrics, and most of the metrics are actually emitted by the SDK. So only some metrics, which are server-side only and not visible to the SDK, are emitted through that endpoint. They’re mostly performance related, which are needed to optimize your workload, and they’re exposed through the cloud, but you have access to that.

Philip Winston 00:59:10 And if I need to encrypt my inputs and outputs and so I can’t natively observe them on the UI, there’s a codec server, something that’s going to allow me to see the actual data.

Maxim Fateev 00:59:23 Yeah. Okay, let me just give a little bit of the big picture here first. I think the first thing is, when we ask, for example, whether you want to use Temporal Cloud versus the open-source version, a very common answer, immediately, is: there is no way we would ever use your SaaS offering, right? Temporal Cloud is a SaaS offering and it’s a usual reaction. What happens is that after we go and explain how Temporal Cloud works, most people say, oh yeah, it’s absolutely practical and we can pass security review, and we pass security reviews of the biggest banks in the world, which are very strict. Why? A few things. First, as you mentioned, encryption. So Temporal is not a database, it doesn’t need to look into the data sent to the backend cluster, right? First is that code runs on your premises, right?

Maxim Fateev 01:00:00 Because Temporal just keeps the backend cluster, the same way as Confluent runs your Kafka cluster and you run your application, which publishes to and reads from that cluster, on your premises. Temporal is the same way. You link the SDK, you deploy the application into your system any way you want. Temporal doesn’t know how you deploy your application. All it needs is a connection to the Temporal cluster. You need only an outgoing connection from your VPC or your data center to Temporal, because Temporal never connects back. It’s always your code to Temporal, and it’s through gRPC. So you need only one port open there and then you run your code. It’s only outgoing connections, and then you can encrypt everything before sending it there because Temporal never looks in the payloads. So if you have an activity input or a workflow input, it’ll be encrypted using your codec server, your encryption algorithm, your keys, and Temporal will never see anything in clear text.

Maxim Fateev 01:00:49 And then we have a special UI plugin. Practically, in the UI you can say, okay, I’m running JavaScript in my browser, I want a codec which is local to my VPC, and the browser will directly connect to that codec server and decrypt things if I have permissions. So Temporal Cloud never sees that, because the UI runs in your browser. So unencrypted data never leaves your internal data center or your VPC. So I think what’s important to understand is three things: Temporal doesn’t run your code, it never sees anything in clear text, and there are no connections besides the connection to the Temporal endpoint. And these three things are enough to pass practically a security review of the most strict organizations.
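[Editor’s sketch] The idea that payloads are encrypted on the customer side before they leave the VPC, and decrypted only by a customer-run codec, can be illustrated with a toy Python example. This is not the Temporal SDK’s codec interface; the `ToyCodec` class and its methods are hypothetical. The cipher is a deliberately simple hash-based keystream built from the standard library so the example is self-contained, and it is an assumption for illustration only, not something to use for real data.

```python
import hashlib
import os


def _keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    """Derive `length` pseudo-random bytes from key and nonce (toy construction)."""
    out = bytearray()
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(key + nonce + counter.to_bytes(8, "big")).digest()
        counter += 1
    return bytes(out[:length])


class ToyCodec:
    """Hypothetical client-side codec: the key never leaves the caller's process."""

    def __init__(self, key: bytes) -> None:
        self._key = key

    def encode(self, plaintext: bytes) -> bytes:
        # A fresh random nonce per payload, stored in front of the ciphertext.
        nonce = os.urandom(16)
        stream = _keystream(self._key, nonce, len(plaintext))
        return nonce + bytes(a ^ b for a, b in zip(plaintext, stream))

    def decode(self, blob: bytes) -> bytes:
        nonce, body = blob[:16], blob[16:]
        stream = _keystream(self._key, nonce, len(body))
        return bytes(a ^ b for a, b in zip(body, stream))


codec = ToyCodec(key=b"key-held-only-inside-your-vpc")
activity_input = b'{"account": "12345", "amount": 250}'

# This opaque blob is all a hosted service would ever store or display.
wire_payload = codec.encode(activity_input)

# Only a party holding the key (your worker or your codec server) can read it.
restored = codec.decode(wire_payload)
```

A real deployment would use a vetted authenticated cipher and proper key management. The point of the sketch is only the shape of the flow: encode before sending, decode inside your own network.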

Philip Winston 01:01:25 And I think that goes back to the start of the show talking about separation of concerns, which is that Temporal doesn’t need to know these inner details of my code because that’s just not its domain. That’s not what it’s responsible for.

Maxim Fateev 01:01:38 Correct. Yeah, because Temporal doesn’t understand your workflow logic, it doesn’t see any of your code. All it sees is practically events, right? Run this activity, complete this activity and create this timer, run this timer. So it’s very kind of low level details but your business logic lives in your code and Temporal never sees it.

Philip Winston 01:01:56 Okay, that makes a lot of sense. How about pricing? How does that work with Temporal cloud?

Maxim Fateev 01:02:00 So we are a hundred percent consumption based. So it means that you pay only for what you use. The unit of consumption is what we call an action. So every activity execution is an action, every signal is an action.
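[Editor’s sketch] Consumption-based pricing per action amounts to simple counting. The Python below is a hypothetical illustration: only activity executions and signals are counted because those are the two action types named in the conversation, the event labels are invented, and the price per action is a caller-supplied placeholder rather than a real rate.

```python
from collections import Counter

# Only the two action types mentioned in the interview; the labels are assumptions.
BILLABLE_KINDS = {"activity_execution", "signal"}


def count_actions(events):
    """Count billable actions by kind, ignoring anything not known to be billable."""
    return Counter(kind for kind in events if kind in BILLABLE_KINDS)


def estimate_cost(events, price_per_action):
    """Total cost = number of billable actions times a caller-supplied price."""
    return sum(count_actions(events).values()) * price_per_action


observed = ["activity_execution", "signal", "activity_execution", "local_bookkeeping"]
actions = count_actions(observed)

# Placeholder price of 2 units per action, purely to show the arithmetic.
cost = estimate_cost(observed, price_per_action=2)
```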

Philip Winston 01:02:13 Alright, I think that covers Temporal Cloud. We have to start wrapping up now. You mentioned the single binary as being a good way to get started with Temporal. Is there any other advice you have for someone just starting out? Where should they look? Is it on the website, videos? What do you recommend?

Maxim Fateev 01:02:30 I absolutely recommend doing our courses. So if you go to our website, and I think it’s under resources, we have links on how to learn Temporal, and we have getting started guides, and we also have courses. I absolutely recommend doing the courses. We have 101 and 102 courses for all major languages. And if you want to just learn Temporal, understand Temporal, for anyone who starts with that I absolutely recommend doing the course, because it’s code, but you need to understand how these things work, right? There’s so much magic, because the way I describe it, it looks like magic, but when you do the course, you understand how the magic works, it becomes obvious, and then you can just focus on your application without thinking and running into some edge cases. So absolutely, please do the courses. Also join our Slack community. So we have a community forum, we have our Slack, around 9,000 people in Slack I think right now. So we have a pretty healthy community, where people get help all the time. And you can find me there as well. So if you go to our Slack, my name is there, Maxim Fateev, you can always find me there and ask questions.

Philip Winston 01:03:28 How about when users are advanced and they want to get involved in implementations since it is open-source, are there special channels on the Slack for that or is there a mailing list?

Maxim Fateev 01:03:38 There is a channel on the Slack and it’s all on GitHub, so you can always file issues and requests and so on. So you can communicate through that as well. There are quite a few large contributions, especially around the SDKs. For example, the Ruby SDK was contributed by Coinbase and I think right now Stripe and Coinbase maintain that together. And I think there is also a Scala SDK. There are some other SDKs being written. So these are the biggest contributors I think right now. Also there are companies building open-source projects, like, people build open-source projects using Temporal as an engine. So that is also a pretty common use case.

Philip Winston 01:04:11 Oh, can you explain that for a little bit? I wasn’t clear. So as an engine, how does that work?

Maxim Fateev 01:04:16 So one example I can give probably is Netflix. The internal version of Spinnaker, which is practically a CI/CD pipeline, right, a deployment pipeline: they rewrote that using Temporal as the underlying implementation. So imagine you are trying to do a startup which creates a new machine learning, whatever, platform, and it’s open-source, for example. You absolutely can use Temporal as the core and then build your business logic around that. So you can absolutely build much more complex applications and save a lot of time, right? Because you practically just write your business logic. And we actually have a startup program, I forgot to mention that, because for a lot of startups like us, it’s a very good value proposition: you write your code faster, you write less code, it’s easier to troubleshoot, and we guarantee it’ll scale infinitely if your company becomes successful. We had startups growing like a thousand x, 10,000 x within a few months on their traffic and they just didn’t need to change a line of code. Our cloud was just able to handle all their load.

Philip Winston 01:05:08 I’ll put links for the courses and other resources. How about what’s next for Temporal and Temporal cloud? I think we’re looking at quarter one of 2024 or into 2024.

Maxim Fateev 01:05:20 So a few big things are going on. One is that we are productizing this multi-region support I mentioned. We didn’t have it working in the cloud. So it’ll be available beginning next year. We call it global namespace, so a namespace which is distributed across multiple regions. So it will allow you to have very high availability for your workloads. The other thing: we still don’t have the ability to sign up for our cloud just through a signup flow by just entering your credit card information. You still need to fill out the form and we’ll send you an email, and it’s a little bit more complicated than necessary. So beginning next year we will create a fully self-serve pipeline for signing up accounts. That is another big thing. And then the other important project we call Nexus. It’s a kind of new way to think about Temporal, because Temporal is not just workflow.

Maxim Fateev 01:06:04 Temporal is a service mesh for long-running operations, because when you invoke an activity, you technically call a function in a blocking way, right? Depending on your language, maybe it’s an async block, but maybe it’s a real block in Java. And this function runs on a remote machine. So you technically call an RPC, and the RPC can take five days. It’s a very powerful paradigm. It makes your life as a developer much simpler than trying to model that as a bunch of async events. And in Temporal, we want to make it a first-class citizen, so you practically will be able to define APIs, and every API call would be able to run as long as necessary. So we have this notion of arbitrary-length operations, operations which can take any amount of time, and that will allow you to practically use Temporal as a service mesh to link all the services in your enterprise together and not rely on queues or events, just practically doing these long-running RPCs. We call it Project Nexus. So I think the first version of that should come out at the beginning of next year.