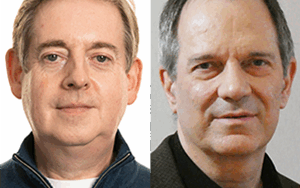

Michael Ashburne and Maxwell Huffman of Aspiritech discuss quality assurance with host Jeremy Jung. This episode examines the value of exploratory testing; finding repeatable test cases; introducing QA to a new product; when to drop old tests; improving usability and documentation; the complexity of Bluetooth; benefits of testing outside a development team; accessibility; small vs. large companies; and automated vs. manual testing.

This episode is sponsored by LogDNA.

Show Notes

Related Links

⦁ Aspiritech

⦁ Episode 386: Spencer Dixon on Building Low Latency Applications with WebRTC

⦁ Section508 Test for Accessibility

⦁ ANDI Accessibility Testing Tool

⦁ Windows Hardware Compatibility Program

⦁ Audio over Bluetooth

Transcript

Transcript brought to you by IEEE Software

This transcript was automatically generated. To suggest improvements in the text, please contact [email protected].

Jeremy Jung 00:00:22 This is Jeremy Jung for Software Engineering Radio. Today I’m joined by Maxwell Huffman and Michael Ashburne. They’re both QA managers at Aspiritech. I’m going to start with defining quality assurance. Could one of you start by explaining what it is?

Michael Ashburne 00:00:38 When I first joined Aspiritech, I was kind of curious about that as well. One of the main things that we do at Aspiritech besides quality assurance is give meaningful employment to individuals on the autism spectrum. I myself am on the autism spectrum, and that’s what initially attracted me to the company. Quality assurance, in a nutshell, is making sure that products and software are not defective, that they function the way they were intended to function.

Jeremy Jung 00:01:09 How would somebody know when they’ve met that goal?

Maxwell Huffman 00:01:12 It all depends on the client’s objectives. I guess quality assurance testing is always about trying to mitigate risk. There’s only so much testing that is realistic to do. You could test forever and never release your product, and that’s not good for business. It’s really about balancing: how likely is it that the customer is going to encounter the problem, how much time and energy would be required to fix it, what the impact is on overall company reputation. There are all sorts of different metrics. Every customer’s unique; really, they get to set the pace.

Michael Ashburne 00:01:51 Does the product work well? Is the user experience frustrating or not? That’s always a bar that I look for. One of the main things that we review in the different defects that we find is customer impact: how much is this going to frustrate the customers? And when we’re going through that analysis of whether a fix is cost-effective or not, the client will determine whether it’s worth the cost of the quality assurance and of the software fix to make sure that the customer experience is smooth.

Jeremy Jung 00:02:26 When you talk to software developers now, a lot of them are familiar with the idea that they need to test their code, right? They have things like unit tests and integration tests that they’re running regularly. Where does quality assurance fit in with that? Is that considered a part of quality assurance, or is quality assurance something different?

Maxwell Huffman 00:02:45 We try to partner with our clients, because the goal is the same: a quality product that’s as free of defects as possible. We have multiple clients, typically ones we’ve worked with for a long time and have established a rhythm with, who will let us know when they’ve got a new product in the pipeline. As soon as they have software requirements, documentation, specs, user guides, that kind of thing available, they’ll provide it to us so we can plan: okay, what are these new features? What defects have been repaired since the last build? It all depends on what the actual product is. And we start preparing tests even before there may be a version of the software to test. Now, that’s more of what they call a waterfall approach, where it’s kind of a back and forth.

Maxwell Huffman 00:03:38 The client preps the software, we test the software. If there’s something amiss, the client makes changes, then they give us a new build. But we also work in iterative design, or agile as the popular term goes, where we have embedded testers who interact with client developers on a daily basis to verify certain parts of the code as it’s being developed. Because of course the problem with waterfall is that you find a defect and it could be deep in the code, or in some sort of linchpin aspect of the code, and then there’s a lot of work to be done to fix it. Whereas embedded testers can identify a defect, or even just a friction point, as early as possible, so the developers don’t have to tear it all down and start over; they can just fix it while they’re working on that part.

Jeremy Jung 00:04:38 So I think there are two things you touched on there. One is the ability to bring QA in early in the process. If I understand correctly, what you were describing is that even if you don’t have a complete product yet, if you just have an idea of what you want to build, you start to generate test cases. It almost feels like you would be a part of generating the requirements: what are the things that need to be built into the software, before the team that’s building it necessarily knows itself?

Michael Ashburne 00:05:14 I’ve been on projects where we’ve worked with the product from cradle to grave. A lot of them haven’t gotten all the way to the grave yet, but some of them have reached that milestone in their life cycle where the client is no longer going to address defects in the same way. They still want to know that the defects are there, they want to know what exists, but now there are new products being created, right? So we are engaged in embedded testing, which is actively testing certain facets of the code and making sure that it’s doing what it needs to do, so that the patch can go out to market. And we’re also doing that in earlier development, before there’s even a fully formed design concept, offering suggestions and pointing out when something doesn’t follow the design strategy and the concept design. So that kind of embedded testing or unit testing can be involved at earlier stages as well.

Maxwell Huffman 00:06:26 Of course, you have to be very careful with those. We wouldn’t necessarily make blanket recommendations to a new client. A lot of our clients have been with us for several years, and so you develop a rhythm and a common vocabulary, and you learn which goals generally weigh more than others, from client to client or even coder to coder. It’s only once we’ve really developed that shared language that we would say, “By the way, such-and-such is missing from blankety-blank.” Great example with a bunch of non-words in it, but I think you get the picture.

Jeremy Jung 00:07:07 Say you’re starting to work on a project and you don’t know a whole lot about it, right? You’re trying to understand how this product is supposed to work. What does that process look like? What should a company be providing to you? What are the sorts of meetings or conversations you’re having?

Maxwell Huffman 00:07:27 We’ll have an initial meeting with a handful of people from both sides and talk about both what we can bring to the project and what their objectives are: the thing that they want us to test, if you will. If we reach an agreement to move forward, then the next step would be a product demo. We would come together and start to fold in leads and some other analysts, people who might be a good match for the project. We always ask our clients, and they’re usually pretty accommodating, if we can record the meeting. Now everyone’s meeting virtually on Google Meet and so forth, so that makes it a little easier. And among our analysts, everyone has their own learning style, right? Some people are more auditory, some people are more visual.

Maxwell Huffman 00:08:16 So we preserve the client’s own demonstration of what the product is going to be like, or is like, or what’s wrong with it, or whatever they want us to know about it. Then we can add that file to our secure project folder, and for anybody who’s onboarded down the road, that’s an asynchronous resource they can turn to, right? A person doesn’t have to re-demonstrate the software to onboard them. Sometimes, though, by the time we’re onboarding new people, the software has changed enough that we have to set those recordings aside and do an in-person kind of deal.

Michael Ashburne 00:08:48 We want to consider that individuals on the spectrum, the different analysts and testers, do have different styles. We want to ask for as many different resources as are available, to accommodate for that, but also so that we’re best enabled to be the subject matter experts on the product. What we’ve found is that a lot of what we’re involved in is writing and rewriting test cases to humanize the software, to really get at what you are asking this software to do, which in turn is what the product is doing. A lot of the testing we do is black box testing, and we want to understand what the original design concept is. So that involves the user interface design document, right? The early stages of that, if available, or just that dialogue that Maxwell was referring to, to get that common language: what do you want this product to do? What are you really asking this code to do? Having recordings or any sort of training material is absolutely essential to being the subject matter experts and then developing the kind of testing that’s required for that.

Maxwell Huffman 00:10:03 Yeah. And different clients have different amounts of testing material, so to speak. It ranges from a company that has its own internal test tracking software, where they just have to give us access and the test cases are already there, to a checklist on a physical piece of paper that they copied into Excel: these are the things that we look at. Of course there’s always a lot more to it than that, but that at least gives us a starting point to build off of: testing areas and sections, thematically related features, things like that. And then we can develop our own tests on their behalf.

Jeremy Jung 00:10:44 When you’re building out your own tests, what would be the level of detail there? Would it be a high-level thing that you want to accomplish in the software, or absolute step-by-step instructions?

Maxwell Huffman 00:10:57 I hate to make every answer conditional, right? But it depends on the software itself and what the client’s goals are. One of our clients is developing a new screen sharing app that lets developers both work on the same code at the same time, taking turns typing, controlling the mouse, that sort of thing. Although this product has been on the market for a while, we started out with one of those checklists and now have hundreds of test cases, based both on features they’ve added and on weird things that we’ve found. Sometimes you have to write a test case that tests for the negative, like the absence of a problem, right? So we make sure X connects to Y and the video doesn’t drop, or that you can answer the connection before the first ring is done and it still connects successfully, or any host of other options. Our test cases for that project have a lot of screencaps, because a picture is worth a thousand words, as the cliché goes. But we also try to describe the features, not just present the picture with an arrow, like “click here and see what happens.” Again, everyone has different data processing styles, and some would prefer to read step-by-step instructions rather than try to interpret some colors in a picture. What does that even mean out of context?

Michael Ashburne 00:12:19 Lots of times you’ll see test cases that seem like they could be very easily automated, because literally they’re written all in code, and the client will occasionally ask us to do a test cycle scrub, or ask us what can be automated within this, right? But one of the key things that we really look at is humanizing that test case a little more, away from just basic automation. Lots of times that literally involves asking: what are you trying to get out of this test case? Because it’s fallen so far into the weeds that you can no longer tell what you’re really asking it to do. So lots of times we will help them automate tests, but also give them the proper test environment and the proper steps. You’d really be amazed how many test cases just do not have the proper steps to get an actual expected result. And if it’s written wrong at that basic manual level, automating it isn’t adding value. So that’s one thing that we’ve really found adds value for the clients and their tests.

Maxwell Huffman 00:13:29 A lot of people ask about automation because it’s a very sexy term right now, and it certainly has its place, right? But you can’t automate new feature testing. It has to be an aspect of the product that’s mature, not changing from build to build. You also have to have test cases that are mature, where every little T is crossed and I is dotted, virtually or otherwise, or else you end up having to do manual testing anyway, because the computer just goes, “Oops, didn’t work.” That’s really all the automated process can tell you: either it passes or it doesn’t. So then we have to come in, and we have clients where we do plenty of that: okay, they ran through the tests and these three failed, figure out why. Then our analysts go in and start digging around, and, oh, it turns out this is missing, or this got moved in the latest update, or something like that.

Jeremy Jung 00:14:21 That’s an interesting perspective on testing in general. It sounds like when a feature is new, when you’re making a lot of changes to how the software works, that’s when manual testing can be really valuable, because as a person you have a sense of what you want. If things move around or don’t work exactly the way you expect them to, you still know what the end goal is, and you can say, yes, this worked, or no, this didn’t. And then once that’s solidified, that’s when, as you said, it’s easier to shift into automated testing: for example, having an application spin up a browser and click through things, or trigger things through code.

Maxwell Huffman 00:15:07 And you have to get the timing just right, because the computer can only wait in so many increments. If it tries to click the next thing too soon, before the page has finished loading, then it’s all over. But that discernment you were referring to, using your judgment when executing a test, is where we really do our best work. We have some analysts who specialize in exploratory testing, which is where you’re just looking around, systematically or otherwise. I personally have never been able to do that very well, but it’s critical, because exploratory tests are always where you turn up the weirdest combinations of things. “Oh, I happen to have this old pair of headphones on, and when I switched from Bluetooth to a wired plug, it disconnected the conference call altogether.” Who does that, right? There are all sorts of different combinations, and who knows what the end user is going to bring? They’re not necessarily going to buy all new gear when they get the new computer or the new software or whatever.
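
Maxwell’s point about timing is a classic failure mode of automated UI tests: a fixed delay is either too short (the click lands before the page has loaded) or wastes time on every run. A common remedy is to poll for a readiness condition with a timeout. The Python sketch below is illustrative only; `wait_until` and the simulated `page_loaded` check are hypothetical names, not part of any tool mentioned in the episode. Browser-automation libraries such as Selenium provide their own equivalent (`WebDriverWait`).

```python
import time
from typing import Callable


def wait_until(condition: Callable[[], bool],
               timeout: float = 10.0,
               interval: float = 0.1) -> bool:
    """Poll `condition` every `interval` seconds until it returns True.

    Returns True as soon as the condition holds, or False if `timeout`
    seconds pass first. The caller decides whether a timeout is a failure.
    """
    deadline = time.monotonic() + timeout
    while True:
        if condition():
            return True
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval)


if __name__ == "__main__":
    # Hypothetical stand-in for "the page has finished loading":
    # it becomes true 0.3 s after the test starts.
    start = time.monotonic()

    def page_loaded() -> bool:
        return time.monotonic() - start >= 0.3

    if wait_until(page_loaded, timeout=2.0):
        print("ready: safe to click the next element")
    else:
        print("timed out: report as a failure for an analyst to investigate")
```

Returning a boolean rather than raising keeps the helper usable both for hard failures and for “did this eventually happen?” checks; either way, a timeout still ends up in front of a person for the kind of triage Maxwell describes.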

Jeremy Jung 00:16:11 I feel like there’s been a trend in software companies where they used to commonly have in-house testing or in-house QA, separate from development. Now you’re seeing more and more people on the engineering staff, on the development staff, being responsible for testing their own software, whether through unit tests, integration tests, or even just using the software themselves. You’re getting to the point where more and more of the people involved are engineers who may have some expertise or knowledge in testing, and fewer are people specifically dedicated to testing. So I wonder, from your perspective as a QA firm, or as testers in general, what the role of testers in software development is going forward.

Michael Ashburne 00:17:02 Having specialized individuals who are constantly testing and analyzing the components, and making sure that you’re on track to bring that concept design to life, really is essential. And that’s what you get with quality assurance. It’s like a whole other wing of your company that is making sure that everything you are doing with this product and this software is within scope, and that there isn’t anything you could be doing better. That’s the other aspect of it, right? Because lots of times when we’ve broken a component or found a flaw in the design, we look at what that means for the bigger picture of the overall product. We try to figure out: is it worth it to fix this part of the functionality? Is it cost-effective, right?

Michael Ashburne 00:18:01 So lots of times quality assurance comes right down to the cost-effectiveness of the different patches, and lots of times it’s even the safety of the product itself. It all depends on what exactly you’re designing, but I can give you an example of a product that we were working with in the past where we were able to get a component to overheat. Obviously that is a critical defect that needs to be addressed and fixed. That’s something that can be found while you’re just designing the product, but a specialized division that’s focused on quality assurance is more likely, more inclined, to find those sorts of defects; that is their directive. And I’ll tell you, the defect that we found that overheated this product was definitely an exploratory find.

Michael Ashburne 00:18:55 It was actually caught off a test case that was originally automated. So we definitely were engaged in a lot of the aspects of the engineering departments with this product. But in the end, it was exploratory testing; it was out of scope of what they had automated, and then I ended up finding this. That’s where I really see quality assurance, and this field within software engineering, gaining respect and momentum: with the understanding that, hey, these are really intelligent people, potentially software engineers themselves, whose key focus is testing our product and making sure that its design is within scope.

Maxwell Huffman 00:19:40 It’s helpful to have a fresh set of eyes. For the person who’s been working on a product day in, day out for months on end, there will inevitably be aspects that become second nature, which may allow them to effectively skip steps when they’re doing their own testing of some end result. But you bring in a group of analysts who know testing, but don’t know your product other than generally what it’s supposed to do, and you let them have at it, and you find all sorts of interesting things that way.

Jeremy Jung 00:20:16 Yeah, you brought up two interesting points. One of them is the fact that nowadays there is such a big focus on automated testing as a part of a continuous integration process, right? Somebody will write code, they’ll check in their code, it’ll build, and then automated tests will confirm that it’s still working. But the tests that the developers wrote are never going to find things that no test was ever written for, right? So I think that whole exploratory testing aspect is interesting. And Maxwell also brought up a good point: it sounds like QA can not only help find what defects or issues exist, but also help grade how much of an issue those defects are, so that the developers can prioritize which ones are a really big deal that they need to fix, versus things that are, yeah, a little broken, but not such a big deal in a broader sense.

Michael Ashburne 00:21:17 There are certain whole areas of design right now. Bluetooth is a really big area that we’ve been working in. I’m the QA manager for the Bose client at Aspiritech, and Bluetooth is really a big thing that’s involved in all of their different speakers. So obviously if we find anything wrong with a certain area, we want them to consider which areas they might want to focus more manual testing and less automation on, right? And we’re always thinking feature-specifically in that sense, to help the clients out as well. With analysts who are on the spectrum, it’s fascinating how they tend to be very particular about certain defects. They can really find things that are very exploratory, but they don’t miss the forest for the trees, in the sense that they still keep the larger concept design in mind, funnily enough. They can let you know: is Bluetooth really the factor that should be fixed here, or is it something else? It leads to different, interesting avenues.

Maxwell Huffman 00:22:32 Yeah. Bluetooth is really a bag of nuts in a lot of ways: the different versions, different hardware vendors. We work with Zebra Technologies, and they make barcode printers and scanners and so forth. Many of their printers are Bluetooth-enabled, but the question is, is it Bluetooth 3, or is it 2 for backward compatibility? And a certain rather ubiquitous computer operating system is notorious for having trouble with Bluetooth management, whether it’s headphones or printers or whatever. In that instance, we’re not testing the computer’s OS, we’re testing the driver for the printer, right? So part of the protocol we wound up having to build into the test cases is: first, go in and deactivate the computer’s own internal Bluetooth hardware, then connect this third-party USB dongle, install the software, make sure it’s communicating, then try to connect to your printer. For a long time, an analyst would run into some kind of issue, and the first question was always: are you using the computer’s Bluetooth or the third-party Bluetooth? Which one is discoverable? Is it Bluetooth Low Energy? Because you don’t want to print using Bluetooth Low Energy; it’ll take forever, right? And then the customer thinks, oh, this isn’t working, it’s broken, not even knowing that there are multiple kinds of Bluetooth. It’s hairy, for sure.

Jeremy Jung 00:23:59 Yeah. And I guess as a part of that process, you’re finding out that there isn’t necessarily a problem in the customer’s software, but some external cause, so that when you get a support ticket or a call, you know, okay, this is another thing we can ask them to check.

Michael Ashburne 00:24:18 Yeah, absolutely. That’s something we’ve definitely been leveraged for as well: helping to resolve customer issues that come in, and trying to set up a testing environment that matches the customer’s. We’ve occasionally integrated that into our manual testing and some automated scenarios as well. Those have been interesting scenarios, having to buy different routers and what have you. And once again, it gets back to cost-effectiveness: what is the market impact? Yes, this particular AT&T router, or what have you, might be having an issue, but how many users in the world are really running the software on it, right? That’s something that every company should consider when they’re considering a patch to the software.

Jeremy Jung 00:25:09 Something else you brought up: as a software developer, when there is a problem, one of the things we always look for is a reproducible case, right? What are the steps you need to take to make this bug or problem occur? And it sounds like we might get a report from a customer saying this part of the software doesn’t work, but I’m not sure when that happens or how to get it to happen. So as a QA analyst, one of your roles might be taking those reports and building a repeatable test case.

Maxwell Huffman 00:25:46 Absolutely. There are lots of times when clients have said, we haven’t been able to reproduce this, see if you can. We get back to them after some increment of time, and sometimes we can, and sometimes we can’t. Sometimes we have to buy special headphones or something to try to reproduce the environment that the client was using, in case there was some magic-sauce interaction going on there.

Michael Ashburne 00:26:12 Our analysts on the spectrum are so particular in writing up defects, all the little details. And that is so important in quality assurance: documentation for the entire process. That’s one area where I think quality assurance really helps development in general, making sure that everything is documented, that it’s all there on paper, and that the documentation is solid and really sound. For a lot of these defects, I think we’ve come in and upped the standard a little bit. You can’t have a defect written up where the reproducibility is one out of one, and then it turns out this was a special build that the developer was using that no one else in the company was even using. Then it’s a waste of time to track the defect down, and that’s because it was a poorly written report in the first place. So it can be fun to track down all the various equipment you need for it, and our analysts are really well-suited for writing those up and investigating the different defects or errors that we find. Sometimes they’re not actually defects; they’re just errors in the system.
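
Michael’s “one out of one” example hints at what a well-written defect report records: the exact build, the environment, the steps, the expected and actual results, and how many attempts out of how many reproduced the problem. The Python sketch below models such a record; the field names and the completeness rules in `missing_fields` are hypothetical illustrations, not Aspiritech’s or any client’s actual template.

```python
from dataclasses import dataclass, field


# Hypothetical defect-report template; field names are illustrative assumptions.
@dataclass
class DefectReport:
    title: str
    build: str = ""            # exact build or firmware version tested
    environment: str = ""      # hardware, OS, accessories, network, etc.
    steps: list[str] = field(default_factory=list)
    expected: str = ""
    actual: str = ""
    reproduced: int = 0        # runs in which the defect appeared
    attempts: int = 0          # total runs of the steps

    def repro_rate(self) -> str:
        """Reproducibility in the 'x out of y' form testers quote."""
        return f"{self.reproduced}/{self.attempts}"

    def missing_fields(self) -> list[str]:
        """Gaps a reviewer might flag before triage (illustrative rules only)."""
        missing = []
        if not self.build:
            missing.append("build")
        if not self.environment:
            missing.append("environment")
        if not self.steps:
            missing.append("steps")
        if not (self.expected and self.actual):
            missing.append("expected/actual result")
        if self.attempts < 2:
            missing.append("repeat attempts")
        return missing


if __name__ == "__main__":
    # A "one out of one" report on an unnamed build: not yet actionable.
    vague = DefectReport(title="Call drops", reproduced=1, attempts=1)
    print(vague.repro_rate(), vague.missing_fields())

    # A hypothetical, fully specified report of an intermittent defect.
    solid = DefectReport(
        title="Conference call drops when switching from Bluetooth to wired headphones",
        build="example-build-1.2.3",
        environment="Laptop with older Bluetooth headphones",
        steps=["Join a call on Bluetooth headphones", "Plug in wired headphones"],
        expected="Audio moves to the wired headphones; call continues",
        actual="Call disconnects",
        reproduced=1,
        attempts=30,
    )
    print(solid.repro_rate(), solid.missing_fields())
```

Recording attempts alongside successes keeps an intermittent “1/30” defect distinguishable from a one-off on a developer-only build.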

Maxwell Huffman 00:27:23 Tell them about the guide we had made internally that Bose wound up using.

Michael Ashburne 00:27:29 There are so many guides that we’ve ended up creating, like terminal access shortcuts and different ways to access the system from a tester’s perspective, that have absolutely helped, just by documenting all these things that engineers end up using to test code, right? Lots of times these shortcuts aren’t well documented anywhere. So what quality assurance, and what Aspiritech, has done is come in and create excellent training guides for how to check the code, what all the various commands are that have to be entered, and how that translates to the more obvious user experience, which I think is a lot of times what ends up being lost. It all ends up being code language, and you don’t really know what the user experience is. It’s been nice to find that the guides we’ve created are valued when we show them to the client, because really we created them to make life easier for ourselves.

Michael Ashburne 00:28:37 Right. And to make the testing easier for us and make it more translatable. When you see all this different code, some of us are very well versed in it, but other analysts might not be as well versed in the code or that aspect of it, right? But once you humanize it and say, okay, this is what you’re asking the code to do, then you have that other perspective: I can actually think of a better way we could potentially do this. So we brought a lot of those guides to the clients, and they’ve really been blown away at how well documented all of that was, all the way down to the level of all of the systems. We have very good inventory tracking, and we’re even able to test and run internal components of the system.

Michael Ashburne 00:29:29 And that’s why I bring up the . A portion of the testing that we end up doing is the sort of tests that installers would be running, the sort of functionality that only installers of systems would be running. So it’s still black box testing, but it’s behind the scenes of the normal user experience, right? It’s the installer experience, for lack of a better word. Even having that well documented, and finding errors in those processes, has been quite beneficial. I remember one scenario in which there was an emergency update method that we had to test, right? This was a type of method where, if someone had to run it, they would take the device into the store and a technician would run it. So basically we were running software quality assurance on a technician’s test for a system.

Michael Ashburne 00:30:28 And on the way a technician would update the system. What we found is that what they were asking the technician to do was a flawed and complex series of steps. It did work, but only one out of 30 times, and only if you did everything with very particular timing, right? It just was not user-friendly for the technician. So that’s the kind of thing that we ended up finding. And lots of times, finding a defect like that requires creating a guide, because they don’t have guides for technicians.

Maxwell Huffman 00:31:04 And the poor technician is dealing with hundreds of different devices, whatever the field is, whether it’s phones or speakers or printers or computers. The technician isn’t working with the same software day in and day out the way we sometimes have to. Again, the developer is building the tool, and we’re dealing with the stuff it does. So in a lot of ways we can bring our own level of expertise to a product that can even surpass the developer’s. It’s not a contest, right? But think about how many times a developer installs it for the first time. Like Maxwell was saying, when we do out-of-box testing, we have to reset everything and install it fresh over and over again. So we wind up being exposed to this particular series of steps that the end user might only see a couple of times. But who wants their brand-new shiny thing, especially if it costs hundreds of dollars, to have a lot of friction points in the process of finally using it? You just want it to work as effectively as possible.

Speaker 0 00:32:28 Our friends at LogDNA are super tuned into the DevOps space. They’ve taken feedback from dev, ops, and security teams to create a log management platform that gives users access to the information they need, where they need it. Check them out. They’re offering a fully featured 14-day free trial to SE Radio listeners. Visit now dot log dna.com/se to start yours today and see what all the hype is about.

Jeremy Jung 00:32:58 Have I understood correctly? In Maxwell’s example, you had a physical product, let’s say a pair of headphones or something like that, and you need to upgrade the firmware or perform some kind of reset. That’s something a technician would normally have to go through, but as QA, you go in, do the same process, and realize this process is really difficult, and it’s really easy to make a mistake or just not do it properly at all. So you can propose ways to improve those steps, or just show the developers: hey, look, I have to do all these things just to update my firmware. You might want to consider making that a little easier on your customers.

Michael Ashburne 00:33:45 Absolutely. And the other nice thing about it, Jeremy, is that we don’t look at a series of tests like that as lower-level functionality just because it’s more for a technician to run. It’s actually part of the update testing, so it’s actually very intricate as far as the design of the product. If you find a defect in how the system updates, it’s usually going to be a critical defect. We don’t want the product to ever end up being a boat anchor or a doorstop, right? That’s what we’re always trying to avoid. And in that scenario, we don’t exactly close the book on it once we figure out, okay, this was a difficult scenario for the technician, and we resolve it for the technician. We look at the bigger scope: how does this affect the update process in general? Does this affect the customer? Does it affect the suite of test cases that we have for those update processes? It can extend to that as well. And then we look at it in terms of automation too, to see if there are any areas where we need to fix the automation tests.

Maxwell Huffman 00:34:56 It can be as simple as power loss during the update at exactly the wrong time. The system will recover if it happens within the first 50 seconds or the last 30 seconds, but there’s this middle part where it’s trying to reboot in the process of updating its own firmware, and if the power happens to go out then, you’re out of luck. That does not make for a good reputation for the client. The first thing an unhappy customer is going to do is tell everybody else about the horrible experience they had.

Michael Ashburne 00:35:31 Right. And I can think of a great example, Michael. We had found an ad hoc defect. They had asked us to look in this particular area because there were very rare customer complaints of update issues, but they could not find the problem with their automation. We had one analyst who, amazingly enough, was able to pull the power at exactly the right time, in exactly the right sequence. We reported the ticket and were able to capture the logs for the incident. They must have run this through 200,000 automated tests, and they could not replicate what this human could do with his hands. It really amazed them after we found it, because they really had run it through that many automation tests. But it does happen, where you find those
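The power-loss stories above hint at why a fixed automation schedule can miss a failure that a person finds by hand. As a minimal sketch, assuming a hypothetical update model in which power loss is only fatal inside one short window (the durations and window boundaries below are invented for illustration, not Bose’s real timings, and the sequence dependence from the story is not modeled), sweeping the power-cut time at a coarse step can skip the window entirely while a finer step catches it:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UpdateModel:
    """Toy firmware-update model; every timing here is hypothetical (milliseconds)."""
    duration_ms: int      # total length of the update
    unsafe_start_ms: int  # from here, losing power may leave the device unbootable...
    unsafe_end_ms: int    # ...until here, when the new image is assumed to be committed

    def survives_power_cut(self, t_ms: int) -> bool:
        # Outside the unsafe window, the model assumes the device recovers on next boot.
        return not (self.unsafe_start_ms <= t_ms < self.unsafe_end_ms)


def sweep_power_cuts(model: UpdateModel, step_ms: int) -> list[int]:
    """Cut power at every multiple of step_ms and return the times that brick the device."""
    return [
        t for t in range(0, model.duration_ms + 1, step_ms)
        if not model.survives_power_cut(t)
    ]


if __name__ == "__main__":
    # Hypothetical 200 s update whose only fatal window is 300 ms wide.
    model = UpdateModel(duration_ms=200_000, unsafe_start_ms=117_100, unsafe_end_ms=117_400)
    print(len(sweep_power_cuts(model, step_ms=1_000)))  # one cut per second: 0 failures
    print(len(sweep_power_cuts(model, step_ms=50)))     # one cut every 50 ms: 6 failures
```

In this toy model, a sweep only finds windows wider than its step, so repeating the same coarse schedule many more times adds no coverage; varying the step or adding random jitter to the cut times is one way to widen the net.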

Jeremy Jung 00:36:19 We’ve been talking about Bose in this case, which is a very large company. And I think that when the average developer thinks about quality assurance, they usually think about it in the context of: I have a big enterprise company, I have a large staff, I have money to pay for a whole bunch of analysts, things like that. I want to go back to where, Michael, you had mentioned that one of your customers was a screen-sharing application. We had an interview with Spencer Dixon, who’s the CTO at Tuple. I believe that’s the product you’re referring to. So I wonder if you could walk us through it for a business that’s, I want to say, maybe four or five people. Given that you’re coming in with no knowledge of their software, what’s the process like for them bringing on dedicated analysts or testers?

Maxwell Huffman 00:37:20 First of all, not to kiss up, but the guys at Tuple are a really great bunch of guys. They’re very easy to work with. We have an hourly cap per month, to try not to exceed a certain number of hours, and that agreement helps to manage their costs. They’re very forthcoming, and they really have folded us into their development process. They’ve given us access to their trouble-ticket software, and we use their internal instant messaging application to double-check on expected results: is this a new feature, or is this something that’s changed unintentionally? When we first started working with them, there was really only one person on the project, and this person was, in essence, tasked with turning the Excel checklist of features into suites of test cases. You start with “make sure X happens when you click Y,” and then you make that the title of a test case.

Maxwell Huffman 00:38:19 Once you get all the easy stuff done, you go through the steps of making it happen. They offered us a number of very helpful starter videos from their website on how to use the software. By no means are they comprehensive, but they were enough to get us comfortable with the basic functionality, and then you just wind up playing with the software. They were very open to giving us the ramp-up time we needed to check all the different boxes, both the ones on their list and the new ones we found, because there’s more than one connection type, right? There can be just a voice call, or there can be screen sharing, and you can show your local video from your computer camera so you can see each other in a small box. And in what order do you turn those things on?

Maxwell Huffman 00:39:08 And which one has to work before the next one can work? Or what if a person changes their preferences in the midst of a call? Fortunately, Tuple’s audience is a bunch of developers, so when their customers report a problem, the report is extremely thorough, because they know what they’re talking about, and the reproduction steps are pretty good. But sometimes we’ll still run into a situation they shared with us where they’re like, we can’t make this happen. Getting back to the Bluetooth, they’ve even had customers where, I guess, one headset used a different frequency than another one, even though they were on the same Bluetooth version. And the customer, whoever they are, changed from one headset to another.

Maxwell Huffman 00:39:56 And the whole thing fell apart. How do you even know? You don’t go to the store, look on the package, and see, oh, this particular headphone uses 48 kilohertz. At the outset, I didn’t even know that that was a thing that could be a problem, right? You figure Bluetooth has its band of the telecom spectrum, but anything’s possible. So they gave us time to ramp up, because they knew they didn’t have any test cases. Now there’s a dedicated team of three people on the project regularly, but it can expand to as many as six, because it’s a screen-sharing application, right? So you tend to need multiple computers involved. We’ve really enjoyed our relationship with Tuple, and we’re eagerly awaiting a Windows version, if there’s going to be one. There are so many times when we’ll be working on another project, talking with someone, and saying, oh, I wish we could use Tuple, because then I could click the thing on your screen and you could see it happen instead of just... They are working on a Linux version, though. I don’t think that’s a trade secret. So that’s in the pipeline, and we’re excited about that. And these guys pay their bills in like two days. No customers do that. They’re really something. I mean,

Jeremy Jung 00:41:11 I think that’s sort of a unique case, because it is a screen-sharing application. You have things like headsets and webcams, and you’re making calls between machines. So I guess if you’re testing, you would have all these different laptops or workstations set up, all talking to one another. So yeah, I can imagine why it would be really valuable to have people or a team dedicated to that.

Maxwell Huffman 00:41:36 And external webcams. My Mac mini is a 2012, so it doesn’t have the three-band audio port, right? It’s got one port for a microphone and one for headphones. That in itself makes you wonder how many of their customers are really going to have an older machine, but it wound up being an interesting challenge, because if I was doing testing, I had to have a microphone distinct from the headphones. And that brings in a whole other nest of interactivity that you’d have to account for. Maybe the microphone’s USB-based. There’s all sorts of craziness.

Jeremy Jung 00:42:14 I’m wondering if you have projects where you not only have to use the client, but you also have to set up the server infrastructure so that you can run tests internally. If you do that with clients, what’s your process for learning how to set up a test environment and how to make it behave like the real thing?

Michael Ashburne 00:42:38 The production and testing equipment is what the customers have, right? To create that setup, we basically just need the equipment and the user guides from them, and frankly, the less information the better in those setups, because you want to mimic the customer’s scenario. You don’t want to mimic too pristine a setup, and that’s something we’re always careful about when we’re doing that sort of setup. As far as integration and a sandbox test bed, where you’re testing a new build that’s going out for regressions or what have you, we’d be connected to a different server environment.

Maxwell Huffman 00:43:20 And with Zebra Technologies, their Zebra Designer printer driver supports Windows 7, Windows 8.1, Windows 10, and Windows Server 2012, 2016, and 2019. For the non-server versions, that’s both 32-bit and 64-bit, because apparently Windows 10 32-bit is more common in Europe than it is here. And even though Windows 7 has been deprecated by Microsoft, they’ve still got a customer base still running it. Don’t fix what ain’t broke, right? Why would you update a machine if it’s doing exactly what you want in your store or business or whatever it is? So we make a point of executing tests in all 10 environments. It can be tedious, because Windows 7 32-bit and 64-bit have their own quirks, so we always have to test those. Windows 8 and Windows 10 are fairly similar, but they keep updating Windows 10.

Maxwell Huffman 00:44:21 And so it keeps changing. And then it’s time for their printer driver to go through Windows logo testing, as they call it, through Microsoft’s Windows Hardware Quality Labs, which in essence means that when you run a software update on your computer and there’s a new version of the driver, it’ll download it from Microsoft’s servers. You don’t have to go to the manufacturer’s website and specifically seek it out. So we actually do certification testing for Zebra with that driver in all of those same environments and then submit the final package for Microsoft’s approval. That’s actually been a job of ours for several years now, and that’s not something you take lightly when you’re dealing with Microsoft. This actually circles back to writing the guides, because there are instructions that come with the Windows Hardware Lab Kit, but they obviously don’t cover everything.

Maxwell Huffman 00:45:23 We wound up creating our own internal Zebra printer driver certification guide, and it’s over a hundred pages, because we wanted to be sure to include every weird thing that happens, even if it’s only sometimes: be sure you set this before you do that, because if you do it in the wrong order, it will fail and they won’t tell you why, and all sorts of strange things. Of course, when we were nearing completion on that guide, our contact at Zebra actually wanted a copy, because we’re obviously not their only QA vendor, and they have other divisions too. For example, they have a Browser Print application that allows you to print directly to the printer from a web browser without installing a driver, and that’s a whole separate division. But overall, all these divisions have the same end goal as we do, which is reducing the friction for the customer using the product.

Jeremy Jung 00:46:21 That’s an example where you said it’s like a hundred pages. So you’ve got these test cases basically ballooning in size. More generally, for the average software project, as development continues, new features get added and the product becomes more complex. I would think the number of tests would grow, but I would also think it can’t grow indefinitely, right? There has to be a point where going through X number of tests just isn’t worth the value you’re going to get. So I wonder how you manage that as things get more complicated. How do you choose what to drop and what to continue with?

Maxwell Huffman 00:47:05 It obviously depends on the client. In the case of Zebra, to use them again, when we first started working with them, they put together the test suites and we just executed the test cases. As time went by, they began letting us put the test suites together, because we’d been working with the same test cases, and we tried to come up with systems to spread out their use instead of it always being the same set of test cases. When you execute the same tests over and over again and they don’t fail, that doesn’t mean you’ve fixed everything. Eventually, it means your tests are worthless. So a couple of summers ago, they actually had us go through all of the test cases, looking at the various results to evaluate: okay, this is a test case we’ve run 30 times, and it hasn’t failed for the last 28 times.

Maxwell Huffman 00:47:56 Is there really any value in running it at all anymore, so long as that particular functionality isn’t being updated? They update their printer driver every few months when they come out with a new line of printers, but they’re not really changing the core functionality of what any given printer can do. They’re just adding model numbers and things like that. So testing the ability of the printer to generate such-and-such barcode on a particular kind of media only gets you so far. Some printers have RFID capability and some don’t, so you get to mix it up a little bit depending on what features are present on the model. So deprecation of worn-out test cases does help to mitigate the ballooning test suite. I’m sure Bose has their own approach.
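The deprecation rule described above (a case that has run 30 times and passed the last 28, covering functionality that isn’t changing) can be sketched as a simple filter. This assumes a hypothetical record per test case holding its pass/fail history, oldest run first, plus a flag for whether the covered feature changed in the current release; the case names and the threshold of 28 are illustrative, and the output is a list of candidates to raise with the client, not cases to delete automatically:

```python
from dataclasses import dataclass


@dataclass
class CaseHistory:
    """Hypothetical per-test-case record; results are oldest run first, True = passed."""
    name: str
    results: list[bool]
    feature_changed: bool  # did the functionality this case covers change in this release?


def trailing_passes(results: list[bool]) -> int:
    """Count consecutive passing runs at the most recent end of the history."""
    count = 0
    for passed in reversed(results):
        if not passed:
            break
        count += 1
    return count


def deprecation_candidates(cases: list[CaseHistory], min_streak: int = 28) -> list[str]:
    """Names of cases with a long recent pass streak whose feature is unchanged."""
    return [
        c.name
        for c in cases
        if not c.feature_changed and trailing_passes(c.results) >= min_streak
    ]


history = [
    CaseHistory("barcode_on_media", [False, False] + [True] * 28, feature_changed=False),
    CaseHistory("rfid_encode", [False, False] + [True] * 28, feature_changed=True),
    CaseHistory("driver_install", [True] * 27 + [False, True, True], feature_changed=False),
]
print(deprecation_candidates(history))  # ['barcode_on_media']
```

Keeping the `feature_changed` check mirrors the point that a quiet test is only a safe candidate while the functionality it covers isn’t being updated.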

Michael Ashburne 00:48:48 There are certain features that might also fall off entirely, where you look at how many users are actually using a certain feature. So like Michael was saying, there might not be any failures on this particular feature, plus it’s not being used a lot, so it’s a good candidate for being automated, right? We’ll look at cases such as that, and we’ll go through test cycle scrubs. We’ve also had to do a series of update matrices, where we progressively look at how much of the market has already updated to a certain version. So if 90% of the market has already updated to this version, you no longer have to test updating from here to here. That’s another way you can slowly start to reduce test cases and coverage, but you’re always looking at that with the risk assessment in mind, right?

Michael Ashburne 00:49:44 And you’re looking at who the end users are and what the customer impact is if we pull away from or automate this set of test cases. So we go about that very carefully, but we’ve gradually become more and more involved in helping them assess which test cases are the best ones to be run manually, because those are the ones where we end up finding defects time and time again. Those are the areas where we’ve really helped. Lots of times, if clients do have a QA department, the test cases will be written more in an automation-type language, so it’s like, okay, why don’t we just automate these test cases to begin with? And they’ll be very broad in scope, where everything is written as a test case for the overall functionality. It’s just way too much, as you’re pointing out, Jeremy, and as features grow, that just continues on. It has to be whittled down in the early stages to begin with, and that’s how we help manage these cycles, to get them to a more reasonable manual testing cadence, with the automated test cases making up the larger portion of the overall coverage, as it should be in general.
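The update matrices Michael describes can be sketched as a coverage cut: walk the source versions from newest to oldest and keep them in the update test matrix until the kept versions cover the chosen share of the installed base. This assumes hypothetical version labels and install-base percentages, with the 90% threshold taken from the example above; the versions left out are exactly the residual risk that still needs the risk assessment he mentions:

```python
def update_sources_to_test(
    install_base: list[tuple[str, int]], coverage_pct: int = 90
) -> tuple[list[str], list[str]]:
    """
    install_base: (version, percent of installed devices) pairs, newest version first.
    Returns (kept, dropped): the newest source versions that together reach
    coverage_pct of the installed base, and the older versions left out of the
    update matrix for the next release.
    """
    kept: list[str] = []
    covered = 0
    for i, (version, pct) in enumerate(install_base):
        if covered >= coverage_pct:
            dropped = [v for v, _ in install_base[i:]]
            return kept, dropped
        kept.append(version)
        covered += pct
    return kept, []


# Hypothetical market snapshot: most devices are already on 4.2 or 4.1.
snapshot = [("4.2", 72), ("4.1", 20), ("4.0", 5), ("3.9", 3)]
kept, dropped = update_sources_to_test(snapshot)
print(kept, dropped)  # ['4.2', '4.1'] ['4.0', '3.9']
```

Integer percentages are used so the threshold comparison isn’t affected by floating-point rounding.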

Jeremy Jung 00:51:07 So it sounds like there’s this process of you working with the client, figuring out which test cases haven’t brought up an issue in a long time, or which things get the most or the least use from customers. You look at all this information to figure out what your manual tests should focus on, and try to push everything else, like you said, into automation.

Maxwell Huffman 00:51:36 So if over time we start to see these trends with older or simpler test cases, and we notice there’s potential, we’ll bring that to the client’s attention. We’ll say, we were looking at this batch of tests for basic features, and we’ve noticed that they’ve never failed, or haven’t failed in two years or whatever. Would you consider us dropping those, at least for the time being, to see how things go? That way we’re spending less of their time, so to speak, on the whole testing process, because, as you pointed out, the more you build a thing, the more time it takes to test it from one end to the other. At the same time, a number of our analysts are Section 508 Trusted Tester certified for accessibility testing, using screen readers and things like that.

Maxwell Huffman 00:52:30 It’s interesting how, with so many web applications, it just becomes baked into the bones, right? You’ll be having a team meeting talking about yesterday’s work, and somebody who happened to use style sheets to change the custom colors of the webpage will mention, “When I went to the such-and-such page, it really was not very accessible. There was light green, there was dark green, there was light blue, so I used my style sheets to make them red and yellow.” When you see enough of that kind of stuff, that’s an opportunity to grow our engagement with the client, right? Because we can say, by the way, we noticed these things, and we do offer this as a service.

Maxwell Huffman 00:53:13 If you wanted to fold that in, or even set it up as a one-time thing, it all depends on how much value it can bring the client. We’re not pushing sales, trying to get more whatever. It’s just that when we see an opportunity to improve the client’s product, or to help better secure their position in the market, or however it could work to their advantage, we feel like it’s our duty to mention it as their partner. We also do data analysis; we don’t just do QA testing. I know that’s the topic here, of course, but that’s another way our discerning analysts can find things. For one of our clients, we analyze their monthly call center (help desk) calls in aggregate, and our analysts will find these little spikes on a certain day, or a cluster over a week, of people calling about a particular thing. Then we can ask the client: did you push a new feature that day? Or was it rainy that day? It could be anything, and maybe the client doesn’t care, but we see it, so we say it and let them decide what to do with the information.

Jeremy Jung 00:54:38 Your comment about accessibility is really good, because it sounds like if you’re a company building up your product, you may not be aware of the accessibility issues. If you have it tested by someone who’s using a screen reader and sees those issues with contrast and so on, now the developer has specific, actionable things to do, and can potentially even move those into automated tests: okay, we need to make sure that these UI elements have this level of contrast.

Maxwell Huffman 00:55:12 And there are different screen readers, too. The government certification process to become a trusted tester uses one particular screen reader, named ANDI, an initialism, but there are others. It’s on us to become familiar with what else is out there, because not everyone is going to be using the same screen reader, just like not everyone uses the same browser.

Michael Ashburne 00:55:36 I think that clients realize, yeah, we do have a good automation department, but is it well balanced with what we’re doing manual-QA-wise? I think that’s where we often find that something is a little bit lacking, where we can provide extra value or boost what is currently there.

Maxwell Huffman 00:55:54 Our employees are quality assurance analysts. They’re not testers. They don’t just come in, read the script, and leave afterwards. We count on them to bring a critical eye and their own unique perspective when they use any given product, and to pay attention to what’s happening even if it’s not in the test case. Something might flash on the screen, or there might be a pause before the next thing you’re waiting for kicks off. If that happens enough times, you notice there’s always this lag right before the next step, and then you can check with the developer: do you guys care about this lag? Sometimes we find out that it’s unavoidable, because something under the hood has to happen before the next thing can happen.

Michael Ashburne 00:56:44 And even asking those questions, we’ve found out fascinating things, like why there’s this lag every time we run a given test. We never want to derail a client too much; we’re always very patient for the answer, and sometimes we might not get one. But I think that does help build a level of respect between us and the developers, that we really care what their code is doing. If there is a slight hiccup, we want to understand what’s causing it. It ends up being fascinating for our analysts as we learn the product, and that’s what makes us want to really learn exactly what the code is doing.

Maxwell Huffman 00:57:20 And even though I’m not a developer, when I first started at Aspiritech, I worked on Bose as well, and I really enjoyed just watching their code scroll down the screen as the machine was booting up or the speaker was updating, because you can learn all sorts of interesting things about what’s happening that you don’t normally see. There are all sorts of weird inside jokes in terms of what they called a command, or, oh, there’s that same spelling error where there’s only one T. You kind of get to know the developers in a way: oh, so-and-so wrote that line. We always wondered. We keep giving them a hard time about that, but now we can’t change it. So we have fun.

Jeremy Jung 00:58:09 Where can people learn more about what you guys are working on, or about Aspiritech?

Michael Ashburne 00:58:15 www.aspiritech.org is our website. Head there; it’ll give you all the information you need about us.

Maxwell Huffman 00:58:21 We also have a LinkedIn presence that we’ve been trying to leverage lately. Or talk to our current clients. They’ve really been our biggest cheerleaders, and the vast majority of our work has come from client referrals. As an example of that, they were referred by a client who was referred, were very problematic. It speaks volumes about the quality of our work and the relationships that we build. We have very little customer turnover in addition to very little staff turnover, and that’s because we invest in these relationships. It seems to work for both sides.

Jeremy Jung 00:59:01 Michael, Maxwell, thanks for coming on the show.

Michael Ashburne 00:59:01 Thank you so much, Jeremy. It’s great talking to you.

Maxwell Huffman 00:59:04 Thanks for having us.

Jeremy Jung 00:59:07 This has been Jeremy Jung for Software Engineering Radio. Thanks for listening.

[End of Audio]

SE Radio theme: “Broken Reality” by Kevin MacLeod (incompetech.com — Licensed under Creative Commons: By Attribution 3.0)

#SE-Radio Episode 461: It would be ideal for SQA if the Software Requirements Specification document described how each software quality characteristic or sub-characteristic (refer to the ISO/IEC 25010:2017 SQuaRE standard) will manifest in the software, so that these can be tested apart from the functional aspects. This would also aid in assigning a quality score to the software being qualified.