Venue: Internet

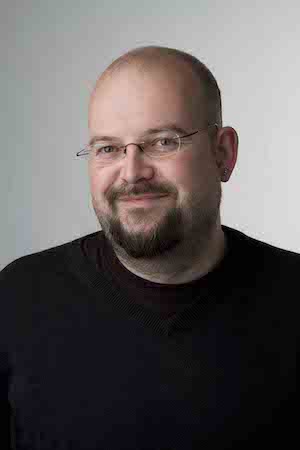

James Turnbull joins Charles Anderson to discuss Docker, an open source platform for distributed applications for developers and system administrators. Topics include Linux containers and the functions they provide, container images and how they are built, use cases for containers, and the future of containers versus virtual machines.

Show Notes

Related Links

- James’s home page: http://www.jamesturnbull.net

- James on Twitter: @kartar

- Docker Project Site: http://docker.com

- Docker Documentation: https://docs.docker.com

- The Docker Book: http://www.dockerbook.com

- The Logstash Book: http://www.logstashbook.com

- Pro Puppet: http://www.apress.com/9781430260400

- Pro Linux System Administration: http://www.apress.com/9781430219125

- Hardening Linux: http://www.apress.com/9781590594445

- CoreOS: https://coreos.com

- Fig Project: http://www.fig.sh

Transcript

Transcript brought to you by innoQ

What is Docker?

Docker is a container virtualization technology. So, it’s like a very lightweight virtual machine [VM]. In addition to building containers, we provide what we call a developer workflow, which is really about helping people build containers and applications inside containers and then share those among their teammates.

What problems does it address?

There are a couple of problems we’re looking at specifically. The first one is aimed at the fact that a VM is a fairly heavyweight compute resource. Your average VM is a copy of an operating system running on top of a hypervisor running on top of physical hardware, with your application then running on top of that. That presents some challenges for speed and performance, and some challenges in an agile sort of environment.

So, we’re aiming to solve the problem of producing a more lightweight, more agile compute resource. Docker containers launch in under a second, and there’s no hypervisor: containers sit directly on top of the operating system. So, you can pack a lot of them onto a physical or virtual machine. You get quite a lot of scalability.

For most people, the most important IT asset they own is the code they’re developing, and that code lives on a developer’s workstation or laptop or in a dev test environment. It’s not really valuable to the company until it actually gets in front of the customer. The process by which it gets in front of a customer, that workflow of dev test, staging, and deployment to production, is one of the most [tension-fraught] in IT.

The DevOps movement, for example, emerged from one of the classic stumbling blocks in a lot of organizations. Developers build code and applications and ship them to the operations people, only to discover that the code and applications don’t run in production. This is the classic “it works on my machine; it’s operations’ problem now.”

We were aiming to build a lightweight computing technology that helped people put code and applications inside that resource, have them be portable all the way through the dev test, and then be able to be instantiated in production. We made the assumption that what you build and run in dev test looks the same as what you build and run in production.

What are some typical use cases in which a developer or admin might want to use Docker?

We have two really hot use cases right now. The first one is continuous integration and continuous deployment. With Docker being so lightweight, developers can build stacks of Docker containers on their laptops that replicate some production environments—for example, a LAMP stack or a multitier application. They can build and run their application against that stack.

You can then move these containers around; they’re very portable. Let’s say you have a Jenkins continuous-integration environment. If you rely on VMs, you have to spin up a new VM, install all the software, install your application source code, run the tests, and then probably tear it all down again because you may have destroyed the VM as part of the test process.

Let’s say it would take 10 minutes to build those VMs. In the Docker world, you can build the containers that replace those VMs in a matter of seconds, which means you’ve cut roughly 10 minutes out of your build–test run. That’s an amazing cost saving. It allows you to get much more bang for your buck from the continuous-deployment and continuous-testing model.

The other area where we’re seeing a lot of interest is what we call high capacity. Traditional VMs have a hypervisor, which probably occupies about 10 to 15 percent of the capacity of a host. We have a lot of customers for whom that 10 to 15 percent is quite an expensive 10 to 15 percent. They want to say, “Okay, let’s root that out, replace it with a Docker host, and then we can run a lot more containers.” We can run hyperscale numbers of containers on a host because, without a hypervisor, they sit right on top of the operating system and are very, very fast.

IBM released some research last year that suggested that on a per-transaction basis, the average container is about 26 times faster than a VM, which is pretty amazing.

Docker is based on containers, which provide an isolated environment for user-mode code. Some of our listeners might be familiar with earlier container systems such as Solaris Zones or FreeBSD jails, or even going back to the chroot system call from Unix Version 7. How does the container have its own copy of the file system without duplicating the space between identical containers, especially when you’re talking about hyperscale?

One of the other interesting technologies Docker relies on is a concept we call copy-on-write. Many file systems, such as Btrfs, Device Mapper, and AuFS, all support this copy-on-write model, which is what the kernel developers call a union file system. Essentially, what happens is that you build layers of file systems. So, every Docker container is built on what we call an image. The Docker image is like a prebaked file system that contains a very thin layer of libraries and binaries that are required to make your application work, and perhaps your application code and maybe some supporting packages.

For example, you might have a LAMP stack that might have a container that has Apache in it, and libc, and a small number of very thin shims that fake out an operating system. That image is saved in what we call a file system layer.

If I was to then make a change to that image—for example, if I wanted to install another package—I’d say, “I want to have PHP as well.” On an Ubuntu system I’d say, “apt-get install PHP,” and Docker says, “You want to create a new thing. I’m going to create a new layer on top of our existing layer, and I’m only going to add in the things that I have changed.” So, for example, “I’m going to add in the new package, and that’s it.” That is a layered construction. I end up with a read-only file system with multiple layers. You can think about this layer a bit like a git commit or a version control commit. As a result, I end up with a very lightweight system that only has the things on it that I want.
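The layering described above can be sketched in a few lines of Python. This is only a conceptual model, not how Docker or any union file system is implemented: each layer is a plain dictionary of the files it adds, and the file names and contents are made up for illustration.

```python
from collections import ChainMap

# Each layer records only the files it adds or changes (path -> content).
base_layer = {"/lib/libc.so": "libc", "/usr/sbin/apache2": "apache"}
php_layer = {"/usr/bin/php": "php"}  # the layer created by installing PHP

# An image is a stack of read-only layers; lookups search the newest first.
image_layers = [php_layer, base_layer]

def start_container(layers):
    """Return a container view: a fresh writable layer over shared layers."""
    return ChainMap({}, *layers)

web1 = start_container(image_layers)
web2 = start_container(image_layers)

# A write lands only in web1's own top layer; the image layers are untouched.
web1["/var/www/index.php"] = "<?php echo 'hello'; ?>"

print(sorted(web1))                   # files from every layer plus the new one
print("/var/www/index.php" in web2)   # web2 does not see web1's write
print(web1.maps[1] is web2.maps[1])   # both containers share one PHP layer
```

Because both containers point at the same layer objects, starting a second container costs only one empty top layer, which is the space saving the question was asking about.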

Docker understands that I can cache things. So it says, “You’ve already installed PHP. I’m not going to make any changes to the environment; I’m not going to have to write anything. So, I’m going to just reuse that existing layer, and I’ll drag that in as the PHP layer.” For example, if you’re changing your source code, with a VM you may be rebuilding the whole VM. With Docker, you say, “Here’s the new commit of my source code. I’m going to add it to my Docker image and maybe that’s 10 Kbytes worth of code change.” Docker says, “That’s the only thing you want to change; therefore, that’s the only thing I’ll write to the file system.” As a result, it’s very lightweight, and with the cache, extraordinarily fast to rebuild.
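The caching behavior can be illustrated with a toy build loop. This is a simplified sketch under stated assumptions: a layer’s identity is taken to be a hash of its parent layer and the build step, which is not Docker’s actual cache key, and the step names and commit labels are hypothetical.

```python
import hashlib

def layer_id(parent_id, instruction):
    """Derive a layer's identity from its parent layer and the build step."""
    digest = hashlib.sha256(f"{parent_id}\n{instruction}".encode())
    return digest.hexdigest()[:12]

def build(instructions, cache):
    """Run build steps in order; return (final layer id, steps executed)."""
    parent, executed = "scratch", []
    for step in instructions:
        current = layer_id(parent, step)
        if current not in cache:
            # Cache miss: this step has to run and write a new layer.
            cache.add(current)
            executed.append(step)
        # On a hit nothing is written; either way the next step builds on it.
        parent = current
    return parent, executed

cache = set()
steps_v1 = ["install apache", "install php", "add source commit a1"]
steps_v2 = ["install apache", "install php", "add source commit b2"]

_, first_run = build(steps_v1, cache)
_, second_run = build(steps_v2, cache)
print(first_run)    # every step runs on a cold cache
print(second_run)   # only the changed source step runs
```

Note that a change early in the list would alter every later layer’s identity in this model, so ordering steps from least to most frequently changed keeps rebuilds short.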

Explain how a process in a container can only see other processes in the container.

Docker relies heavily on two pieces of Linux kernel technology. The first one is called namespaces. If you run a new process on the Linux kernel, then you’re making a system call to the namespaces: “I want to create a new process.” If you want to create a new network interface, you’re making a call to the network namespace. The kernel assigns you a namespace that has a process and whatever other resources you want. For example, it might have some access to the network. It might have access to some parts of the file system. It might have access just to memory or CPU.

When we create a Docker container, we’re basically making a bunch of calls to the Linux kernel to say, “Can you build me a box? The box should have access to this particular file system, access to CPU and memory, and access to the network, and it should be inside this process namespace.” And from inside that process namespace, you can’t see any other process namespaces outside it.
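To make the visibility rule concrete, here is a small conceptual model of process namespaces. It makes no kernel calls and does not reflect how Linux implements namespaces; the class names, process names, and PID numbering are all illustrative assumptions.

```python
import itertools

class Host:
    """Toy stand-in for the kernel's global view of every process."""
    def __init__(self):
        self.host_pids = itertools.count(1000)
        self.processes = []   # (host_pid, namespace, local_pid, name)

class PidNamespace:
    """A box whose processes get their own PID numbering and their own view."""
    def __init__(self, host):
        self.host = host
        self.local_pids = itertools.count(1)

    def spawn(self, name):
        local_pid = next(self.local_pids)
        host_pid = next(self.host.host_pids)
        self.host.processes.append((host_pid, self, local_pid, name))
        return local_pid

    def ps(self):
        # Only processes created in this namespace are visible from inside it.
        return [(local_pid, name)
                for _, ns, local_pid, name in self.host.processes
                if ns is self]

host = Host()
web, db = PidNamespace(host), PidNamespace(host)
web.spawn("apache2")
db.spawn("mysqld")
web.spawn("php-fpm")

print(web.ps())              # the web container sees only its own processes
print(db.ps())               # the db container sees only mysqld, as PID 1
print(len(host.processes))   # the host still sees all three
```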

The second piece of technology we use is called control groups, or cgroups. These are designed for managing the resources available to a container. They allow us to do things such as “This container only gets 128 Mbytes of RAM” or “This container doesn’t have access to the network.” You can add and drop capabilities as needed, and that makes it fairly powerful to be able to granularly control a container in much the same way you would with a point-and-click VM interface to say, “Get this network interface,” “Get this CPU core,” “Take this bit of memory,” “Access this file system,” or “Create a virtual CD-ROM drive.” It’s a similar level of technology.
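As a rough illustration of what a 128-Mbyte memory cap looks like at the kernel interface, the sketch below computes the file and value involved. It assumes the cgroup v2 layout, where a group’s limit is the `memory.max` file under `/sys/fs/cgroup`; the group name `demo-container` is hypothetical, Docker manages its own hierarchy, and hosts from the time of this interview would have used the older v1 interface. The code only computes the setting and writes nothing.

```python
from pathlib import PurePosixPath

CGROUP_ROOT = PurePosixPath("/sys/fs/cgroup")   # usual cgroup v2 mount point

def memory_limit_setting(group, megabytes):
    """Return the (file, value) pair that would cap a cgroup's memory."""
    limit_bytes = megabytes * 1024 * 1024
    return CGROUP_ROOT / group / "memory.max", str(limit_bytes)

# "demo-container" is a made-up group name used only for this example.
path, value = memory_limit_setting("demo-container", 128)
print(path, value)
```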

Besides files and processes, containers provide an isolated environment for network addresses and ports. For example, I can have Web servers running in multiple containers all using port 80. By default, the container doesn’t expose network ports to the outside. However, they can be exposed manually or intentionally, which is a bit like opening up ports on a firewall such as iptables, right?

That’s correct. Each container has its own network interface, which is a virtual network interface. You can run processes that use network ports inside those containers. For example, I can run 10 Apache containers, each listening on port 80 inside its container. And then, outside the container, I can say, “Expose this port.” And if I want to actually map port 80 in the container to port 80 on the host I can, but obviously I can only do that once, because a given port on the host can be mapped to only one container port. By default, Docker chooses a random port and says, “I’m going to do a network address translation between this port 80 inside the container and, say, port 49154 on the host.”
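The mapping rule can be modeled as a small table of host ports. This is a toy sketch, not Docker’s networking code: the class and container names are hypothetical, and the random range of 49153 to 65535 is an assumption chosen to be consistent with the 49154 example above.

```python
import random

class HostPorts:
    """Toy model of publishing container ports on a single host."""
    def __init__(self, seed=None):
        self.published = {}   # host port -> (container, container port)
        self._rng = random.Random(seed)

    def publish(self, container, container_port, host_port=None):
        if host_port is None:
            # No host port requested: pick a free high port at random.
            free = [p for p in range(49153, 65536) if p not in self.published]
            host_port = self._rng.choice(free)
        elif host_port in self.published:
            raise ValueError(f"host port {host_port} is already in use")
        self.published[host_port] = (container, container_port)
        return host_port

ports = HostPorts()
ports.publish("web1", 80, host_port=80)       # explicit 80 -> 80 mapping
random_port = ports.publish("web2", 80)       # host port chosen for us
try:
    ports.publish("web3", 80, host_port=80)   # second claim on host port 80
    conflict = False
except ValueError:
    conflict = True
print(random_port, conflict)
```

Every container can keep listening on its own port 80; only the host side of the mapping has to be unique.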

For example, I could have multiple Apache processes running on different ports. Then I put a service discovery tool or a load balancer or some sort of proxy in front of it. HAProxy is very commonly used. We can use things such as nginx or service discovery tools such as ZooKeeper, etcd, and Consul that allow you to either proxy the connections or provide a way to say, “I want this application. Query that particular service discovery tool,” or “That application belongs to these 10 containers, and you can choose one of these 10 ports,” which will connect to the Apache process. So it’s a very flexible, scalable model. It’s designed to run complicated applications.
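The “this application belongs to these 10 containers” idea can be sketched as a lookup table with round-robin selection. This is a minimal model and does not use or imitate the API of HAProxy, nginx, ZooKeeper, etcd, or Consul; the registry class, the service name, the address, and the port numbers are all assumptions made for the example.

```python
import itertools

class ServiceRegistry:
    """Toy service discovery: service name -> list of (host, port) backends."""
    def __init__(self):
        self._backends = {}
        self._cursors = {}

    def register(self, service, host, port):
        self._backends.setdefault(service, []).append((host, port))
        # Rebuild the round-robin cursor whenever the backend list changes.
        self._cursors[service] = itertools.cycle(list(self._backends[service]))

    def pick(self, service):
        """Return the next backend for the service, rotating through them."""
        return next(self._cursors[service])

registry = ServiceRegistry()
for host_port in range(49153, 49163):   # ten Apache containers, ten host ports
    registry.register("apache", "127.0.0.1", host_port)

picks = [registry.pick("apache") for _ in range(11)]
print(picks[0], picks[10])   # the eleventh pick wraps around to the first
```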

Those are going to be aimed at running containers on multiple hosts as well, because so far we’ve been talking about Docker on a single host, right?

We launched a prototype called libswarm, like a swarm of wasps. That tool was designed to prototype how you would get Docker hosts to talk to one another, because currently containers on one Docker host have to use the network to talk to one another. But they should have a back channel of communication. You should essentially be able to have Docker hosts communicating together. We look at this like a way to say, “I’m a Docker host and I run Apache Web services,” and another Docker host is saying, “I have a database back end that needs an Apache front end. Can you link one of your containers with one of my containers?” This starts to create some really awesome stories around scalability, autoscaling, and redundancy. And, you start to be able to build really complex applications, which up until now has been very challenging for a lot of organizations.

Great interview and great piece of software. I love Docker. This is so revolutionary, filling a big gap in the development-release cycle.

Next discussion could be about Flockport, now that Docker has been advertised to saturation.

Really great podcast! But a higher audio bitrate would be perfect! With only 80 kbit/s, it’s sometimes quite hard to understand everything (especially for a non-native speaker).

Love it, Docker.

you should tag this podcast with docker, so that it shows up on https://www.se-radio.net/tag/docker/

Great podcast series…this is truly one of the best podcasts out there, keep up the good work. I’ve been a long time listener.